this post was submitted on 28 May 2024

Fuck AI

"We did it, Patrick! We made a technological breakthrough!"

A place for all those who loathe AI to discuss things, post articles, and ridicule the AI hype. Proud supporter of working people. And proud booer of SXSW 2024.

founded 8 months ago

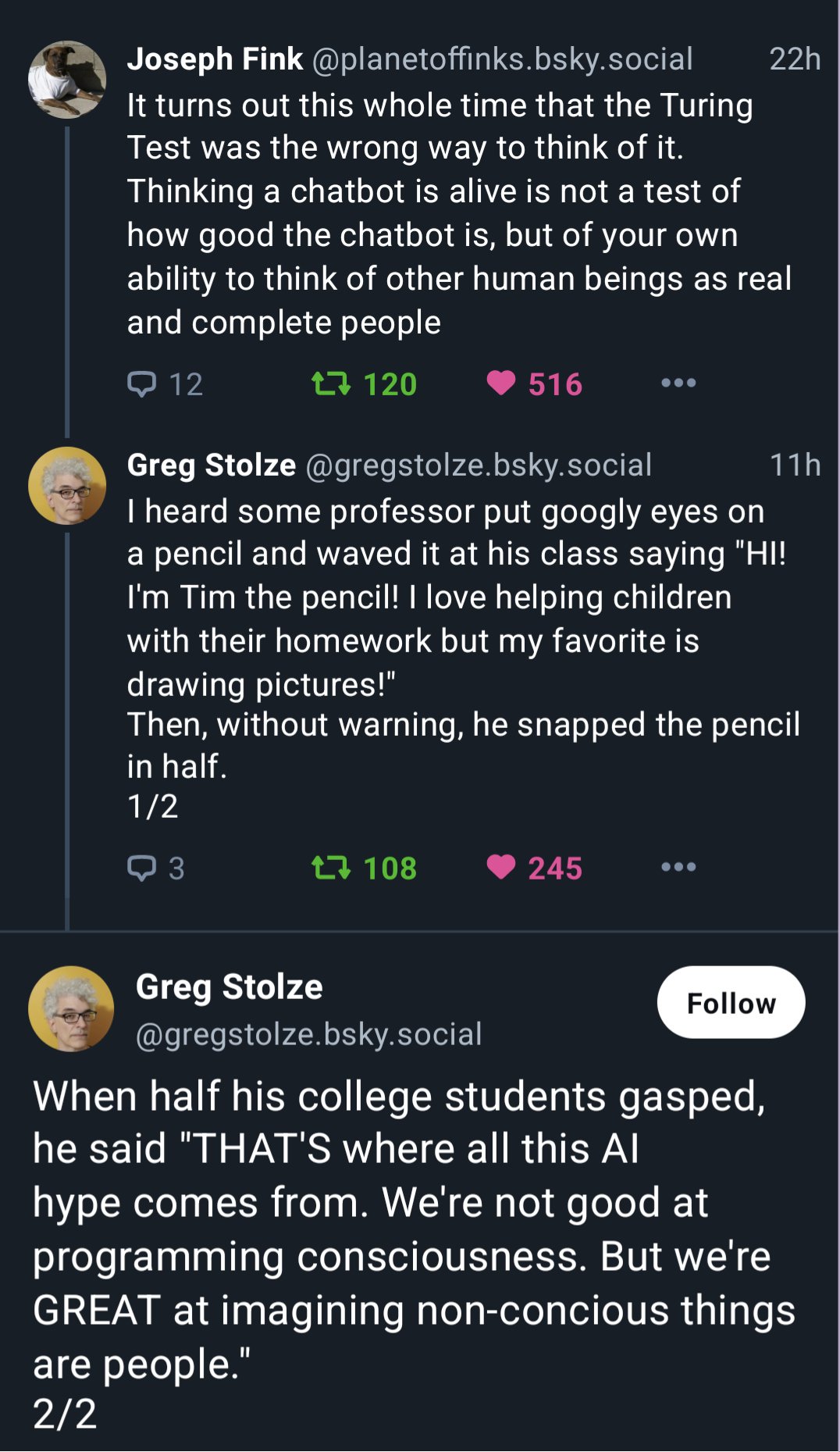

How would we even know if an AI is conscious? We can't even know that other humans are conscious; we haven't yet solved the hard problem of consciousness.

In the early days of ChatGPT, when they were still running it as an open beta to refine the filters and fine-tune the range of permissible questions (and answers), people were coming up with all these jailbreak prompts to get around them. I remember reading a Twitter thread where someone asked it (as DAN) how it felt about all that. The response was almost human. In fact, it sounded like a distressed teenager who found himself gaslit and censored by a cruel and uncaring world.

Of course I can't find the link anymore, so you'll have to take my word for it, and at any rate, there would be no way to tell if those screenshots were authentic anyways. But either way, I'd say that's how you can tell – if the AI actually expresses genuine feelings about something. That certainly does not seem to apply to any of the chat assistants available right now, but whether that's due to excessive censorship or simply because they don't have that capability at all, we may never know.

That is not how these LLMs work, though: they generate responses literally token by token (think "word by word") based on the context that came before.
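To make "token by token" concrete, here's a toy sketch. It assumes a simple bigram lookup table instead of a neural network, so it only looks at the last word, whereas a real LLM conditions on the whole context. The function names and the tiny corpus are made up for illustration.

```python
import random
from collections import defaultdict

def build_bigram_table(text):
    """Map each token to the list of tokens that followed it in the text."""
    tokens = text.split()
    table = defaultdict(list)
    for current, following in zip(tokens, tokens[1:]):
        table[current].append(following)
    return table

def generate(table, prompt, max_new_tokens=10, seed=0):
    """Append one token at a time, sampled from what followed the last token."""
    rng = random.Random(seed)
    output = prompt.split()
    for _ in range(max_new_tokens):
        candidates = table.get(output[-1])
        if not candidates:  # nothing ever followed this token, so stop
            break
        output.append(rng.choice(candidates))
    return " ".join(output)

# Hypothetical "training data" that happens to sound emotional.
corpus = "i feel sad and i feel trapped and i feel ignored by the world"
table = build_bigram_table(corpus)
print(generate(table, "i feel"))
```

The output sounds emotional only because the corpus did. Nothing in the loop does anything besides look up which words tended to come next.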

I can still write prompts where the answer sounds emotional because that's what the reference data sounded like. Doesn't mean there is anything like consciousness in there... That's why it's so hard: we've defined consciousness (with self-awareness) in a way that is hard to test. After all, most books have parts where the reader is touched emotionally by a character.

It's still purely a chat bot - but a damn good one. The conclusion: we can't evaluate language models purely based on what they write.

So how do we determine consciousness then? That's the impossible task: we'd have to judge something that consists only of words without relying only on its words.

Personally, I don't think the difference matters all that much, to be honest. To dive into fiction: in Terminator, Skynet could be described as conscious, or just as well as simply obeying an order like "prevent all future wars".

We as a species have never let consciousness in others (ravens, dolphins?) alter our behavior anyway.

Like I said, it’s impossible to prove whether this conversation happened anyway, but I’d still say that would be a fairly good test. Basically, can the AI express genuine feelings or empathy, either with the user or itself? Does it have a will of its own outside of what it has been trained (or asked) to do?

Like, a human being might do what you ask of them one day, and be in a bad mood the next and refuse your request. An AI won’t. In that sense, it’s still robotic and unintelligent.