This will serve as a backup community for the usenetinvites system.

No pirated content.

This subreddit is for sharing Usenet-related invites only. No account requests/offers, no non-Usenet invites.

No trading, buying, or selling. It is not acceptable to trade, buy, or sell invites. Please share what you have freely.

Posts that contain both an offer and a request for invites will be assumed to suggest a trade.

Start ALL titles with one of the following:

- [W] = Wanted
- [O] = Offering

Don't be a dick.

See rule #1. This is not the place to discuss content that you have illegally obtained or wish to obtain. We will not tolerate repeat offenders and are ban-happy when it comes to this rule.

I might be wrong, but isn't Usenet download-only? As I understand it, there isn't an equivalent of seeding for Usenet.

Personally, I use https://www.fastusenet.org/ and https://www.nzbgeek.info/

Nope, you are correct, and I just revealed that I'm clueless about Usenet in a public forum... to a community of its users 🤡.

Nothing wrong with being here to learn!

Sadly, I have no DS invites left; I think I'll get some when I renew my subscription. Anyway, with Usenet you don't have to seed. After you download the files, you can do whatever you want with them. This wiki might be useful, though.

Although torrents benefit from decentralized file distribution, their usefulness depends on how many seeders are available. Usenet instead keeps files in centralized storage on providers' servers, with retention periods often spanning several years, which is why, unlike torrents, it does not require seeding.

Thanks for the explanation; now it's clicking in my brain why all of the Usenet trackers I hear about are private... They likely wouldn't be around very long if they were distributing Linux ISOs from their own servers to the general public.

Usenet (accessed over NNTP) relies on physical servers at set locations around the world, which normally reside in large datacenters. These physical servers are owned or maintained by the "backbones". For example, Giganews owns and maintains its own servers and sells access to them as either Giganews or Supernews.com. It also sells access to third-party resellers, who brand the access as their own and sell it on to you; Giganews resellers include RhinoNewsgroups, Usenet.net and Powerusenet. These may use their own cache for recent content, but beyond that they all share the same content.

When a "Linux distro" is uploaded to Usenet, it is uploaded to one of these physical servers, either directly through a provider like Giganews or through a reseller. Which one doesn't matter, because these physical servers are connected via propagation agreements with all of the other backbones and caches, be it XSNews or Searchtech Limited. Copying articles between them is called propagation, and it can take up to 40 minutes, sometimes even longer, for the servers to become synced.
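The propagation described above can be pictured as a toy simulation. This is a minimal sketch under loud assumptions: the server names and peering graph are hypothetical, each round every server pulls whatever its peers held at the start of that round, and the loop runs until no server gains anything new. Real servers exchange articles over NNTP peering feeds (for example the `IHAVE` command defined in RFC 3977), which this does not model.

```python
"""Toy model of Usenet article propagation between peered servers.

Assumptions (hypothetical, for illustration only): the server names, the
peering graph and the "one pull per round" timing are invented; real
servers exchange articles over NNTP feeds, not via this function.
"""


def propagate(stores: dict[str, set[str]], peers: dict[str, list[str]]) -> int:
    """Let every server repeatedly pull articles it lacks from its peers.

    Mutates `stores` in place and returns the number of rounds in which
    something moved, i.e. how long it took for the servers to sync.
    """
    rounds = 0
    while True:
        # Snapshot so every pull in a round sees the state at round start,
        # which makes an article advance one hop per round.
        snapshot = {name: set(arts) for name, arts in stores.items()}
        changed = False
        for name, neighbours in peers.items():
            for peer in neighbours:
                # "What do you have that we don't?"
                missing = snapshot[peer] - stores[name]
                if missing:
                    stores[name] |= missing  # "Let's grab that."
                    changed = True
        if not changed:
            return rounds
        rounds += 1


if __name__ == "__main__":
    # Hypothetical chain: poster's provider -> backbone A -> backbone B -> reseller cache.
    stores = {
        "poster_provider": {"linux-distro.part01"},
        "backbone_a": set(),
        "backbone_b": set(),
        "reseller_cache": set(),
    }
    peers = {
        "poster_provider": ["backbone_a"],
        "backbone_a": ["poster_provider", "backbone_b"],
        "backbone_b": ["backbone_a", "reseller_cache"],
        "reseller_cache": ["backbone_b"],
    }
    rounds = propagate(stores, peers)
    print(rounds, stores["reseller_cache"])
```

In this hypothetical four-server chain the article needs one round per hop to reach the reseller cache, which is one way to picture why propagation is not instant.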

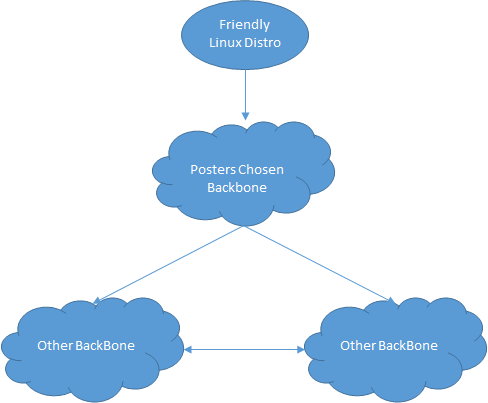

Here's a little diagram:

This is, as you can see, a cyclical process: the poster's chosen provider gets the article, and then other servers go "oh, what do you have that we don't? Let's grab that." This continues until the servers are 99% or more complete. When posters upload, they normally include par files amounting to about 10% of the overall release, so if 5% of the release is damaged due to a poor transfer or similar, your download client can grab these par files and repair the missing sections. Kinda cool!
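The par-file repair mentioned above can be illustrated with the simplest possible parity scheme. This is a deliberately simplified sketch, not how PAR2 works internally: PAR2 uses Reed-Solomon codes, which can rebuild as many damaged blocks as there are recovery blocks, whereas the single XOR parity block assumed here can rebuild only one. The block sizes and data are made up for illustration.

```python
"""Simplified illustration of parity-based repair.

Assumption: one XOR parity block over equal-length data blocks. Real PAR2
files use Reed-Solomon codes instead; this sketch repairs ONE missing block.
"""
from functools import reduce
from typing import List, Optional


def xor_blocks(blocks: List[bytes]) -> bytes:
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def make_parity(blocks: List[bytes]) -> bytes:
    """Compute one parity block over all data blocks."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("blocks must all be the same length")
    return xor_blocks(blocks)


def repair(blocks: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild a single missing block (marked None) from the rest plus parity."""
    missing = [i for i, b in enumerate(blocks) if b is None]
    if len(missing) > 1:
        raise ValueError("one XOR parity block can only repair one missing block")
    fixed = list(blocks)
    if missing:
        present = [b for b in blocks if b is not None]
        # XOR of all surviving blocks and the parity leaves only the lost block.
        fixed[missing[0]] = xor_blocks(present + [parity])
    return fixed


if __name__ == "__main__":
    # Ten 4-byte data blocks plus one parity block: 10% recovery data.
    data = [bytes([i] * 4) for i in range(10)]
    parity = make_parity(data)
    damaged = list(data)
    damaged[3] = None  # pretend block 3 never arrived
    restored = repair(damaged, parity)
    assert restored == data
    print("repaired block 3:", restored[3])
```

With ten data blocks and one parity block (10% overhead), any one lost block out of the ten can be rebuilt, but losing two cannot; real PAR2 sets avoid that limit by carrying multiple Reed-Solomon recovery blocks.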

Wow, thanks for taking the time to type that explanation! Your response was much easier to understand than what I got from Googling.

It's clear now that a private torrent tracker is more in line with what I originally wanted, but I'll hang around the Usenet community to try and learn more, as it seems really cool. I kinda wish I'd found out about it 20 years ago, but it's never too late to learn!

It's certainly never too late to learn. Wishing you luck on your journey :)