I was offline for an extended period and realized how dependent on online services I am. so now that I'm back online, I tried to set up offline copies of various sites via the kiwix project.

the setup should be easy - grab the torrents and download the gigabytes of data; each site is in its own ZIM file. store them in a single folder. leave 'em seeding, help out folks. done.

next, get the kiwix app. some browser-like atrocity delivered via flatpak. I'm half-guessing it's electron, because everything shitty usually is and this is just otherworldly shitty. anyhow, the thing is meant to download the ZIMs directly to the computer, which I don't want, I already got the files on my server, accessible via network share or any other mechanism.

in the myriad of confusing, counter-intuitive and just dogshit UX options there's the option to choose the folder containing files. easy enough, pick the folder from the network share and... nothing happens. clicking on home, search, nothing nets any result. ok, restart the app? yeah nah. the app is now frozen. after a while it just disappears and relaunching it doesn't work.

after dicking around with killing everything kiwix related, the app finally launches - in a frozen state. the server's HDD activity light barely lights up so I'm stumped at what it's doing.

finally, the app decides it's no longer "not responding" and I can try searching. let's try something simple, "macbook" - not found. the entirety of human knowledge on my drive and this little-known thingy somehow got skipped.

in the midst of trying various things, we reboot. upon launching the app, it doesn't have any memory of those ZIM files - a flatpak network folder mapping issue I am sure. still, awesome so far.

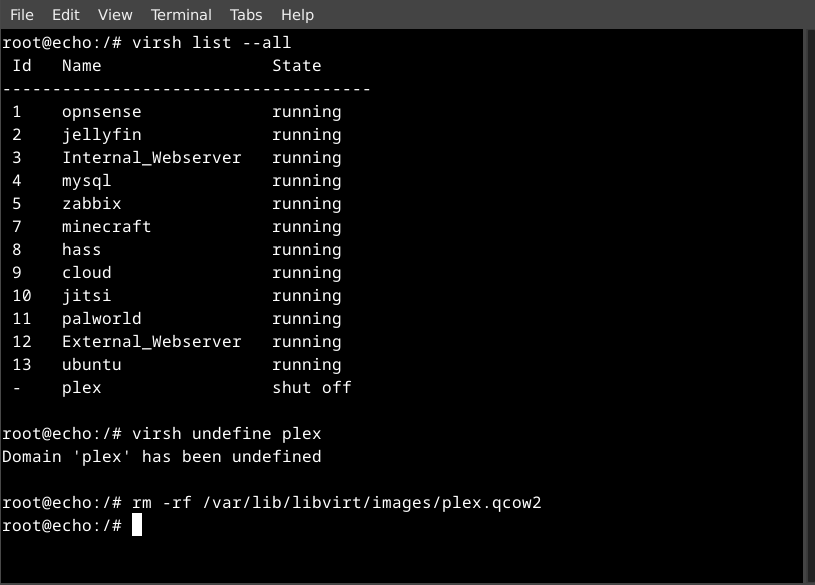

fuck this app, let's try another way. my server is debian and its packages include kiwix-tools, which ships the kiwix-serve binary. looks easy, `kiwix-serve -v -p 7766 /media/data/kiwix/*` and... says we're good, I'll set up a systemd service file later, let's connect the app.
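for the record, here's roughly what that systemd unit could look like. it's a sketch, not something I've battle-tested: the binary path `/usr/bin/kiwix-serve`, the `kiwix` user and the unit filename are all assumptions, only the port and the ZIM folder come from the command above. one thing worth knowing is that systemd doesn't expand globs in `ExecStart`, so the `*` has to go through a shell.

```ini
# /etc/systemd/system/kiwix-serve.service  (filename is an assumption)
[Unit]
Description=kiwix-serve for local ZIM files
# wait for the network so the port can actually be reached
Wants=network-online.target
After=network-online.target

[Service]
# hypothetical unprivileged user; needs read access to /media/data/kiwix
User=kiwix
# systemd does not expand "*" itself, so let a shell do the globbing
# (binary path is an assumption, check with `command -v kiwix-serve`)
ExecStart=/bin/sh -c 'exec /usr/bin/kiwix-serve -v -p 7766 /media/data/kiwix/*'
Restart=on-failure

[Install]
WantedBy=multi-user.target
```

after dropping that in, a `systemctl daemon-reload` followed by `systemctl enable --now kiwix-serve.service` should bring it up on boot.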

except that's not a thing. nowhere I clicked, prodded or tweaked was there an option to enter a network URL.

dogdamit, let's use firefox. server's URL:port and... there we go, a landing page, lists all the ZIMs I got; it's kinda ugly and dated, no way that's a harbinger of doom and hopefully complex use cases like searching the thing will work...

they will not. whatever you search for nets zero fucking results. now, if you open a ZIM file individually, e.g. ifixit, and then search in it, that'll show results. but then, what's the point of the meta search page?

so thanks for reading, I'm looking forward to ditching my ISP and relying on this thing to keep me alive. "bear mauling me what do" - not found.