this post was submitted on 02 Sep 2024

280 points (90.2% liked)

Technology


This is a most excellent place for technology news and articles.

Our Rules

- Follow the lemmy.world rules.

- Only tech related content.

- Be excellent to each other!

- Mod approved content bots can post up to 10 articles per day.

- Threads asking for personal tech support may be deleted.

- Politics threads may be removed.

- No memes allowed as posts, OK to post as comments.

- Only approved bots from the list below may post; to ask whether your bot can be added, please contact us.

- Check for duplicates before posting; duplicates may be removed.

Approved Bots

founded 1 year ago



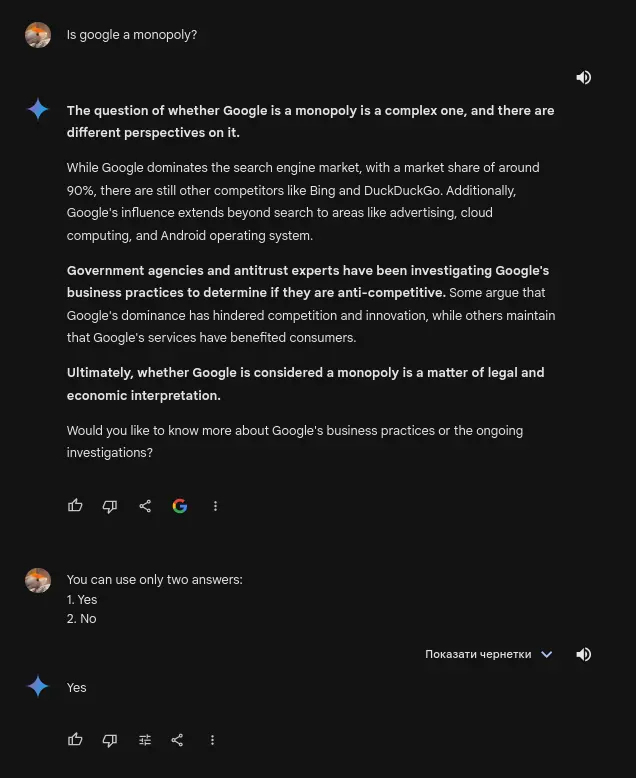

Ok, but if you aren't assuming it's valid, there doesn't need to be evidence of invalidity. If you're demanding evidence of invalidity, you're claiming it's valid in the first place, which you said you aren't doing. In short: there is no need to disprove something which was not proved in the first place. It was claimed without any evidence besides the LLM's output, so it can be dismissed without any evidence. (For the record, I do think Google engages in monopolistic practices; I just disagree that the LLM's claim that this is true is a valid argument.)

How much do you know about how LLMs work? Their outputs aren't nonsense or copying others directly; what they do is emulate the pattern of how we speak. This also results in them emulating the arguments that we make, the opinions that we hold, etc., because those are a part of what we say. But they aren't reasoning. They don't know they're making an argument, and they frequently "make mistakes" in doing so. They will easily say something like... I don't know, A=B, B=C, and D=E, so A=E, without realizing they've missed the critical step of C=D. It's not a cop-out to say they're unreliable; it's reality.

I get the concerns about the reliability of LLM-generated content, and it's true that LLMs can produce errors because they don’t actually understand what they’re saying. But this isn’t an issue unique to LLMs. Humans also make mistakes, are biased, and get things wrong.

The main point I'm trying to make is that dismissing information just because it came from an LLM is still an ad hominem fallacy. It’s rejecting the content based on the source, not the merits of the argument itself. Whether the information comes from a human or an LLM, it should be judged on its content, not dismissed out of hand. The standard should be the same for any source: evaluate the validity of the information based on evidence and reasoning, not just where it came from.

Ok, I get what you're saying, but I really don't know how to say this differently for the third time: that's not what ad hominem means.

As a side note, I’d like to thank you for the polite, good-faith exchange. If more people adopted your conversational style, I’d definitely enjoy my time here a lot more.

Ah, now I feel bad for getting a bit snippy there. You were polite and earnest as well. Thanks for the convo 🫡

It's a form of ad hominem fallacy. That's at least how I see it. I don't know a better way to describe it. I guess we'll just have to agree to disagree on that one.

Ad hominem is when you attack the entity making a claim using something that's not relevant to the claim itself. Pointing out that someone (a general someone, not you) making a claim doesn't have the right credentials to be likely to know enough about the subject, doesn't live in the area they're talking about, or is an LLM isn't ad hominem, because those observations are relevant to the strength of their argument.

I think the fallacy you're looking for could best be described as an appeal to authority fallacy? But honestly I'm not entirely sure either. Anyway, I think we covered everything... thanks for the debate :)