TLDR - Meat has been unfairly blamed by bad (possibly biased) statistical analysis.

some investigators may test many alternative analytic specifications and selectively report results for the analysis that yields the most interesting findings.

when investigators analyze data from observational studies, there are often hundreds of equally justifiable ways of analyzing the data, each of which may produce results that vary in direction, magnitude, and statistical significance

Evidence shows that investigators’ prior beliefs and expectations influence their results [5]. In the presence of strong opinions, investigators’ beliefs and expectations may shape the literature to the detriment of empirical evidence

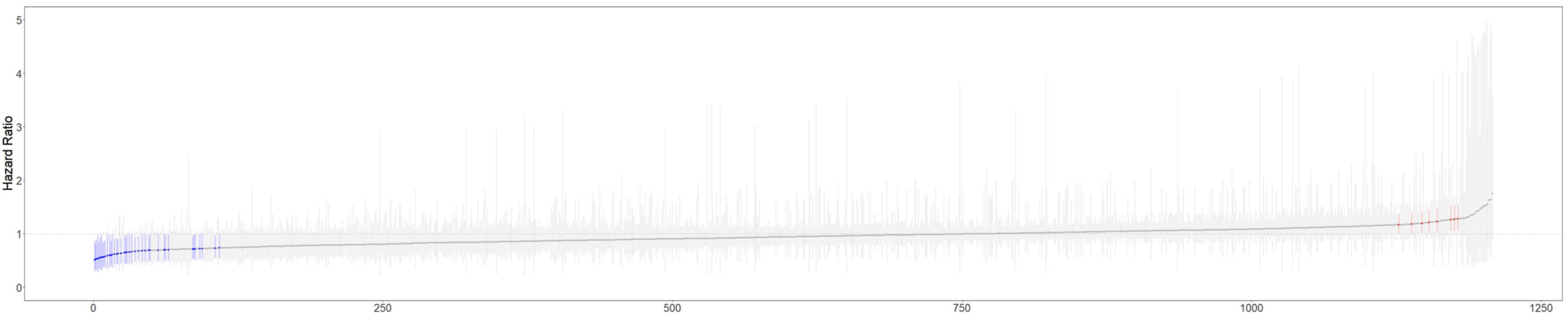

Basically, given all the possible variable permutations, they took a very large sample of input-to-outcome analyses and looked at the resulting hazard ratio for each, demonstrating that you can cherry-pick to get the results you want (good or bad). This is the core weakness of observational studies.
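To make the idea concrete, here is a minimal sketch of the same kind of exercise on made-up data. Everything in it is an assumption for illustration: the cohort is simulated, the covariate names are hypothetical, and it uses a Mantel-Haenszel odds ratio as a simple stand-in for a hazard ratio, so it is not the paper's actual method. The only "analytic choice" varied here is which covariates to adjust for.

```python
import itertools
import math
import random

# Hypothetical covariate names, chosen only for this illustration.
COVARIATES = ("smoker", "active", "older", "high_income")


def simulate(n=4000, seed=0):
    """Synthetic cohort in which meat has NO true effect on death."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        row = {
            "smoker": rng.random() < 0.3,
            "active": rng.random() < 0.5,
            "older": rng.random() < 0.4,
            "high_income": rng.random() < 0.5,
        }
        # Exposure depends on lifestyle, which makes these covariates confounders.
        p_meat = 0.4 + 0.2 * row["smoker"] - 0.15 * row["active"] + 0.1 * row["older"]
        # Death depends on the covariates only, never on meat.
        p_death = 0.08 + 0.10 * row["smoker"] - 0.04 * row["active"] + 0.12 * row["older"]
        row["meat"] = rng.random() < p_meat
        row["death"] = rng.random() < p_death
        rows.append(row)
    return rows


def mantel_haenszel_or(rows, adjust_for):
    """Odds ratio for meat vs. death, stratified on the chosen covariates."""
    strata = {}
    for row in rows:
        key = tuple(row[c] for c in adjust_for)
        # Cell counts: [exposed dead, exposed alive, unexposed dead, unexposed alive]
        cells = strata.setdefault(key, [0, 0, 0, 0])
        index = (0 if row["meat"] else 2) + (0 if row["death"] else 1)
        cells[index] += 1
    num = den = 0.0
    for a, b, c, d in strata.values():
        total = a + b + c + d
        num += a * d / total
        den += b * c / total
    return num / den if den > 0 else math.nan


def specification_curve(rows, covariates=COVARIATES):
    """Run every adjustment set and return (odds_ratio, subset), sorted by odds ratio."""
    results = []
    for k in range(len(covariates) + 1):
        for subset in itertools.combinations(covariates, k):
            results.append((mantel_haenszel_or(rows, subset), subset))
    return sorted(results, key=lambda item: item[0])


if __name__ == "__main__":
    for odds_ratio, subset in specification_curve(simulate()):
        side = "meat looks harmful" if odds_ratio > 1 else "meat looks protective"
        print(f"{odds_ratio:.2f}  {side:22s} adjusted for: {', '.join(subset) or 'nothing'}")
```

Every row of the output comes from the same data set; only the analyst's choice of adjustments differs. Since the simulated meat exposure has no effect on death by construction, any spread in the estimates comes from those choices plus chance. A real analysis has far more knobs (exclusions, exposure definitions, model type), which is how the number of specifications grows so large.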

Curve analysis proves itself a valuable tool for iterating through the many combinations of observational data to reveal the stronger trends.

The left/blue side of the graph shows the outcomes where meat was associated with lower all-cause mortality; the right/red side shows the outcomes where meat was associated with higher all-cause mortality. If you were a hungry researcher, you could publish unending papers pointing either way from this same observational data pool! Hence the constant news cycle driven by dietary agendas rather than by hard-science RCTs.

Observational studies with LOW hazard ratios should be the START of in-depth science and RCTs, not the end of science.

Reminds me of this little book: https://en.wikipedia.org/wiki/How_to_Lie_with_Statistics