this post was submitted on 02 Feb 2024

Microblog Memes

A place to share screenshots of Microblog posts, whether from Mastodon, tumblr, ~~Twitter~~ X, KBin, Threads or elsewhere.

Created as an evolution of White People Twitter and other tweet-capture subreddits.

Rules:

- Please put at least one word relevant to the post in the post title.

- Be nice.

- No advertising, brand promotion or guerilla marketing.

- Posters are encouraged to link to the toot, tweet, etc. in the description of posts.

founded 1 year ago

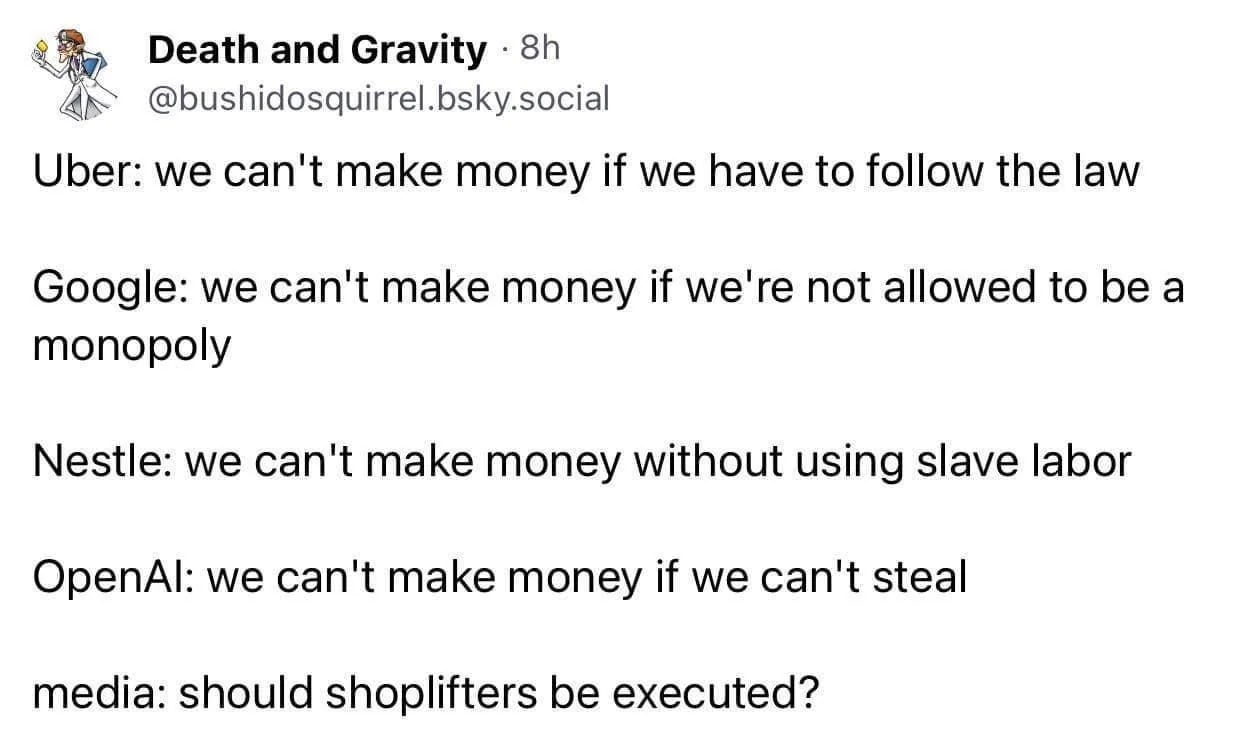

Is sampling and analyzing publicly available data and not storing it considered stealing?

I'm not defending anyone here, but that's just weird. It's also weird to take a meme seriously, but w/e.

Public data still have licenses. E.g., some open-source licenses require you to open-source the software you create using them, something OpenAI doesn't do.

If you're using it as you found it, then yeah. But if I take derived data from it, like word count and word frequency, it's not exactly the same thing, and we call that statistics. Now if I draw associations of how often certain words appear together, and then compound that with millions of other sources to create a map of related words and concepts, I'm no longer using the data as you described, because I'm doing something entirely different with it. What LLMs do is generate new information from their underlying sources.
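For illustration, here is a minimal sketch of the kind of derived statistics described above: word counts, word frequencies, and counts of how often word pairs appear near each other, aggregated across several sources. The tokenizer, the window size of 2, and the sample texts are illustrative assumptions. This is plain co-occurrence counting, not how an LLM is actually trained (LLMs learn neural network weights), so it only shows what "deriving statistics" from text looks like.

```python
from collections import Counter
import re


def tokenize(text: str) -> list[str]:
    # Assumption: a "word" is a lowercase run of letters/apostrophes.
    return re.findall(r"[a-z']+", text.lower())


def word_stats(texts: list[str], window: int = 2) -> tuple[Counter, Counter]:
    """Aggregate word counts and co-occurrence counts over all texts.

    A co-occurrence is an unordered pair of distinct words appearing
    within `window` tokens of each other in the same text.
    """
    counts: Counter = Counter()
    pairs: Counter = Counter()
    for text in texts:
        tokens = tokenize(text)
        counts.update(tokens)
        for i, word in enumerate(tokens):
            for other in tokens[i + 1 : i + 1 + window]:
                if other != word:
                    # Sort so ("cat", "the") and ("the", "cat") share one key.
                    pairs[tuple(sorted((word, other)))] += 1
    return counts, pairs


def frequencies(counts: Counter) -> dict[str, float]:
    # Relative frequency of each word across all sources combined.
    total = sum(counts.values())
    return {word: c / total for word, c in counts.items()}


sources = [
    "The cat sat on the mat.",
    "A cat and a dog sat together.",
]
counts, pairs = word_stats(sources)
print(counts.most_common(3))
print(pairs.most_common(3))
print(frequencies(counts)["cat"])
```

Note that only the counts survive: the original sentences cannot be read back out of `counts` or `pairs`, though with few sources the statistics can still reveal a lot about them.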

In my example, they would still be using the source code to create new software that is not open source, no matter how many Markov chains are behind it.

That's really stretching it, tbh. You're arguing that the cake is made of chicken because it contained whole eggs at some point.

You can't use someone's work for whatever you want just because it's publicly accessible.

I'm actually still not sure how I feel about this.

I can use books to learn a new language. AI can use texts to learn their kind of language in a sense.

I'm not sure where the limit is or should be though.

I don't think that's really the same thing. Most people learning another language aren't doing it specifically so they can turn around and sell translations to millions of customers.

And if they were, they'd probably need to be accredited and licensed, using standardized sources that they pay for, directly or indirectly.

That sounds like a translator to me. And also, they're kind of doing it for free. What they're selling is access to their latest models, their API and their plugins store. They're not exactly selling the information that has been transformed.

Hasn't web scraping been done for like forever, though? How is this any different? You get publicly accessible information and you derive data from it. You're literally not stealing anything or storing it as-is.

It has to be stored in some form for the AI to "learn" from and remember it, and a lot of the debate is around whether AI is actually able to learn, or if it can only really blindly combine 1:1 copies of elements into something derivative.

There's also the debate of whether what humans learn and produce based on influence can be compared to AI, but humans aren't able to consume millions of records in seconds like AI.

They're not storing the original data, and OpenAI even says so itself. LLMs compound derived associations between words and concepts from whatever they analyze, which are further modified by all the other sources they analyze, and that's what gets stored during training. It doesn't matter if it's a few sources or a million sources; it's not storing any of it as-is. It's very much like how we process information ourselves over our entire lives by making generalizations. We don't memorize everything precisely besides the foundational blocks of language, but our neurons do fire in a certain pattern when given a trigger. How is that stealing?

I believe the debate is not around storing the data; nobody, to my knowledge, blames OpenAI for copy-pasting the internet onto their servers. But they are using data that belongs to everyone to produce a product they sell or intend to sell commercially. Quite a bit more tricky! Extending the analogy to us humanses: in order to learn a language we have to buy a book and read it, so we did pay someone for the knowledge we then sell. Did OpenAI pay everyone for everything they fed to their Skynet? Or maybe they used only "open source" stuff, so now they comply with all the licenses attached, do they?

What if an artist got inspiration from a Google image search, without paying the creators for that? I think that's fine, and I don't see why it's suddenly wrong when a machine learning algorithm does it.

A lot of people are indeed accusing OpenAI of stealing because they claim that LLMs can reproduce entire original works, based on misconceptions about how LLMs work. This is why even OpenAI came out stating that their models simply don't store source information. I've seen people make that argument here and in other threads, so I'm assuming that's why it's written like that in the post.

But why should anyone pay to analyze freely available data? Building something new is a whole different process from simply using the data. Like, I don't see search giants paying to build their indexes, even though that's arguably where their money is. And to OpenAI's credit, they're not even selling the data; they're also giving their derived data back for free in its entirety. It sounds like a great deal to me!

I'm not sure if that's true. I'm on my third language, and I can confidently say that anyone can learn a language entirely from the mountains of freely available resources. People are chomping at the bit to teach you their language. Likewise, even if I only used open source to learn to code, I wouldn't need to copy anybody's licenses to analyze their code and figure out how they implemented a feature so that I can build my own. Those are not patented ideas, and it's arguably what LLMs like ChatGPT do. (But I will say that GitHub Copilot is a little different, because that one does seem to pull from repos directly; I think it pulls from GitHub using Bing.)