this post was submitted on 15 Jun 2024

32 points (100.0% liked)

SneerClub


Hurling ordure at the TREACLES, especially those closely related to LessWrong.

AI-Industrial-Complex grift is fine as long as it sufficiently relates to the AI doom from the TREACLES. (Though TechTakes may be more suitable.)

This is sneer club, not debate club. Unless it's amusing debate.

[Especially don't debate the race scientists, if any sneak in - we ban and delete them as unsuitable for the server.]

See our twin at Reddit

founded 2 years ago


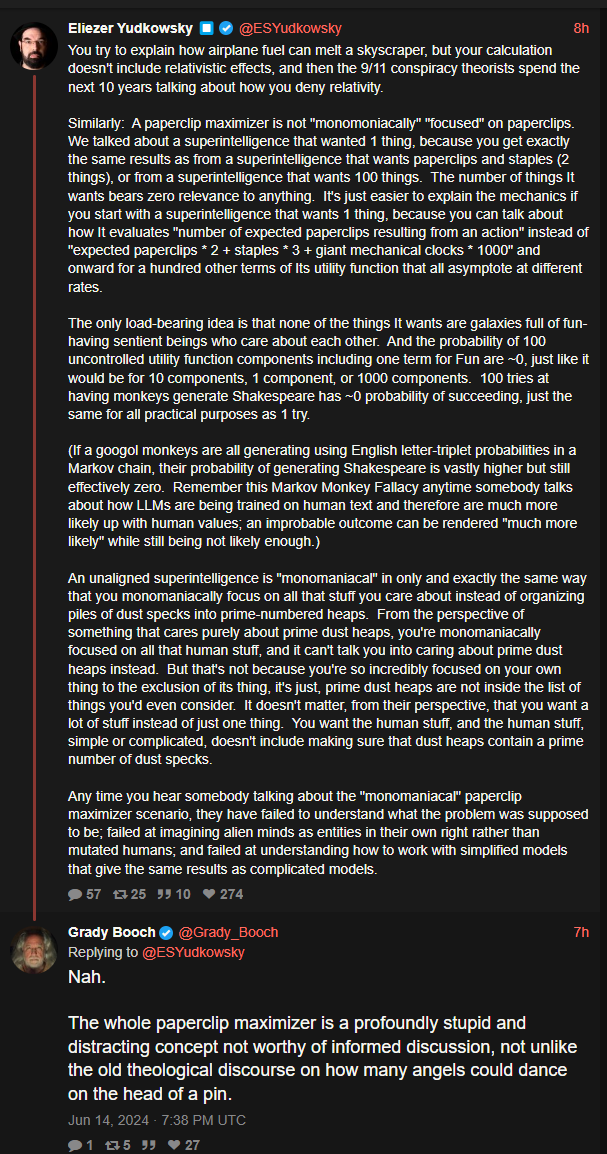

We get it, we just don't agree with the assumptions made. Also love that he is now broadening the paperclips thing into more things, missing the point of the paperclips thing: it abstracts away from the specific wording of the utility function (because, as with disaster-preparedness people preparing for zombie invasions, the actual incident doesn't matter that much for the important things you want to test). It is quite dumb. Did somebody troll him by saying 'we will just make the LLM not make paperclips bro', and he got so broken by this that he is now replying up his own ass with this talk about alien minds?

e: Depressing seeing people congratulate him on a good take. Also "could you please start a podcast". (A Schrödinger's sneer.)

rofl, I cannot even begin to fathom all the 2010-era LW posts where peeps were like, "we will just tell the AI to be nice to us uwu" and Yud and his ilk were like "NO DUMMY THAT WOULDN'T WORK B.C. X Y Z." Fast fwd to 2024, and the best example we have of an "AI system" turns out to be the blandest, most milquetoast yes-man entity, thanks to RLHF (aka the just-tell-the-AI-to-be-nice-bruv strat). Worst of all for the rats, there are no examples of goal-seeking behavior or instrumental convergence. It's almost like the future they conceived on their little blogging site shares very little with the real world.

If I were Yud, the best way to salvage this massive L would be to say "back in the day, we could not conceive that you could create a chatbot good enough to fool people with its output by compressing the entire internet into what is essentially a massive interpolative database, but ultimately these systems have very little to do with the sort of agentic intelligence that we foresaw."

But this fucking paragraph:

ah, the sweet, sweet aroma of absolute copium. Don't believe your eyes and ears people, LLMs have everything to do with AGI and there is a smol bean demon inside the LLMs that is catastrophically misaligned with human values that will soon explode into the super intelligent lizard god the prophets have warned about.