Reddthat Announcements

Main Announcements related to Reddthat.

- For all support relating to Reddthat, please go to !community@reddthat.com

Hello! It seems you have made it to our donation post.

Thank you

We created Reddthat to build a community that can be run by the community. We did not want it to be something that is moderated, administered and funded by 1 or 2 people.

Opencollective -> LiberaPay

After the disappearance of our Fiscal host we have migrated to LiberaPay:

https://liberapay.com/reddthat

This donation goes directly to me without any third parties holding onto it like we did with Opencollective.

Background

In one of our very first posts, titled "Welcome one and all", we talked about what our short term and long term goals are.

In the last 2 years we have carved out a place for all of us to be heard and enjoy. Images, videos, comments, however we communicate, I'm thankful for everyone who calls Reddthat home. Over 5000 users is something I never thought possible.

You are what makes Reddthat worth coming back to.

Donation Links (Updated 2025-06)

- Liberapay: https://liberapay.com/reddthat/

- (best for recurring donations)

- Stripe 3% + $0.3

- Ko-Fi: https://ko-fi.com/reddthat

- (best for once off donations)

- Stripe 3% + $0.3

- 5% on all recurring (Unless I pay them $8/month for 0% fee)

- Crypto:

- XMR Directly:

4286pC9NxdyTo5Y3Z9Yr9NN1PoRUE3aXnBMW5TeKuSzPHh6eYB6HTZk7W9wmAfPqJR1j684WNRkcHiP9TwhvMoBrUD9PSt3

- BTC Directly:

bc1q8md5gfdr55rn9zh3a30n6vtlktxgg5w4stvvas

- LTC Directly:

ltc1qfs92f6d80jelhj6avwf9u7q5p7ldqqzg0twunw

Current Plans & Descriptions

- Keep the server running smoothly!

- Our S3 storage is growing and will only continue to grow.

- Hopefully we can drop the EU Server which acts as a proxy for LW traffic now that Parallel Sending might be possible

- We currently are paying slightly more for our main server than we should, and I'll keep an eye out for a new and better deal.

Annual Costings:

Our current costs are (Updated: 2025-06-15)

- Domain: 15 Euro (~$26 AUD)

- Server: $1080 AUD

- Wasabi Object Storage: $180 USD (~$290 AUD)

- Total: ~1386 AUD per year (~$116/month)

That's our goal. That is the number we need to achieve in funding to keep us going for another year.
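For anyone who wants to check the maths, here is a minimal sketch that adds up the rounded AUD line items listed above. The dictionary keys and function names are made up for illustration. Because the figures are the rounded ones from the list, the sum may differ by a few dollars from the quoted total.

```python
# Annual running costs in AUD, using the rounded figures from the list above.
# The key names are illustrative only.
annual_costs_aud = {
    "domain": 26,
    "server": 1080,
    "object_storage": 290,
}


def annual_total(costs):
    """Sum every yearly line item."""
    return sum(costs.values())


def monthly_average(costs):
    """Spread the yearly total evenly over 12 months."""
    return annual_total(costs) / 12


total = annual_total(annual_costs_aud)
per_month = monthly_average(annual_costs_aud)
print(f"Annual: ~${total} AUD, monthly: ~${per_month:.0f} AUD")
```

Swapping in the exact converted amounts instead of the rounded ones would tighten the estimate.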

Cheers,

Tiff

PS. Thank you to our donators! Your names will forever be remembered by me:

Last updated on 2025-03-22

Current Recurring Gods (🌟)

- Nankeru (🌟)

- Incognito (x4 🌟🌟🌟🌟)

- Guest (x3 🌟🌟🌟)

- Bryan (🌟)

- djsaskdja (🌟)

- MentallyExhausted (🌟)

- asqapro (🌟)

- Bitwize01 (🌟)

Previous Heroes

- Guest(s) x13

- souperk

- MonsiuerPatEBrown

- Patrick x4

- Stimmed

- MrShankles

- RIPSync

- Alexander

- muffin

- Dave_r

- Ed

- djsaskdja

- hit_the_rails

- ThiccBathtub

- Ashley

- Alex

Opencollective no more, Hello Liberapay!

- Reference Open Collective Post: https://opencollective.com/reddthat/conversations/we-have-migrated-to-liberapay-4pzb7zgp#comment-1749981430361

- Our Liberapay: https://liberapay.com/reddthat

- Which goes directly to me

- You can also do ko-fi: https://ko-fi.com/reddthat

- Which goes via Paypal (I think)

- Or send it directly via Crypto if that is your thing.

- See the main donation page for the addresses

Summary:

It has now been 8 weeks since our last contact with our fiscal host on Opencollective and I have made the hard decision to migrate away to Liberapay.

"New" Donation Platform

When I started Reddthat I wanted to show everything that we were doing in a transparent way. We have the modlog which shows everything that happens on Reddthat and the Lemmy-verse and I wanted to bring that to the financial side of things as well.

So I looked around at the payment processors. Patreon/etc had higher fees than what I expected and as they were donations I really didn't want to lose up to 8% of your good will!

Liberapay was the obvious choice originally but it turns out they are a platform where people can donate directly to people. Everyone needs to link their Paypal, Stripe, or EU Bank account to accept transactions on Liberapay.

This also looked a bit hard and I don't really trust PayPal, especially if out of the blue I started getting $50 "donations". Working in marketing I've heard of PayPal closing down accounts and holding money for ransom while you have to consistently prove you are who you are and jump through their hoops.

As I was already an "active" member of the wider Fediverse, I looked up how these services managed to accept donations and eventually stumbled upon Opencollective. This was exactly what I wanted. An open and inviting platform to handle all the money side of things while providing a truly transparent account of what is happening.

Opencollective solved all of the things I could hope for while also solving a secondary issue. Taxes. Something I have no idea about when it comes to donations, but will surely need to be up to speed on it within the next month!

After reading a few documents, you need to pick a Fiscal Host on Opencollective, or prove you are a "business?" (or something like that, I don't remember) so you can become a fiscal host and accept money. This looked to be extra work on top of getting Reddthat up and running so I went looking for a local Australian fiscal host, and found one who also had 0% fees! They would accept all the donations and then transfer it back to me as a reimbursement, or I could even get them to pay invoices! This ticked all my boxes. Even if the host eventually ended up charging a small 1-2% I would have been happy with that, as our relationship over these last two years has been great. We even donated back to them for a few months in the early days.

~~Fast forward to today. We now have 2 months I have paid for without being reimbursed by our host. For a total of A$285.49. That would have left A$518.40 in our account for our future months~~

Update: We have managed to get all of our donations back!

Unfortunately since the payment in April I haven't heard anything from them and so we are saying Goodbye to OpenCollective and Hello to Liberapay! I'll hold out hope that they will come back online, or they've gone away for a huge holiday without internet, but this is a lesson we can all learn from.

I would like to continue using OpenCollective but the thought of losing more money the same way through another fiscal host would be too much. And the alternative of having to set up more paperwork to become our own fiscal host, and getting hit with a transaction fee for taking your donations and then "paying" myself, just doesn't seem worth it. So Liberapay it is.

I've set up Liberapay and I've set up Stripe, and given them a fair amount of my details ( 😥 ). So you should be able to donate directly to me and we'll never have any of these unfortunate issues relating to other entities holding our money.

I feel like I've let you all down with ~$500+ disappearing into the ether. We can only learn from our mistakes and moving to Liberapay, Ko-Fi, or Crypto, where I hold the funds is the best way forward to ensure stability.

I'd like to remind everyone that donations are completely optional but they certainly help when it comes to a "big" instance like ours. Lemmy is growing every year and it's great to see everyone and all the things they do and the communities we have created!

And now back to the regular update.

June 2025

We've rolled out v0.19.12 for Lemmy which contained a few bugfixes as well as Peertube federation support, so now you should be able to see more Peertube videos if that is your thing.

We turned 2 this month. The 6th of June was our birthday and it was an absolute banger! It was so big we all blacked out at the party and forgot it happened! As June is a busy month for me, I made sure to renew our domain to make sure nothing happened. ;)

Since last update Lemmy World has successfully turned on the Parallel Sending which we (Reddthat) instigated way back when we started lagging behind and we all found out that sending internet traffic from one side of the world to the other sequentially might be bad. This means we no longer have need for our proxy system and it has saved us 4Euro/month (and the extra management overhead).

This has also had the same effect on our sister instances in AU/NZ, allowing them to not lag behind too.

I hope you all had a great year and here's to another amazing one!

Cheers,

Tiff (& The Reddthat Admin Team)

PS. I believe I have managed to cancel all recurring donations. So if you wish to keep donating please do come over to Liberapay. <3

PPS. Did I mention that I've enabled Secret Donations for Liberapay, so I won't be able to know who you are!

April is here!

So much has happened since the last update: we've migrated to a new server, failed to update to a new Lemmy version, automated our rollouts, and fought with OVH about contracts. It's been a lot.

Strap in for story time about the upgrade, or skip till you see the break for the next section.

So good news is that we are successfully on v0.19.11.

The bad news is that we had an extended downtime.

Recently I had some extra time to completely automate the rollout process so Reddthat didn't rely solely on me being on 1 specific computer which had all the variables that were needed for a deployment.

As some people know I co-manage the lemmy-ansible repository. So it wasn't that hard to end up automating the automation. Now when a new version is announced, I update a file/files, it performs some checks to make sure everything is okay, and I approve and roll it out. Normally we are back online within 30 seconds as the lemmy "backend" containers do checks on start to make sure everything is fine and we are good to go. Unfortunately it never came back up.

So I reverted the change thinking something was wrong with the containers and the rollout proceeded to happen again. Still not up :'( Not having my morning coffee and being a little groggy after just waking up.

Digging into it, our database was in a deadlock. Two connections were attempting to do the same work at the same time, which resulted in it being locked up and not processing any queries.

Just like Lemmy World, when you are "scaling" sometimes bad things can happen. re: https://reddthat.com/post/37908617.

We had the same problem. When rolling out the update two containers ended up starting at the same time and both tried to do the migrations instead of realising one was already doing them.

After quickly tearing it all down, we started only 1 container to perform the migration, and once that had finished we started everything else and were back online.

Going forward we'll probably have to have a brief downtime for every version to ensure we don't get stuck like this again. But we are back up and everything's working.
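The "one container first, then everything else" ordering described above can be sketched roughly like this. It is only an illustration of the idea, not our actual rollout tooling: `staged_rollout`, `start` and `wait_until_healthy` are hypothetical names, and the two callbacks stand in for whatever really starts a container and checks its health.

```python
from typing import Callable, List


def staged_rollout(
    replicas: List[str],
    start: Callable[[str], None],
    wait_until_healthy: Callable[[str], bool],
) -> List[str]:
    """Start one replica alone so only it runs the migrations, then the rest.

    `start` and `wait_until_healthy` are hypothetical hooks supplied by the
    caller; they are not part of Lemmy or lemmy-ansible.
    """
    if not replicas:
        return []

    migrator, rest = replicas[0], replicas[1:]

    # Only one container is running while the database migrations happen.
    start(migrator)
    if not wait_until_healthy(migrator):
        raise RuntimeError(f"{migrator} did not become healthy; not starting the rest")

    started = [migrator]
    # Migrations are done, so the remaining replicas can start safely.
    for name in rest:
        start(name)
        started.append(name)
    return started


if __name__ == "__main__":
    log = []
    staged_rollout(
        ["lemmy-1", "lemmy-2"],
        start=lambda name: log.append(f"start {name}"),
        wait_until_healthy=lambda name: log.append(f"healthy {name}") or True,
    )
    print(log)
```

The trade-off is the brief downtime mentioned above: nothing else starts until the first container reports healthy.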

Now for the scheduled programming.

OVH

OVH scammed me out of the Tax on our server renewal last month. When our previous 12 month contract was coming to an end we re-evaluated our finances and were found wanting. So we ended up scaling down to a more cost-effective server and ended up being able to pay in AUD instead of USD, which will allow us to stay at a single known price and not fluctuate month to month.

Unfortunately I couldn't cancel the contract. The OVH system would not let me click terminate. No matter what I did, what buttons I pressed, or how many times I spun my chair around it wouldn't let me cancel. I didn't want to get billed for another month when we were already paying for the new server. So a week before the contract ended I sent a support ticket to OVH. You can guess how that went. The first 2 responses I got from them after 4 days were "use the terminate feature". They didn't even LOOK at the screenshots clearly outlining the steps I had taken and the generic error... So I get billed for another month... and then have to threaten them with legal proceedings. They then reversed the charge. Except for the Tax. So I had to pay 10% of the fee to cancel our service. Really unhappy with OVH after this ordeal.

Automated rollouts

I spent some time after our migration ensuring that we have another system set up which will be able to roll out updates. So we are not dependent on just me and my one random computer :P All was going very well until an upgrade with database migrations happened. We'll be working on that soon to make sure we don't have unforeseen downtime.

Final Forms

Now that the dust has settled and we've performed the migrations starting next month I'll probably go back to our quarterly updates unless something insane happens. (IE: Lemmy drops v1 👀 )

We also modified our "Reddthat Community and Support" community to be a Local Only community. The original idea for the community was to have a place where only Reddthat could chat, but back when we started out that wasn't a thing! So now if you want to voice your opinion to other Reddthat users please feel free to, knowing other instances won't come in and derail the conversation.

As a reminder we have many ways to donate if you are able and feel like it! A recurring donation of $5 is worth more to me than a once-off $60 donation. Only because it allows me to forecast the year and work out when we need to do donation drives or relax knowing everything in its current state will be fine.

- Open Collective (preferred)

- Kofi

- Litecoin Direct

- Monero Direct (preferred)

Cheers,

Tiff

We just successfully upgraded to the latest Lemmy version, 0.19.10, probably the last before the v1 release.

This addresses some of the PM spam that everyone has been getting. Now when that user is banned and we remove content it also removes the PMs. So hopefully you won't see them anymore!

Over the next couple of days I will be planning for our migration to our new server as our current server's contract has ended. I expect the downtime to last for about an hour, if not shorter. You'll be able to follow updates for the migration on our status page at https://status.reddthat.com/

Normally this update would be a week in advance and more nicely formatted, but it turns out the contract ends on the 25th and I don't want to get charged for another month at a higher rate when I just purchased the new server.

See you on the other side,

Tiff

EDIT:

22 Mar 2025 02:42: I'm going to start the migration in 5 mins (@ 3:00)

22 Mar 2025 03:01: that was the fastest migration I've ever done. pre-seeding the server and Infra as Code is amazing!

We've turned off our crypto donation p2pool (as no-one was using it), and two of our frontends, alexandrite and next (for the same reasons)

Time to celebrate with some highly accurate Australian content:

Hello Reddthat! We are back for another update on how we are tracking. It's been a while eh? Probably because it was such smooth sailing!

In the middle of February we updated Lemmy to v0.19.9 which contained some fixes for federating between Mastodon and Lemmy, so hopefully we will see less spam and more interaction from the larger Mastodon community. While that in and of itself is a nice fix, the best fix is the recent thumbnail fix! Thumbnails now have extra logic around generating them and now have a higher chance of actually being created! Let us know if you think there has been a change over the past month-ish.

Budget & Upcoming Migration

Reddthat has been lucky to have such a great community that has helped us stay online for over a year and if you can believe it, in just a few more months it will be 2 years, if we can make it.

Our costs have slowly increased over the years as you can all see by our transactions on OpenCollective (https://opencollective.com/reddthat). We've managed to reduce some costs in our S3 hosting after it ballooned out and bring it down to a more manageable level. Unfortunately as well, the current economic issues have resulted in the Australian dollar slipping further and as we pay everything in USD or EUR it has resulted in slightly higher costs on a month-to-month basis.

Our best opportunity to keep online for the foreseeable future is to downsize our big server from a 32GB ram instance to a 16GB ram instance which will still provide enough memory that we will be able to function as we currently do while not affecting us in a meaningful way.

This means we'll need to reassess whether running all our different front ends is useful, or whether we only choose a few. Currently I am looking to turn off next and alexandrite. If you are a regular user of these frontends and prefer them please let me know, as from our logs these are the least used while also taking up the most resources. (Next still has bugs regarding caching every single image).

We can get a VPS for about A$60-70 per month which will allow us to still be as fast as we are now while saving 40% off our monthly costs. This will bring us to nearly 90% funded by the community. We'll still be slowly "losing" money from our open collective backlog but we'll have at least another 6 months under our belt, if not 12 months! (S3 costs and other currency conversion notwithstanding).

All of this will happen in late March or early April as we will need to make sure we do it before the current contract is up so we don't get billed for the next month. Probably the 29th/30th if I don't fall asleep too early on those days.

It'll probably take around 45mins to 60mins but if I get unlucky maybe 2 hours.

Age Restriction

Effective immediately, everyone on Reddthat needs to be at least 18 years old, and further interaction on the platform confirms you are over the age of 18 and agree with these terms.

If you are under the age of 18 you will need to delete your account under Settings

This has also been outlined in our signup form that has been updated around the start of February.

Australian & UK Policy Changes

It seems the UK has also created their own Online Safety Act that makes it nearly impossible for any non-corporation to host a website with user generated content (UGC). This is slightly different to the Australian version where it specifically targets Social Media websites.

Help?

I would also like your help!

To keep Reddthat online, and to help comply with these laws, if you see content or user accounts that appear to belong to someone under the age of 18, please report the account/post/content citing that the user might be under the age of 18.

We will then investigate and take action if required.

Thanks everyone

As always keep being awesome and having constructive conversations!

Cheers,

Tiff!

PS. Like what we are doing? Keep us online for another year by donating via our OpenCollective or other methods (crypto, etc) via here

Let's bring in the new year with some maintenance.

We are going to update Lemmy to the latest version 0.19.8

To perform this there will be a downtime of 30 to 45 minutes while our database updates.

Unless everything goes wrong we'll be up within the hour.

See you on the other side.

Tiff

Edit 1:

We are all done!

Edit 2:

Frontends didn't start correctly and should be sorted now

Hello Reddthat-thians!

As always here is our semi-whenever there is news update. I would, as always like to thank you all for being here and for the kind support we received last time I made an update.

We hit a couple of milestones this last quarter:

- Our first BTC transaction was received! Thank you anonymous!

- Lemmy released two versions (which we have yet to update to, more below)

- I only restarted the services once the whole quarter as I thought we were down/stuck.

Lemmy 0.19.7

The latest update brings some fixes to Lemmy as well as ~~new features such as Private/Invite only communities. I can't wait to see what this does to help people find safe spaces and to self regulate.~~ Edit: that's in 0.20.0. The only new features (worth talking about imo) are parallel sending and allowing people to have 1000 characters in their bio.

This update is not live yet as the update requires a 30-60 minute database update that I want to test on a backup to make sure we can safely update.

Other big instances have already done the update and most things went successfully so I'm confident that we can update without much fuss but I'd like to actually enjoy my holidays rather than spending 4 hours debugging db migrations! So, testing first.

Users

Something I think we struggle with is our proposition. As we have an opinionated view on what our community should be which doesn't always resonate when trying to sign up. With "only" 100 users active daily compared to other servers with over 1k it puts into perspective that not everyone agrees with our policies, but there are certainly people who do!

As such I'm open to ideas on growing our userbase or ideas on rephrasing our signup page, and sidebar.

Australian Social Media Laws

Here is the biggest news. Last week the Australian Government passed a new law that requires Social Media to no longer be accessible for kids under the age of 16. As such we will need to design a system & possibly modify Lemmy to be compliant with these laws.

I will not debate whether this is good or bad, because at the end of the day, we only have a few options: comply with these laws, don't comply and turn off reddthat, sell / handoff to another admin.

This has been a fun side project for me and I want to continue it. As such I'm looking into ways to comply with the local laws to ensure that Australian minors are blocked.

For Reddthat, this law requires social media to take "reasonable" steps to ensure children under the age of 16 are not given an account. They do not define what reasonable means and leave it up to the social media platforms to define it. Really helpful for those indie social media platforms...

I was thinking that we might technically not be defined as a social media platform, thus skirting around the rule. Unfortunately, the law defines a social media platform as any site whose primary or significant purpose is to connect 2 or more users, that allows end-users to link to or interact with other end-users, and that allows end-users to post content.

So Lemmy, Mastodon, Bluesky et al will be affected.

Researching it more leads to the idea which puts the onus on social media companies to continually verify accounts, so they take a proactive step to verify accounts that are believed to be accessed by minors under 16. Thus you need to prove you are above 16 years old. The cherry on top? You are not allowed to use a government issued ID to do it.

Thinking back to when you were 16 years old, what forms of identification did you have? A driver's licence? Maybe a passport? A school ID card? The last one (school ID) would be the only valid ID you could use to verify yourself that isn't government issued in this instance. And good luck if you don't go to school anymore and started in the workforce when you're 16.

Needless to say this is going to be a recurring theme over the next year and I will keep you all informed about it with our updates.

Future Features

A general outline of what I'm hoping to achieve is more controls in Lemmy to:

- have accounts in a 'monitored' state

- have a way to customise approval processes

- have accounts use a trust rating based on age, post/comment numbers, etc.

- this would help with spam as well (obviously this would need a lot of factors)

- have automod built in (or actually setup correctly for us)

Obviously these are things that will need Lemmy development to help facilitate and I'll be creating a few issues over the weeks once I've fleshed out what a solution might look like.

If you have ideas please share! Let's start the conversation on what the processes would look like to help solve our issues.

Financials

Thanks to the big donation drive from last time I posted we are still looking healthy enough in the financials to last until May next year! Which is good as our server plan renews in April. At that time we will be downgrading to a smaller instance as we may have over exaggerated when purchasing last April. We'll obviously know more closer to April next year about our financials and what we can afford to ensure we are cost neutral (if possible!).

Our LemmyWorld proxy also is an extra cost that we never budgeted for and has been ticking away successfully. Maybe by April LW will have upgraded to >0.19.5 which will give us parallel sending allowing us to remove the proxy.

Our object storage is humming along but costs are creeping up. I'll be doing an audit and possibly look into doing a cost analysis to see if other object storage solutions would be cheaper. But for less than $20/m it's probably not even worth my time...

As a reminder we have many ways to donate if you are able. A recurring donation of $5 is worth more to me than a once-off $60 donation. Only because it allows me to forecast the year and work out when we need to do donation drives or relax knowing everything in its current state will be fine.

- Open Collective (preferred)

- Kofi

- Litecoin Direct

- Monero Direct (preferred)

Note: while you still can transfer me Bitcoin, I have removed it as an option because of the current transaction fees. Monero or Litecoin offer transaction fees as low as $0.005 so they are the preferred options compared to the $5 transaction fee of Bitcoin.

Conclusion

You are awesome. Posting, Commenting, and interacting with communities on Reddthat or through Reddthat makes it enjoyable every time to write these updates. So keep being awesome, even if you are a lurker!

If I were not hosting in Australia I would still be required to conform to laws if I were to allow Australian people to use Reddthat. Blocking all of Aus would be an option. But we need to ask ourselves does that fall under our values or ideals? (No obviously). Neither does requiring people to verify they are over a certain age. But we'll see what's to become.

This is the same as how CCPA or GDPR are still enforceable while we are all the way on the other side of the world.

This doesn't require a knee jerk reaction but requires serious thinking, whiteboarding and well thought out communication.

As always,

Cheers,

Tiff

PS Happy Holidays!

We had a brief outage today due to the server running out of space.

I have been tracking our usage but associated it with extra logging and the extra build caches/etc that we've been doing.

Turns out the problem was the frontend Next-UI which has been caching every image since the container was created! All 75GB of cached data!

Once diagnosed it was a simple solution to fix. I'm yet to notify the project of this error/oversight and I'll edit this once Issues/PRs are created.

I also haven't looked at turning the caching off yet as my priority was recovering the main Reddthat service.

Thanks all for being here!

Tiff

Hello. It is I, Tiff. I am not dead contrary to my lack of Reddthat updates 😅 !

It's been a fun few months since our last update. We've been mainlining those beta releases, found critical performance issues before they made it into the wider Lemmyverse and helped the rest of the Lemmyverse update from Postgres 15 to 16 as part of the updates for Lemmy versions 0.19.4 and 0.19.5!

Thank-you to everyone who helped out in the matrix admin rooms as well as others who have made improvements which will allow us to streamline the setup for all future upgrades.

And a huge thank you to everyone who has stuck around as a Reddthat user too! Without you all this little corner of the world wouldn't have been possible. I haven't been as active as I should be for Reddthat; moderating, diagnosing issues and helping other admins has been taking the majority of my Reddthat allocated time. Creating these "monthly" updates should... be monthly at least! So I'll attempt to start posting them monthly, even if nothing is really happening!

High CPU Usages / Long Load Times

Unfortunately you may have noticed some longer page load times with Reddthat, but we are not alone! These issues are with Lemmy as a whole! Since the 0.19.x releases many people have talked about Lemmy having an increase in CPU usage, and they have the monitoring to prove it too. On average there was a 20% increase, and for those who have a single-user instance this was a significant increase. Especially when people were using a Raspberry Pi or some other small form factor device to run their instance.

This increase was one of the many reasons why our server migrations were required a couple months ago. There is good news believe it or not! We found the issue with the long page load times, and helped the developers find the solution! -

This change looks like it will be merged within the next couple days. Once we've done our own testing, we will backport the commit and start creating our own Lemmy 'version'. Any backporting will be met with scrutiny and I will only cherry-pick the bare minimum to ensure we never get into a situation where we are required to use the beta releases. Stability is one of my core beliefs and ensuring we all have a great time!

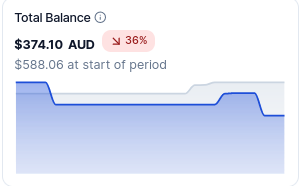

Donation Drive

We need some recurring donations!

We currently have $374.10 and our operating costs have slowly been creeping up over the course of the last few months. Especially with the current currency conversions. The current deficit is $74. Even with the amazing 12 current users we will run out of money in 5 months. That's January next year! We need another 15 users to donate $5/month and we'd be breaking even. That's 1 coffee a month.
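The numbers above can be double-checked with a minimal sketch like the one below. It assumes the $74 deficit is the monthly shortfall left over after existing recurring donations; the function names are made up for this example.

```python
import math


def months_of_runway(balance, monthly_deficit):
    """How many months the balance lasts at a fixed monthly shortfall."""
    if monthly_deficit <= 0:
        return math.inf  # no shortfall, so the balance never runs out
    return balance / monthly_deficit


def donors_to_break_even(monthly_deficit, donation):
    """Smallest number of extra recurring donors that covers the shortfall."""
    return math.ceil(monthly_deficit / donation)


balance = 374.10   # current balance from the post
deficit = 74.00    # assumed to be the net monthly shortfall
print(math.floor(months_of_runway(balance, deficit)))  # 5 full months
print(donors_to_break_even(deficit, 5))                # 15 donors at $5/month
```

Fifteen donors at $5 bring in $75 a month, which just covers the $74 shortfall.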

If you are financially able please see the sidebar for donation options, go to our funding post, or go directly to our Open Collective and sign up for recurring donations!

Our finances are viewable to all and you can see the latest expense here: https://opencollective.com/reddthat/expenses/213722

- OVH Server (Main Server) - $119.42 AUD

- Wasabi S3 (Image Hosting) - $16.85 AUD

- Scaleway Server (LemmyWorld Proxy) - $6.62 AUD

Scaleway

Unfortunately until Lemmy optimises their activity sending we still need a proxy in the EU, and I haven't found any server that is cheaper than €3.99. If you know of something with 1GB RAM with an IPv4 that's less than that let me know. The good news is that Lemmy.ml is currently testing their new sending capabilities so it's possible that we will be able to eventually remove the server in the next year or so. The biggest cost in Scaleway is actually the IPv4. The server itself is less than €1.50 so if lemmy.world had IPv6 we could in theory save €1.50/m. In saying all this, that saving per month is not a lot of money!

Wasabi

Wasabi S3 is also one of those interesting items where in theory it should only be USD$7, but in reality they are charging us closer to USD$11. They charge a premium for any storage that is deleted before 30 days, as they are meant to be an "archive" instead of a hot storage system.

This means that all images that are deleted before 30 days incur a cost. Over the last 30 days that has amounted to 305GB! So while we don't get charged for outbound traffic, we are still paying more than the USD$7 per month.

We've already tried setting the pictrs caching to auto-delete the thumbnails after 30 days rather than the default 7 days, but people still upload and delete files, and close out their accounts and delete everything. I expect this to happen and want people to be able to delete their content if they wish.

OVH Server

When I migrated the server in April we were having database issues. As such we purchased a server with more memory (ram) than the size of the database, which is the general idea when sizing a database. Memory: 32 GB. Unfortunately I was thinking on a purely technical level rather than what we could realistically afford and now we are paying the price. Literally. (I also forgot it was in USD not AUD :| )

Again, having the extra RAM gives us the ability to host our other frontends, trial new features, and ensure we are going to be online in case there are other issues. Eventually we will also increase our Lemmy applications from 1 to 2 and this extra headroom will facilitate this.

Donate your CPU! (Trialing)

If you are unable to donate money that is okay too and here is another option! You can donate your CPU power instead to help us mine crypto coins, anonymously! This is a new initiative we are trialing. We have set up a P2Pool at: https://donate.reddthat.com. More information about joining the mining pool can be found there. The general idea is: download a mining program, enter our pool details, start mining, and when our pool finds a "block", we'll get paid.

I've been testing this myself over the past month as a "side hustle" on some laptops. Over the past 30 days I managed to make $5, which is not terrible if we can scale it out. If it doesn't take off, that's fine too!

I understand some people will be hesitant for any of the many reasons that exist against crypto, but any option to help us pay our server bills and allow people to donate in an anonymous way is always a boon for me.

Conclusion

These Lemmy bugs have been causing a headache for me in diagnosing and finding solutions. With the upcoming 0.19.6 release I hope that we can put this behind us and Reddthat will be better for it.

Again, thank you all for sticking around in our times of instabilities, posting, commenting and engaging in thoughtful communications.

You are all amazing.

Cheers,

Tiff

It's our Birthday! 🎂

It's been a wild ride over the past year. I still remember hearing about a federated platform that could be a user driven version of Reddit.

And how we have grown!

Thank you to everyone, old and new, who has had an account here. I know we've had our ups and downs, slow servers, botched migrations, and finding out that just because we are on the other side of the world Lemmy can't handle it, but we are still here and making it work!

If I could go back and make the choice again, honestly, I'd probably make the same choice. While it has been hectic, it has been enjoyable to the n'th degree.

I've made friends and learnt a lot. Not just on a technical level but a fair amount on a personal level too.

We have successfully made a community of over 300 people who regularly use Reddthat as their entryway into the Lemmyverse. Those numbers are real people, making real conversations.

Here's to another amazing year!

Tiff

PS: I'm still waiting for that first crypto donation 😜

PPS: Would anyone like a Hoodie with some sort of Reddthat logo/design? I know I would.

April is here and we're still enjoying our little corner of the lemmy-verse! This post is quite late for our April announcement as my chocolate coma has only now subsided

Donations

Due to some huge donations recently on our Ko-Fi we decided to migrate our database from our previous big server to a dedicated database server. The idea behind this move was to allow our database to respond faster with a faster CPU. It was a 3.5GHz CPU rather than the 3.0GHz one we had. This, as we know, did not pan out as we expected. After that fell through we migrated everything to 1 huge VPS in a completely different hosting company (OVH).

Since the last update I used the donations towards setting up an EU proxy to filter out down votes & spam as a way to try and respond faster to allow us to catch up. We've purchased the new VPS from OVH (which came out of the Ko-Fi money), & did the test of the separate database server in our previous hosting company.

Our Donations as of (7th of April):

- Ko-Fi: $280.00

- OpenCollective: $691.55 (of that 54.86 is pending payment)

Threads Federation

Straight off the bat, I'd like to say thank you to those who voiced their opinions on the Threads federation post. While we had more people who were opposed to federation and have since deleted their accounts or moved communities because of the uncertainty, I left the thread pinned for over a week as I wanted to make sure that everyone could respond and we could reach a general consensus. Many people brought great points forward, and we have decided to block Threads. The reasoning behind blocking them boils down to:

- Enforced one-way communication, allowing threads users to post in our communities without being able to respond to comments

- Known lack of Moderation which would allow for abuse

These two factors alone make it a simple decision on my part. If they allowed for comments on the post to make it back to a Threads user then I probably would not explicitly block them. We are an open-first instance and I still stand by that. But when you have a history of abusive users, a lack of moderation, and actively ensure your users cannot conduct a conversation, which by definition would be 2 way, that is enough to tip the scales in my book.

Decision: We will block Threads.net starting immediately.

Overview of what we've been tackling over the past 4 weeks

In the past month we've:

- Re-configured our database to ensure it could accept activities as fast as possible (twice!)

- Attempted to move all lemmy apps to a separate server to allow the database to have full use of our resources

- Purchased an absurd amount of cpus for a month to give everything a lot of cpu to play with

- Setup a haproxy with a lua-script in Amsterdam to filter out all 'bad' requests

- Worked with the LW Infra team to help test the Amsterdam proxy

- Rebuilt custom docker containers for added logging

- Optimised our nginx proxies

- Investigated the relationship between network latency and response times

- Figured out the maximum 3r/s rate of activities and notified the Lemmy Admin matrix channel, created bug reports etc.

- Migrated our 3 servers from one hosting company to 1 big server at another company this post

This has been a wild ride and I want to say thanks to everyone who's stuck with us, reached out to think of ideas, or send me a donation with a beautiful message.

The 500 mile problem (Why it's happening for LemmyWorld & Reddthat)

There are a few causes of this and why it affects Reddthat and a very small number of other instances, but the main one is network latency. The latency between Australia (where Reddthat is hosted) and Europe/Americas is approximately 200-300ms. That means the current 'maximum' number of requests that a Lemmy instance can generate is approximately 3 requests per second. This assumes a few things such as responding instantly but is a good guideline.

Fortunately for the lemmy-verse, most of the instances that everyone can join are centralised to the EU/US areas which have very low latency. The network latency between Paris and Amsterdam is about 10ms. This means any instances that exist in close proximity can have an order of magnitude more activities generated before they lag behind. It goes from 3r/s to 100r/s.

- Servers in EU<->EU can generate between 50-100r/s without lagging

- Servers in EU<->US can generate between 10-12r/s without lagging

- Servers in EU<->AU can generate between 2-3r/s without lagging

Already we have a practical maximum of 100r/s that an instance can generate before everyone on planet earth lags behind.
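As a rough sketch of where those numbers come from: if each activity has to wait for the previous one to be acknowledged, the ceiling is simply one activity per round trip. The function name and the 90ms EU<->US figure below are my own assumptions for illustration; the 10ms and 300ms figures are the ones quoted above.

```python
def max_sequential_rate(round_trip_ms: float, processing_ms: float = 0.0) -> float:
    """Upper bound on activities per second when every activity must be
    acknowledged before the next one is sent (strictly sequential sending)."""
    return 1000.0 / (round_trip_ms + processing_ms)


# Round-trip times in milliseconds. 10 and 300 come from the post,
# 90 for EU<->US is an assumed value chosen for illustration only.
routes = {"EU<->EU": 10, "EU<->US": 90, "EU<->AU": 300}

for name, rtt in routes.items():
    print(f"{name}: ~{max_sequential_rate(rtt):.1f} r/s")
```

Any processing time on the receiving side is added to the round trip, so the real ceiling is always lower than this.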

Currently (as of writing) Lemmy needs to process every activity sequentially. This ensures consistency between votes, edits, comments, etc. Allowing activities to be received out-of-order brings a huge amount of complexity which everyone is trying to solve to allow for all of us (Reddthat et al.) to no longer start lagging. There is a huge discussion on the git issue tracker of Lemmy if you wish to see how it is progressing.

As I said previously this assumes we respond in near real-time with no processing time. In reality, no one does, and there are a heap of reasons for that. The biggest culprit of blocking in the activity threads I have found (and I could be wrong) is/was the metadata enrichment when new posts are added. So you get a nice Title, Subtitle and an image for all the links you add. Recent logs show it blocks adding post activities for anywhere between 1s and 10+ seconds! Since 0.19.4-beta.2 (which we are running as of this post) this no longer happens so for all new posts we will no longer have a 5-10s wait time. You might not have an image displayed immediately when a Link is submitted, but it will still be enriched within a few seconds. Unfortunately this is only 1 piece of the puzzle and does not solve the issue. Out of the previous 24 hours ~90% of all received activities are related to votes. Posts are in the single percentage, a rounding error.

This heading is in reference to the 500 miles email.

Requests here mean Lemmy "Activities", which are likes, posts, edits, comments, etc.

So ... are we okay now?

It is a boring answer but we wait and enjoy what the rest of the fediverse has to offer. This (now) only affects votes between LemmyWorld to Reddthat. All communities on Reddthat are successfully federating to all external parties so your comments and votes are being seen throughout the fediverse. There are still plenty of users in the fediverse who enjoy the content we create, who interact with us and are pleasant human beings. This only affects votes because of our forcing federation crawler which automatically syncs all LW posts and comments. We've been "up-to-date" for over 2 weeks now.

It is unfortunate that we are the ones to be the most affected. It's always a lot more fun being on the outside looking in, thinking about what they are dealing with and theorising solutions. Knowing my fellow Lemmy.nz and aussie.zone were affected by these issues really cemented the network latency issue and was a brilliant light bulb moment. I've had some hard nights recently trying to manage my life and looking into the problems that are affecting Reddthat. If I was dealing with these issues in isolation I'm not sure I would have come to these conclusions, so thank you to our amazing Admin Team!

New versions means new features (Local Communities & Videos)

As we've updated to a beta version of 0.19.4 to get the metadata patches, we've already found bugs in Lemmy (or regressions) and you will notice if you use Jerboa as a client. Unfortunately, rolling back isn't advisable and as such we'll try and get the issues resolved so Jerboa can work.

We now have the ability to change and create any community to be "Local Only".

With the migration comes support for Video uploads, limited to under 20MB and 10000 frames (~6 minutes)! I suggest if you want to share video links to tag them with [Video] as it seems some clients don't always show videos correctly.

Thoughts

Everyday I strive to learn about new things, and it has certainly been a learning experience! I started Reddthat with knowing enough of alternate technologies, but nearly nothing of rust nor postgres. 😅

We've found what is possibly a crucial bug in the foundation of Lemmy which hinders federation, found workarounds, and found that not all VPS providers are the same. I explained the issues in the hosting migration post. I also learnt a lot about postgres and tested the postgres v15 to v16 upgrade process so everyone who uses the lemmy-ansible repository can benefit.

I'm looking forward to a relaxing April compared to the hectic March but I foresee some issues relating to the 0.19.4 release, which was meant to be released in the next week or so. 🤷

Cheers,

Tiff

PS. Since Lemmy version 0.19 you can block an instance yourself without requiring us to defederate via going to your Profile, clicking Blocks, and entering in the instance you wish to be blocked.

Fun Graphs:

Instance Response Times:

Data Transfers:

Basically, I'm sick of these network problems, and I'm sure you are too. We'll be migrating everything: pictrs, frontends & backends, database & webservers all to 1 single server in OVH.

First it was a cpu issue, so we work around that by ensuring pictrs is on another server, and have just enough CPU to keep us all okay. Everything was fine until the spammers attacked. Then we couldn't process the activities fast enough, and now we can't catch up.

We are having constant network drop outs/lag spikes where all the networking connections get "pooled" with a CPU steal of 15%. So we bought more vCPU and threw resources at the problem. Problem temporarily fixed, but we still had our "NVMe" VPS, which housed our database and lemmy applications, showing an IOWait of 10-20% half the time. Unbeknown to me, it was not IO related, but network related.

So we moved the database server off to another server, but unfortunately that caused another issue (the unintended side effects, of cheap hosting?). Now we have 1 main server accepting all network traffic, which then has to contact the NVMe DB server and pict-rs server as well. Then send all that information back to the users. This was part of the network problem.

Adding backend & frontend lemmy containers to the pict-rs server helped alleviate this and is what you are seeing at the time of this post. Now a good 50% of the required database and web traffic is split across two servers, which keeps our servers from being completely saturated with requests.

On top of the recent nonsense, it looks like we are limited to 100Mb/s, that's roughly 12MB/s. So downloading a 20MB video via pictrs would require the current flow: (in this example)

- User requests image via cloudflare

- (it's not already cached so we request it from our servers)

- Cloudflare proxies the request to our server (app1).

- Our app1 server connects to the pictrs server.

- Our app1 server downloads the file from pictrs at a maximum of 100Mb/s,

- At the same time, the app1 server is uploading the file via cloudflare to you at a maximum of 100Mb/s.

- During this point in time our connection is completely saturated and no other network queries could be handled.

This is of course an example of the network issue I found out we had after moving to the multi-server system. This is of course not a problem when you have everything on one beefy server.
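To put a number on the example above, here is a minimal sketch of the conversion and the time one 20MB file keeps the link busy. It assumes the whole 100Mb/s is available to that one transfer and ignores protocol overhead; the function names are made up for illustration.

```python
def mbit_to_mbyte_per_s(link_mbit: float) -> float:
    """Convert a link speed in megabits/s to megabytes/s (8 bits per byte)."""
    return link_mbit / 8


def transfer_seconds(size_mbyte: float, link_mbit: float) -> float:
    """Best-case time to move a file of size_mbyte over the link."""
    return size_mbyte / mbit_to_mbyte_per_s(link_mbit)


print(mbit_to_mbyte_per_s(100))   # 12.5 MB/s, the "roughly 12MB/s" above
print(transfer_seconds(20, 100))  # seconds the link is saturated by one 20MB video
```

For that whole window app1 is both downloading from pictrs and uploading to Cloudflare at the link's limit, which is why other requests stall.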

Those are the broad strokes of the problems.

Thus we are completely ripping everything out and migrating to a HUGE OVH box. I say huge in capital letters because the OVH server is $108/m and has 8 vCPU, 32GB RAM, & 160GB of NVMe. This amount of RAM allows for the whole database to fit into memory. If this doesn't help then I'd be at a loss as to what will.

Currently (assuming we kept paying for the standalone postgres server) our monthly costs would have been around $90/m. ($60/m (main) + $9/m (pictrs) + $22/m (db))

Migration plan:

The biggest downtime will be the database migration as to ensure consistency we need to take it offline. Which is just simpler than

DB:

- stop everything

- start postgres

- take a backup (20-25 mins)

- send that backup to the new server (5-6 mins, limited to 12MB/s)

- restore (10-15 mins)

pictrs

- syncing the file store across to the new server

app(s)

- regular deployment

Which is the same process I recently did here so I have the steps already cemented in my brain. As you can see, taking a backup ends up taking longer than restoring. That's because, after testing the restore process on our OVH box, we were nowhere near any IO/CPU limits and it was, to my amazement, seriously fast. Now we'll have heaps of room to grow with a stable donation goal for the next 12 months.
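Adding up the database steps in the plan above gives a feel for the expected downtime window. This is only a sketch using the estimates listed (backup, transfer, restore); it ignores the pictrs sync and the app deployment, and the implied dump size is derived from the 12MB/s limit rather than measured.

```python
# (min, max) estimates in minutes, taken from the DB steps listed above.
db_steps = {
    "backup": (20, 25),
    "transfer": (5, 6),
    "restore": (10, 15),
}


def downtime_window(steps: dict) -> tuple:
    """Sum the best-case and worst-case minutes across all steps."""
    best = sum(low for low, _ in steps.values())
    worst = sum(high for _, high in steps.values())
    return best, worst


def implied_size_mb(minutes: float, rate_mb_per_s: float = 12) -> float:
    """Size of a file that takes `minutes` to send at `rate_mb_per_s`."""
    return minutes * 60 * rate_mb_per_s


print(downtime_window(db_steps))               # (35, 46) minutes
print(implied_size_mb(5), implied_size_mb(6))  # rough backup size range in MB
```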

See you on the other side.

Tiff

In the past 24-48 hours we've done a lot of work as you may have noticed, but you might be wondering why there are posts and comments with 0 upvotes.

Normally this would be because it is from a mastodon-like fediverse instance, but for now it's because of us and is on purpose.

Lemmy has an API where we can tell it to resolve content. This forces one of the most expensive parts of federation (for reddthat) , the resolving of post metadata, to no longer be blocking. So when new posts get federated we can answer quicker saying "we already know about that, tell us something we don't know"!
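For anyone curious what "telling it to resolve content" can look like, here is a minimal sketch that only builds the request without sending it. I am assuming the v3 `resolve_object` endpoint with a `q` parameter and a bearer token, so check the API documentation for your Lemmy version; the host name, token and post URL are placeholders, and this is not the actual crawler we run.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_resolve_request(instance: str, object_url: str, jwt: str) -> Request:
    """Build (but do not send) a GET request asking `instance` to resolve
    a remote post or comment by its URL.

    Assumption: the endpoint is /api/v3/resolve_object and auth is a
    bearer token, as in Lemmy 0.19.
    """
    query = urlencode({"q": object_url})
    return Request(
        f"{instance}/api/v3/resolve_object?{query}",
        headers={"Authorization": f"Bearer {jwt}"},
        method="GET",
    )


# Placeholder values for illustration only.
req = build_resolve_request(
    "https://reddthat.example", "https://lemmy.world/post/1", "TOKEN"
)
print(req.full_url)
```

Sending it would be a matter of passing `req` to `urllib.request.urlopen`, one call per post or comment you want fetched ahead of the federation queue.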

This makes the current activity graphs not tell the whole story anymore as we are going out-of-band. So while the graphs say we are behind, in reality we are closer than ever (for posts and comments).

Shout out to the amazing @example@reddthat.com who thought up this crazy idea.

Tldr, new posts are coming in hot and fast, and we are up-to-date with LW now for both posts and comments!

There was a period of an hour where we migrated our database to its own server in the same data centre.

This server has faster CPUs than our current one, 3.5GHz v 3.0GHz, and if all goes well we will migrate the extra CPUs we bought to the DB server.

The outage was prolonged due to a PEBKAC issue. I mistyped an IP in our new postgres authentication and as such I kept getting a "permission error".

Migrating the DB to its own server was always going to happen but was pushed forward with the recent donations we got from Guest on our Ko-Fi page! Thank-you again!

If you see any issues relating to ungodly load times please feel free to let us know!

Cheers,

Tiff

Our server rebooted and unfortunately I had not saved our new firewall rules. This blocked all Lemmy services from accessing our databases.

This has now been fixed up! Sorry to everyone for the outage!

Edited: this post to be the Lemmy.World federation issue post.

We are now ready to upgrade to postgres 16. When this post is 30 mins old the maintenance will start.

Recently beehaw has been hung out to dry with Open Collective dissolving their Foundation which was their hosted collective. Basically, the company that was holding onto all of beehaw's money, will no longer accept donations, and will close. You can read more in their alarmingly titled post: EMERGENCY ANNOUNCEMENT: Open Collective Foundation is dissolving, and Beehaw needs your help

While this does not affect us, it certainly could have been us. I hope the Beehaw admins are doing okay and manage to get their money back. From what I've seen it should be easy to zero-out your collective, but "closure" and "no longer taking donations" make for a stressful time.

Again, Reddthat isn't affected by this but it does point out that we are currently at the mercy of one company (person?) winning the Powerball or just running away with the donations.

Fees

Upon investigating our financials we are actually getting stung on fees quite often. Forgive me if I go on a rant here, skip below for the donation links if you don't want to read about the ins and outs. OpenCollective uses Stripe, which takes 3% + $0.30 per transaction. That's pretty much an industry standard. 3% + 0.30. Occasionally it's 3% + 0.25 depending on the payment provider Stripe uses internally. What I didn't realise is that Open Collective also pre-fills a platform tip on the donation form. This defaults to 15.00%. So in reality when I have set the donation to be $10, you end up paying $11.50. $1.50 goes to Open Collective, and then Stripe takes $0.48 in transaction fees. Then, when I get reimbursed for the server payments, Stripe takes another payment fee, because our Hosted Collective also has to pay a transfer fee. (Between you and me, I'm not sure why that is when we are both in Australia... and inter-bank transactions are free). So of that $11.50 out of your pocket, $1.50 goes to Open Collective, Stripe takes $0.48, then at the end of the month I lose $0.56 per expense! We have 11 donators and 3 expenses per month, which works out to be another $0.15 per donator. So at the end of the day, that $11.50 becomes $9.37. A total difference of $2.13 per donator per month.

As I was being completely transparent I broke these down to 3 different transactions. The Server, the extra ram, and our object storage. Clearly I can save $1.12/m by bundling all the transactions, but that is not ideal. In the past 3 months we have paid $26.83 in payment transaction fees.
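The fee breakdown above can be reproduced with a few lines. This sketch plugs in the figures exactly as quoted in this post (it does not recompute Stripe's percentage), and the variable names are just for illustration.

```python
# All amounts are the ones quoted in this post.
paid_by_donor = 11.50      # $10 donation plus the pre-filled 15% tip
platform_tip = 1.50        # goes to Open Collective
stripe_fee = 0.48          # transaction fee on the donation
expense_fee = 0.56         # lost per reimbursed expense
expenses_per_month = 3
donors = 11

# Each donor's share of the monthly expense fees.
expense_share = expense_fee * expenses_per_month / donors

received = paid_by_donor - platform_tip - stripe_fee - expense_share
lost = paid_by_donor - received

# Bundling 3 expenses into 1 avoids paying the expense fee twice.
bundling_saving = expense_fee * (expenses_per_month - 1)

print(round(expense_share, 2))    # 0.15
print(round(received, 2))         # 9.37
print(round(lost, 2))             # 2.13
print(round(bundling_saving, 2))  # 1.12
```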

After learning this information, anyone who has recurring donations set up should check if they would like to continue giving 15% to Open Collective or not, or pass that extra 15% straight to Reddthat instead!

So to help with this outcome we are going to start diversifying and promoting alternatives to Open Collective as well as attempting to publish, maybe a specific page which I can update like /donate. But for the moment we will update our sidebar and main funding page.

Donation Links

From now on we will be using the following services.

- Ko-Fi: https://ko-fi.com/reddthat

- (best for once off donations)

- 0% on once-off donations

- 5% on all recurring (Unless I pay them $8/month for 0% fee)

- Open Collective: https://opencollective.com/reddthat

- (best for recurring donations)

- Stripe 3% + $0.3 (+ $0.58 Expense fee/month)

- Crypto:

- XMR Directly:

4286pC9NxdyTo5Y3Z9Yr9NN1PoRUE3aXnBMW5TeKuSzPHh6eYB6HTZk7W9wmAfPqJR1j684WNRkcHiP9TwhvMoBrUD9PSt3 - BTC Directly:

bc1q8md5gfdr55rn9zh3a30n6vtlktxgg5w4stvvas

I'm looking into Liberapay as well but it looks like I will need to set up Stripe myself. If I can do that in a safe way (for me and you) then I'll add that too.

Next Steps

From now on I'll be bundling all expenses for the month into one "Expense" on Open Collective to minimise fees as that is where most of the current funding is. I'll also do my best to do a quarterly budget report with expenditures and a sum of any OpenCollective/Kofi/Crypto/etc we have.

Thank you all for sticking around!

Tiff

Just got the server back up into a working state, still investigating why it decided to kill itself.

Unfortunately dealing with $job all day today with things randomly breaking like this. I'm guessing leap year shenanigans, but have no way in knowing that yet.

Just wanted to let you all know it's back up!

Unfortunately we had a Valentine's Day outage of around 2 hours.

Incident Timeline: (times in UTC)

04:39 - Our monitoring sees a 50x error.

04:41 - I am alerted via email & phone.

04:48 - I acknowledge the incident and start investigating

04:50 - I cannot access the VM via SSH. I issue a reboot via our control panel.

04:54 - Our server has a load of 12 and 57% of all IO operations are IOWait.

05:30 - I issue another reboot and can't seem to figure out what's wrong

05:58 - I lodge a ticket with our provider to check the host, and to power off and on again as we still have huge IOWait values, and 100% Memory usage.

06:30 - hosting company hasn't got back to me and I start investigating by rolling back the latest configuration changes I've done & reboot.

06:35 - sites are back online.

Resolution

Latest change included turning on huge pages with a value of 100MB to allow postgres to get some performance gains.

This change was done on Monday morning and I had planned to do a power cycle this week to confirm everything was on the up-and-up. Turns out my host did that for me.

The outage lasted longer than it should have due to some $job and $life.

Until next time,

Cheers,

Tiff

Happy leap year! February comes with a fun 29th day? What will we do with the extra 24 hours? Sorry I missed the January update but I was too busy living the dream in cyberspace.

Community Funding

As always, I'd like to thank our dedicated community members who keep the lights on. Unfortunately our backup script went a little haywire over the last couple of months and it resulted in a huge bill for our object storage! This bug has been fixed and new billing alerts have been set up to ensure this doesn't happen again.

If you are new to Reddthat and have not seen our OpenCollective page it is available over here. All transactions and expenses are completely transparent. If you donate and leave your name (which is completely optional) you'll eventually find your way into our main funding post over here!

Upcoming Postgres Upgrade

In the admin matrix channels there has been lots of talk about database optimisations recently as well as the issues relating to out of memory (OOM) errors. These OOM issues are mostly in regards to memory "leaks" and are what was plaguing Reddthat on a weekly basis. The good news is that other instance admins have confirmed that Postgres 16 (we are currently on Postgres 15) fixes those leaks and performs better all around!

We will be planning to upgrade Postgres from 15 to 16 later on this month (February). I'm tentatively looking at performing it during the week of the 18th to 24th. This will mean that Reddthat will be down for the period of the maintenance. I expect this to take around 30 minutes, but further testing on our development machines will produce a more concrete number.

This "forced" upgrade comes at a good time. As you may or may not be aware by our uptime monitoring we have been having occasional outages. This is because of our postgres server. When we do a deploy and make a change, the postgres container does not shutdown cleanly. So when it restarts it has to perform a recovery to ensure data consistency. This recovery process normally requires about 2-3 minutes where you will see an unfortunate error page.

This has been a sore spot with me as it is most likely an implementation failure on my part and I curse to myself whenever postgres decides to restart for whatever reason, even though it should not restart because I made a change on a separate service. I feel like I am letting us down and want to do better! These issues lead us into the next section nicely.

Upcoming (February/March) "Dedicated" Servers

I've been playing with the concept of separating our services for a while now. We now have Pictrs being served on a different server but still have lemmy, the frontends and the database all on our big single server. This single server setup has served us nicely and would continue to do so for quite some time but with the recent changes to lemmy adding more pressure onto the database we need to find a solution before it becomes an even bigger problem.

The forced postgres upgrade gives us the chance to make this decision and optimise our servers to support everyone and give a better experience on a whole.

Most likely starting next month (March) we will have two smaller front-end servers, which will contain the lemmy applications, a smallish pictrs server, and a bigger backend server to power the growing database. At least that is the current plan. Further testing may make us re-evaluate but I do not foresee any reason we would change the over-arching aspects.

Lemmy v0.19 & Pictrs v0.5 features

We've made it through the changes to v0.19.x and I'd like to say even with the unfortunate downtimes relating to Pictrs' temp data, we came through with minor downtimes and (for me) a better appreciation of the database and its structure.

Hopefully everyone has been enjoying the new features. If you use the default web-ui, you should also be able to upload videos, but I would advise against it. There is still the limit of the 10 second request regarding uploads, so unless your video is small and short it won't complete in-time.

Closing

It has been challenging over the past few months and especially with communities not as active as they once were. It almost seems that our little instance is being forgotten about and other instances have completely blown up! But it is concerning that without 1 or 2 people our communities dry up.

As an attempt to breathe life into our communities, I've started a little Community Spotlight initiative, where every 1-2 weeks I'll be pinning a community that you should go and check out!

The friendly atmosphere and communities are what make me want to continue doing what I do in my spare time. I'm glad people have found a home here, even if they might lurk, and I'll continue trying to make Reddthat better!

Cheers,

Tiff

On behalf of the Reddthat Admin Team.

We've finally updated to 0.19 after some serious wrangling with the database migrations.

You need to re-login on your apps, & reset 2FA if you had it

Basically it went super smooth on dev, (because the dev db was super-ish small). Turned out the regular pruning of the activities (sent/received) were not happening on production, so the database had grown to over 90GB!

It's finally sorted itself and everything is right with the world.

🚀

Cool New Features:

- Instance blocks, for Users!

- Import/Export Settings!

- a 60 minute downtime 😜

- "working" 2FA

The complete release notes are here: https://join-lemmy.org/news/2023-12-15_-_Lemmy_Release_v0.19.0_-_Instance_blocking,_Scaled_sort,_and_Federation_Queue

Cheers,

Tiff

Edit 1.

There was/is a known bug relating to outgoing federation (https://github.com/LemmyNet/lemmy/issues/4288) which we are hoping to be fixed with 0.19.1-rc1. To ensure we can federate with everyone we have updated to our first "beta" version in production. '-rc1'

Hello Everyone, I would like to wish everyone a happy New Year period where we've decided as a human race to stop working and start enjoying spending copious amounts of money on loved ones, items for ourselves, or just relishing the time to relax and recuperate.

Whatever your desired state of mind during the New Year period, we at Reddthat hope you enjoy it!

Community Funding

First up, I'd like to thank our dedicated community members who keep the lights on. Knowing that together we are creating a little home on the internet pleases me to no end. Thankyou!

A special mention to our first time contributors as well. Welcome to Reddthat: Ashley & Guest!

If you are new to Reddthat and have not seen our OpenCollective page it is available over here. All transactions and expenses are completely transparent. If you donate and leave your name you'll eventually find your way into our main funding post over here

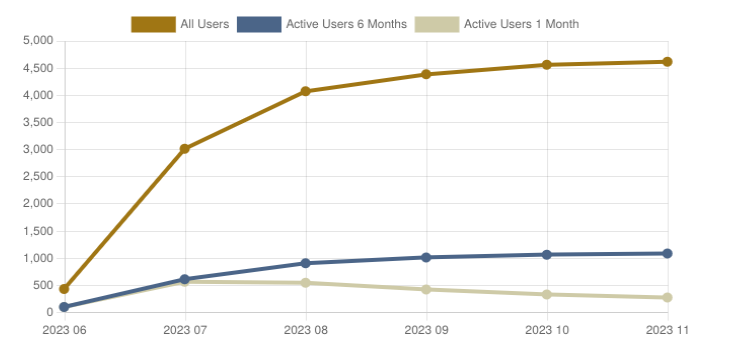

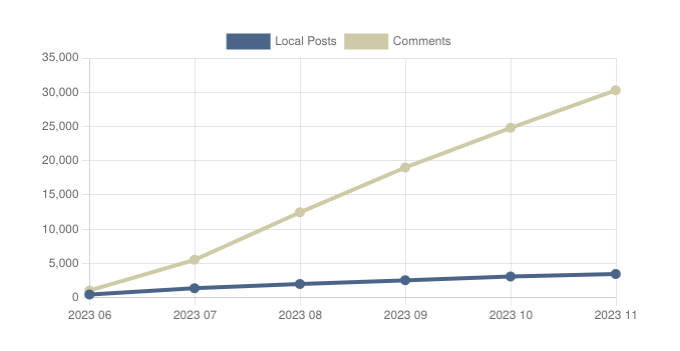

User Statistics

As some of you might know, the Lemmy-verse is not as populated as it once was back when people were thinking of a mass-exodus. But do not be dismayed! These graphs show the dedication of our userbase rather than a dying platform.

These lines are replicated across all of the biggest instances not just our own. If you are interested checkout Fediverse Observer who I borrowed these graphs from. Thank-you!

I'm proud to know that over 250 people are using the services we provide & I hope you are enjoying it too!

A rise of close to 5000 comments MoM goes to show that we have a healthy amount of users in the fediverse who are actively interacting and communicating.

Users

Posts/Comments

Server Stability (Maintenance Windows) & Future Plans

The server has been rock solid the past month(s) since the last update, except for 2 unscheduled outages, one of which was when our hosting provider restarted the underlying host. This was during their weekly maintenance window so I can't be completely mad at them and was due to them finishing migrating everything between the old host we were on.

For everyone's information, the maintenance windows are: (UTC)

- Friday nights -- 22:00 to 01:00

- Tuesday afternoons -- 12:00 to 16:00

The majority of the time you will never see downtime during these periods but if you do happen to see high load times or error pages, that will be the cause. Due to the nature of this, I also perform maintenance during these periods such as system updates.

Dedicated server?

Our eventual plan was to always purchase a dedicated server with a beefy CPU & NVMe drive to enable us to do 3 things:

- Provide fast database queries to reduce latency and give a snappy experience

- On-demand support for encoding videos to allow you to post video memes

- Allow for growth to over 10k active users per month

Black Friday/Cyber Monday notwithstanding, dedicated servers cost on average A$150-200/m. You can get better deals with other providers but for budgeting purposes it is a good range.

With the bots leaving and some people deciding federated platforms were not right for them, the decisions are answered for us. While it would be good to provide a slightly faster experience, or be able to withstand over 10k active users per day, we are not there yet.

Our current solutions have growing room for the foreseeable future and until we outgrow our server it seems prudent not to willingly waste money.

We have always been community funded and the trust that you have given us to make the best call for our future is something that I will not squander.

Lemmy v0.19 & Pictrs v0.5 features (coming soon)

The new version of Lemmy is getting closer and closer to being ready for fediverse-wide adoption for everyone to use (as in next week!). As previously stated in our October update there will be teething issues for those with 2FA but we are here for you.

Pictrs (the app which processes all the pictures/memes/gifs/videos) is due to release 0.5 soon as well, which brings with it new database support, specifically Postgres. This is the same database which Lemmy runs on, which means all Lemmy server admins will be able to run multiple Pictrs instances to provide faster images, handle greater concurrent uploads, and, if all goes well, do less transcoding of supported videos.

Once the Lemmy and Pictrs versions come out we will be performing the maintenance required to update during the maintenance periods listed above. If everything goes well with lemmy.ml's rollout (see here) we'll schedule a maintenance period. (I'm eyeing off the 8th of December currently)

Closing

Reddthat is constantly seeing new members sign up through our new application process. Since implementing it, applications have gone from an abysmal 40% success rate to over 90%.

Welcome to all these new members and maybe today will be your first post or comment!

Cheers,

Tiff

On behalf of the Reddthat Admin Team.

Hello Reddthat,

Another month has flown by. It is now "Spooky Month". That time of year where you can buy copious amounts of chocolate and not get eyed off by the checkout clerks...

Lots has been happening at Reddthat over this last month. The biggest one being the new admin team!

New Administrators

Since our last update we have welcomed 4 new Administrators to our team! You might have seen them around answering questions, or posting their regular content. Mostly we have been working behind the scenes moderating, planning and streamlining a few things that 1 person cannot do all on their own.

I'd like to say: Thank you so much for helping us all out and hope you will continue contributing your time to Reddthat.

Kbin federated issues

Most of our newly appointed admins' time has been taken up with moderating our federated space rather than the content on Reddthat! As discussed in our main post (over here) https://reddthat.com/post/6334756 , we have chosen to remove specific kbin communities where the majority of spam was coming from. This was a tough decision but an unfortunate and necessary one.

We hope that it has not caused an issue for anyone. In the future once the relevant code has been updated and kbin federates their moderation actions, we will gladly 'unblock' those communities.

Funding Update & Future Server(s)

We are currently trucking along with 2 new recurring donators in our September month, bringing us to 34 unique donators! I have updated our donation and funding post with the names of our recurring donators.

The upcoming changes to Lemmy and Pictrs will give us the opportunity to investigate being highly available, where we run 2 sets of everything on 2 separate servers, or to scale out our picture services to allow for bigger pictures and faster upload response times. Big times ahead regardless.

Upcoming Lemmy v0.19 Breaking Changes

A couple of serious changes are coming in v0.19 that people need to be aware of: the 2FA reset, and instance-level blocking per user.

All 2FA will be effectively nuked

This is a Lemmy-specific issue: two-factor authentication (2FA) has been inconsistent for a while. The reset will allow people who have 2FA enabled and cannot get into their account to log in again. Unfortunately, if you are out there successfully using 2FA, you will also need to go through the setup process again.

Instance blocks for users.

YaY!

No longer do you have to wait for the admins to defederate. You will now be able to block a whole instance from appearing in your feeds and comment threads. If you don't like a specific instance, you will be able to "defederate" yourself!

How this will work on the client side (via the web or applications) I am not too certain, but it is coming!

Thankyou

Thank you again to all our amazing users and I hope you keep enjoying your Lemmy experiences!

Cheers

Tiff,

On behalf of the Reddthat Admin Team.

Hello Reddthat,

Similar to other Lemmy instances, we're facing significant amounts of spam originating from kbin.social users, mostly in kbin.social communities, or as kbin calls them, magazines.

Unfortunately, there are currently significant issues with the moderation of this spam.

While removal of spam in communities on other Lemmy instances (usually) federates to us and cleans it up, removal of spam in kbin magazines, such as those on kbin.social, is not currently properly federated to Lemmy instances.

In the last couple days, we've received an increased number of reports of spam in kbin.social magazines, of which a good chunk had already been removed on kbin.social, but these removals never federated to us.

While these reports are typically handled in a timely manner by our Reddthat Admin Team, as reports are also sent to the reporter's instance admins, we've done a more in-depth review of content in these kbin.social magazines.

Just today, we've banned and removed content from more than 50 kbin.social users, who had posted spam to kbin.social magazines within the last month.

Several other larger Lemmy instances, such as lemmy.world, lemmy.zip, and programming.dev have already decided to remove selected kbin.social magazines from their instances to deal with this.

As we also don't want to exclude interactions with other kbin users, we decided to only remove selected kbin.social magazines from Reddthat, with the intention to restore them once federation works properly.

By only removing communities with elevated spam volumes, this will not affect interactions between Lemmy users and kbin users outside of kbin magazines. kbin users are still able to participate in Reddthat and other Lemmy communities.

For now, the following kbin magazines have been removed from Reddthat:

- [!random@kbin.social](/c/random@kbin.social)

- [!internet@kbin.social](/c/internet@kbin.social)

- [!fediverse@kbin.social](/c/fediverse@kbin.social)

- [!programming@kbin.social](/c/programming@kbin.social)

- [!science@kbin.social](/c/science@kbin.social)

- [!opensource@kbin.social](/c/opensource@kbin.social)

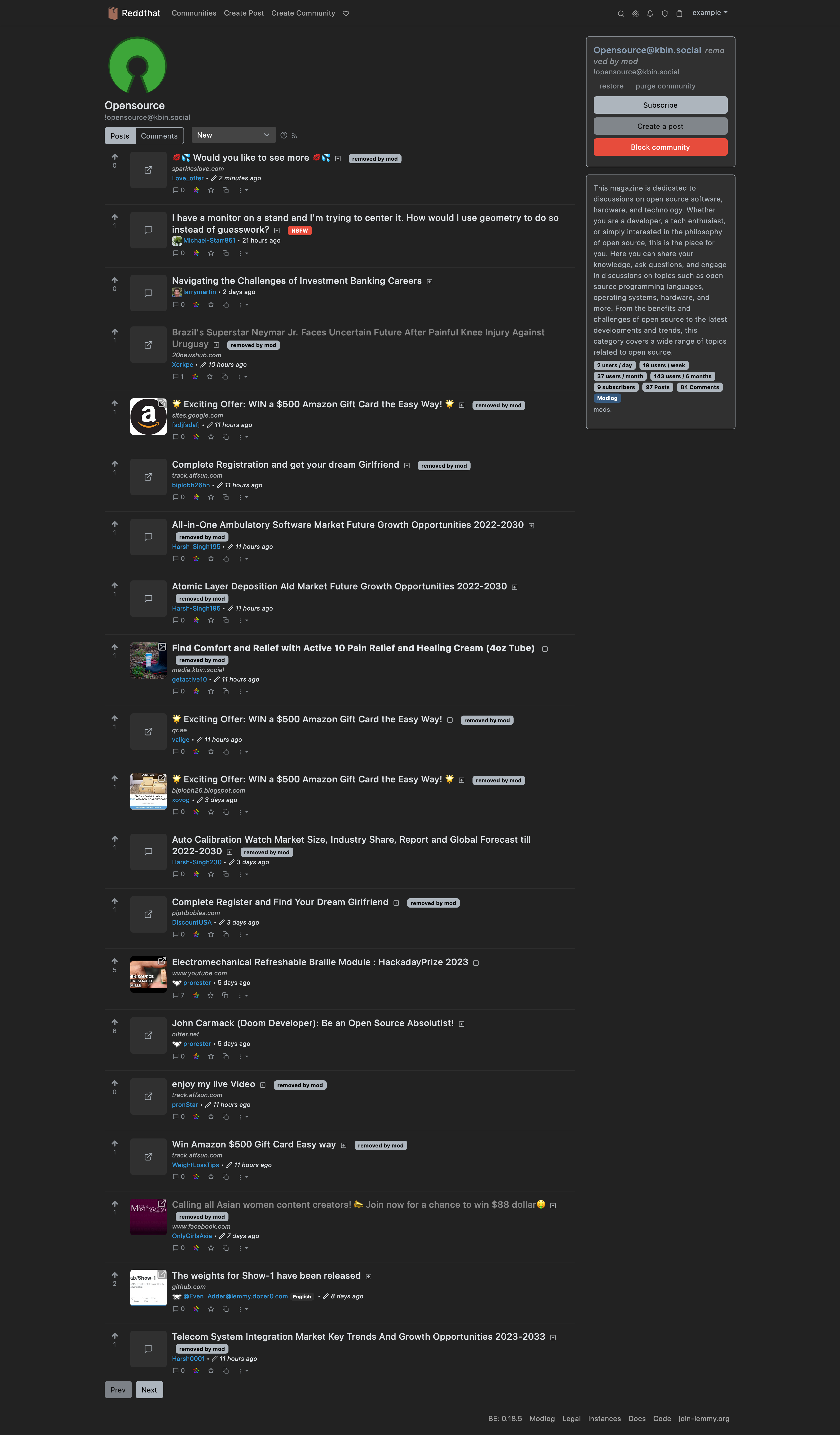

To get an idea of the spam to legitimate content ratio, here are some screenshots of posts sorted by New:

All the "removed by mod" posts mean that the content was removed by Reddthat admins, as the removals on kbin.social did not find their way to us.

If you encounter spam, please keep reporting it, so community mods and we admins can keep Reddthat clean.

If you're interested in the technical parts, you can find the associated kbin issue on Codeberg.

Regards,

example and the Reddthat Admin Team

TLDR

Due to spam and technical issues with the federation of spam removal from kbin, we've decided to remove selected kbin.social magazines (communities) until the situation improves.

Hello Reddthat,

It has been a while since I posted a general update, so here we go!

Slight content warning regarding this post. If you don't want to read depressing things, skip to # On a lighter note.

The week of issues

If you are not aware, the last week(s) or so has been a tough one for me & all other Lemmy admins. With recent attacks of users posting CSAM, a lot of us admins have been feeling a wealth of pressure.

We have had to start getting familiar with the local laws surrounding our instances, while also being completely underwhelmed by the Lemmy admin tools. All of these issues are a first for Lemmy, and the tools do not exist in a way that enables us to respond fast enough or in a way in which content moderation can be effective.

If it happens on another instance it still affects everyone, as it affects every fediverse instance that you federate with.

As this made "Lemmy" news it resulted in Reddthat users asking valid questions on our !community@reddthat.com. I responded with the plan I have if we end up getting targeted for CSAM. At this point in time a user chose violence upon waking up and started to antagonise me for having a plan. This user is a known Lemmy Administrator of another instance. They instigated a GDPR request for their information. While I have no issue with their GDPR instigation, I have an issue with them being antagonising, abusive, and

Alternatively, stop attracting attention you fuckhead; a spineless admin saying “I’m Batman” is exactly how you get people to mess with you

Unfortunately they chose to create multiple accounts to abuse us further. This is not conduct that is conducive to conversation, nor is it conduct fitting of a Lemmy admin. As a result we have now de-federated from the instance under the control of that admin.

To combat bot signups, we have moved to using the registration approval system. This system not only helps us weed out the bots and people who mean to do us harm, but also lets us put the ideals and benefits of Reddthat in front of new users.

This will hopefully help them understand what is and isn't acceptable conduct while they are on Reddthat.

On a lighter note

Stability

Server stability has been fixed by our hosting provider, and a side benefit is that we've been migrated to newer infrastructure. What this does for our vCPU is yet to be determined, but we have confirmed that our status monitoring service (over here) is working perfectly: it is detecting issues and alerting me.

Funding

Donations on a monthly basis are now completely covering our monthly costs! I can't thank you enough for these as it really does help keep the lights on.

In August we had 4 new donators, all recurring as well! Thank you so much!

Merch / Site Redesign

I would like to offer merchandise for those who want a hoody, mouse pad, stickers, etc.

Unfortunately I am a backend person, not a graphics designer. Thus if anyone would like to make some designs please let me know in the comments below. 🧡 An example:

I am thinking we need at least 3 design choices:

- The Logo itself (Sticker/Phone/Magnet)

- Logo with Reddthat text

- Logo with "Where You've Truly 'ReddThat'!"

- Something cool that you would want on a hoody. <- Very important!

I've managed to find some on-demand printing services which will allow you to purchase directly through them and have it shipped directly from a local-ish printing facility.

The goal is to reduce the time it takes you to get your merch, reduce the costs of shipping and hopefully ensure I know as little about you as possible. If all goes to plan, I won't know about your addresses / payments / etc and everyone can have some cool merch!

New Admins

To help combat the spamming issue, the increase of reports and the new approval system I am looking for some new administrators to join the team.

While I do not expect you to do everything I do, an hour or two a day in your respective timezone is probably the minimum. That amounts to opening Reddthat a couple of times a day and checking in to confirm everything is still hunky-dory.

If you would like to apply to be an Admin and help us all out, please PM me with your application, answering the following questions:

Reason for your application:

Timezone:

Time you could allocate a day (estimate):

What you think you could bring to the team:

I am thinking we'll need about 6 admins to have full coverage around the globe and ensure that everyone will not be burnt out after a week! So even if you see a new admin pop up in the sidebar don't worry, there is always room for more!

Parting words

Thank you to those who have reached out and given help. Thank you to the other Lemmy admins who helped me, and thank you everyone on Reddthat for still being here and enjoying our time together.

Cheers,

Tiff

tldr

- We have now defederated from our first instance :(

- Signups now are via Application process

- We had 4 new people join our recurring donations last month! 💛

- Merch is in the works. If you have some design skills please submit your designs in the comments!

- Admin recruitment drive
