this post was submitted on 27 Nov 2024

Firefox

A place to discuss the news and latest developments on the open-source browser Firefox

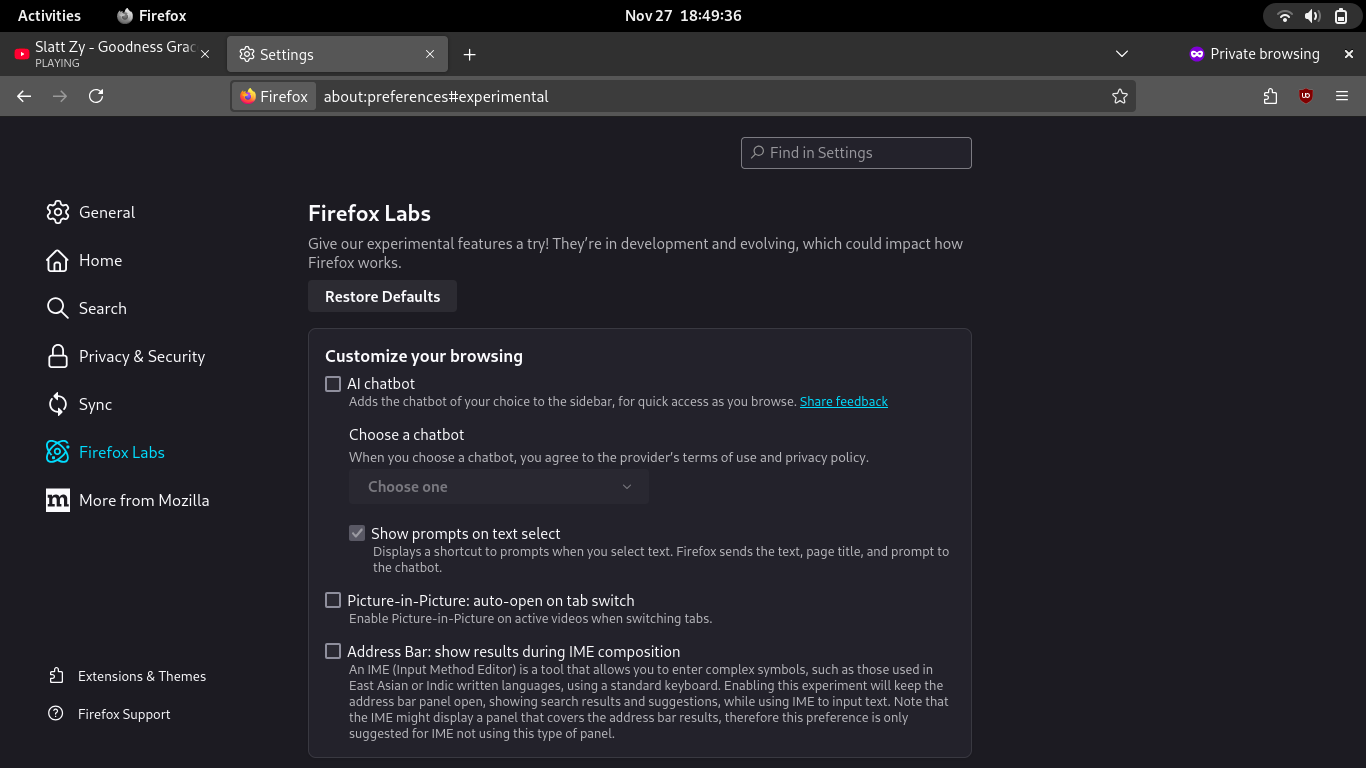

Are any of these open source or trustworthy?

I think Mistral is model-available (i.e., I'm not sure whether they release training data or code, but they do release the model shape and weights). HuggingChat is definitely open source and model-available.

~~Sorry, but HuggingChat / HuggingFace and all models on it are not open source~~ (Edit: Oh, you meant that the HuggingChat UI is open source. Yeah, sorry, I was focused on the models. And from my understanding, there is no open source model.) -> https://opensource.org/ai/open-source-ai-definition Of course opensource.org is not the only authority on what "open source" means, but it's not a bad start.

There are no open source AI models, even if they tell you that they are. HuggingFace is the closest thing to open source: you can download AI models from it and run them locally without an internet connection. There are applications for that. In Firefox, HuggingChat uses models from HuggingFace, but I think it runs them on a server and does not download them?

The reason they are not open source is that we don't know exactly what data they were trained on, so we cannot rebuild them on our own. As for trustworthy, I assume you are talking about the integration and the software using the models, right? At least it is implemented by Mozilla, so there is (to me) some sort of trust involved. Yes, even after all the bullshit, I trust Mozilla.

It's "open weights" if they publish the model file but nothing about how it was created. There are some hypothetical security concerns about a model being trained to give very specific outputs for certain very specific inputs, but I feel like that's one of those far-fetched worries, especially if you want to use it for chat or summarization and the alternative is getting AI output from a server API. Local is still way better.

probably not