this post was submitted on 07 Oct 2023

Technology

And this is why AI detector software is probably impossible.

Just about everything we make computers do is something we're also capable of: slower, yes, and probably less accurately or with some other downside, but we can do it. At the very least, we know how. We can't program software or train neural networks to do something we have no idea how to do.

If this problem is ever solved, it's probably going to require a whole new form of software engineering.

I don't know... My computer can do crazy math like 13+64 and other impossible calculations like that.

What exactly is "this"?

There are things computers can do better than humans, like memorization or precision (or both combined). For everything else, I agree that in theory we could be on par, but in practice it matters a lot that things happen in real time. There is often only a finite window to analyze and react, and if you're slower, it's as good as knowing nothing. Being good at something, or even being able to do it at all, often means doing it in time.

Machine learning does exactly that. We don't know how all these layers and neurons work, and we could not build the network from scratch. We cannot engineer the correct weights directly, but we can approximate them through training.
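To make the idea of reaching weights through training concrete, here is a minimal Python sketch under toy assumptions: a single logistic neuron (the helpers `train` and `predict` are hypothetical names) learns the logical OR function by gradient descent on log-loss. We supply examples of the desired behaviour and a loss; the weights come out of training rather than being designed by hand. Real networks have millions of weights, which is where hand-engineering becomes hopeless, but the principle is the same.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=5000, lr=0.5, seed=0):
    """Fit a single logistic neuron by stochastic gradient descent on log-loss."""
    rng = random.Random(seed)
    w1, w2, b = (rng.uniform(-1.0, 1.0) for _ in range(3))  # random start, not hand-picked
    for _ in range(epochs):
        for (x1, x2), y in data:
            p = sigmoid(w1 * x1 + w2 * x2 + b)
            err = p - y  # derivative of log-loss w.r.t. the neuron's pre-activation
            w1 -= lr * err * x1
            w2 -= lr * err * x2
            b -= lr * err
    return w1, w2, b

def predict(weights, x):
    w1, w2, b = weights
    return 1 if sigmoid(w1 * x[0] + w2 * x[1] + b) >= 0.5 else 0

# We only specify examples of the desired behaviour (logical OR) and the loss.
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

weights = train(OR_DATA)
print("learned weights:", weights)
print([predict(weights, x) for x, _ in OR_DATA])  # → [0, 1, 1, 1]
```

Nobody typed in the final weight values; they are whatever gradient descent settled on from a random start.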

Also look at Generative Adversarial Networks (GANs). The adversarial part is literally a network trained to detect bad AI-generated output; the generative part is then tweaked based on that error to produce better output. Rinse and repeat. Note that this by definition includes a (specific) piece of AI detector software: a GAN requires one to work.
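The adversarial loop can be sketched with a deliberately tiny, hypothetical 1-D GAN in plain Python. Assumptions: the "real" data is Gaussian noise around 2.0, the generator G(z) = z + b learns only an offset `b`, and the detector is a single logistic unit D(x) = sigmoid(w*x + c) with hand-written gradients. All names and hyperparameters are illustrative; real GANs use deep networks and an autodiff framework.

```python
import math
import random

def sigmoid(z: float) -> float:
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_toy_gan(real_mean=2.0, steps=3000, batch=64, lr=0.05, seed=0):
    """Hypothetical 1-D GAN: generator G(z) = z + b, detector D(x) = sigmoid(w*x + c)."""
    rng = random.Random(seed)
    b = 0.0          # generator parameter; starts away from the real data
    w, c = 0.0, 0.0  # detector (discriminator) parameters
    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + b for _ in range(batch)]

        # Detector step: minimise -[log D(real) + log(1 - D(fake))].
        gw = gc = 0.0
        for xr, xf in zip(real, fake):
            dr, df = sigmoid(w * xr + c), sigmoid(w * xf + c)
            gw += (dr - 1.0) * xr + df * xf
            gc += (dr - 1.0) + df
        w -= lr * gw / batch
        c -= lr * gc / batch

        # Generator step: minimise -log D(fake), using the detector's error signal
        # to move the fakes toward whatever the detector currently calls "real".
        gb = sum((sigmoid(w * xf + c) - 1.0) * w for xf in fake)
        b -= lr * gb / batch
    return b, w, c

b, w, c = train_toy_gan()
print(f"generator offset b = {b:.2f} (real mean is 2.0); detector slope w = {w:.2f}")
```

In this setup the generator's offset should drift toward the real mean while the detector's slope `w` shrinks toward zero, so the detector's verdict ends up near 0.5 for every input. A single logistic unit can only compare means, so this toy detector cannot even notice differences in spread.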

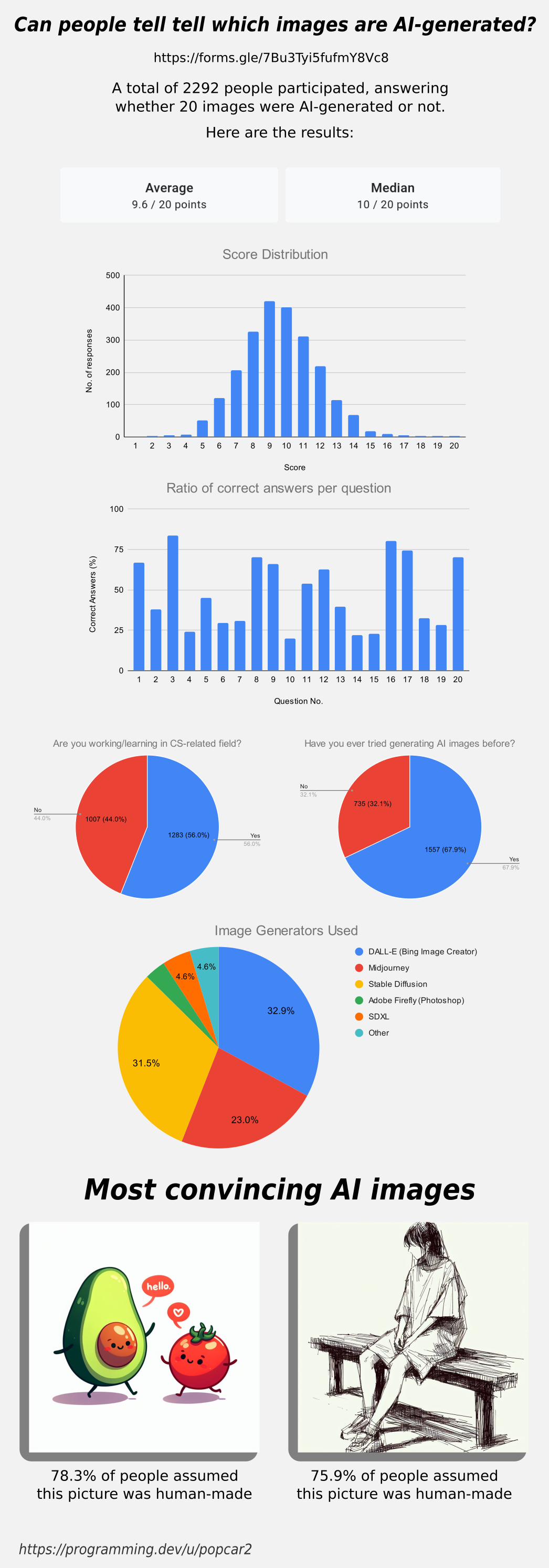

The results of this survey show that humans are no better than a coin flip.
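"No better than a coin flip" has a precise reading: the share of correct answers is not statistically distinguishable from 50%. A standard way to check that is an exact two-sided binomial test, sketched here with only Python's standard library. The function name and the 52-out-of-100 figures are hypothetical illustrations, not numbers from the survey. This direct version is fine for survey-sized counts (up to roughly a thousand answers); beyond that the intermediate integers overflow a float, and `scipy.stats.binomtest` is the usual tool.

```python
from math import comb

def binomial_two_sided_p(correct: int, trials: int, p: float = 0.5) -> float:
    """Exact two-sided binomial test against chance-level guessing.

    Returns the probability, if every answer were a coin flip with success
    probability p, of a result at least as unlikely as the one observed.
    """
    probs = [comb(trials, k) * p**k * (1 - p) ** (trials - k) for k in range(trials + 1)]
    observed = probs[correct]
    # Sum all outcomes no more likely than the observed one
    # (the small relative tolerance keeps symmetric ties from being dropped by rounding).
    return min(1.0, sum(q for q in probs if q <= observed * (1 + 1e-9)))

# Hypothetical example: 52 correct human/AI calls out of 100.
print(round(binomial_two_sided_p(52, 100), 3))
```

A large p-value here means the result is entirely consistent with guessing; it does not prove that people are guessing, only that the data cannot tell them apart from guessers.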

I didn't say "on par." I said we know how. I didn't say we were capable, but we know how it would be done. With AI detection, we have no idea how it would be done.

No, it doesn't. It speedruns the tedious parts of writing algorithms, but we still need to be able to frame the problem and tell the network what an acceptable solution would be.

Several startups, existing tech giants, AI companies, and university research departments have tried. There are literally millions on the line. All they've managed to do is get students wrongly suspended from school, misidentify the US Constitution as AI output, and build a network that's really good at identifying its training data and absolutely useless at identifying real-world data.
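Being great on training data and useless on real-world data is overfitting. Here is a deliberately extreme, hypothetical caricature in Python: a "detector" that simply memorizes its training set. The documents and their human/AI labels are made up and the labels are coin flips, so there is no real pattern to learn. This is not how any actual commercial detector works; it only illustrates the train/test gap.

```python
import random
from collections import Counter

def make_dataset(start, n, rng):
    """Stand-in documents with unique IDs and random human/AI labels (no real signal)."""
    return [(f"document-{i}", rng.choice(["human", "ai"])) for i in range(start, start + n)]

class MemorizingDetector:
    """Hypothetical overfit detector: a lookup table of everything it was trained on."""

    def fit(self, data):
        self.table = dict(data)
        # For unseen text, fall back to the most common training label.
        self.fallback = Counter(label for _, label in data).most_common(1)[0][0]
        return self

    def predict(self, doc):
        return self.table.get(doc, self.fallback)

def accuracy(model, data):
    return sum(model.predict(doc) == label for doc, label in data) / len(data)

rng = random.Random(42)
train_set = make_dataset(0, 1000, rng)    # what the detector was tuned on
test_set = make_dataset(1000, 1000, rng)  # "real world" text it has never seen
detector = MemorizingDetector().fit(train_set)
print("train accuracy:", accuracy(detector, train_set))  # → 1.0
print("test accuracy:", accuracy(detector, test_set))    # roughly 0.5, i.e. a coin flip
```

A perfect score on the training set says nothing about unseen text; only the held-out number measures whether anything general was learned.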

Note that I said this is probably impossible only because we've never done it before, and the experiments undertaken so far by some of the most brilliant people in the world have yielded useless results. I could be wrong, but the evidence so far suggests otherwise.

Right, thanks for the corrections.

In the case of GANs, it's stupidly simple why AI detection doesn't take off: the detector can only ever be half a cycle ahead (or behind).

Better AI detectors train better AI generators. So while an advantage technically exists for a brief moment, the other side immediately closes the gap; they train in tandem.

This doesn't tell us anything about non-GAN models, though, I think. And most AI isn't GAN-based, right?

True, at least currently. Image generators are mostly diffusion models, and LLMs are largely GPTs.