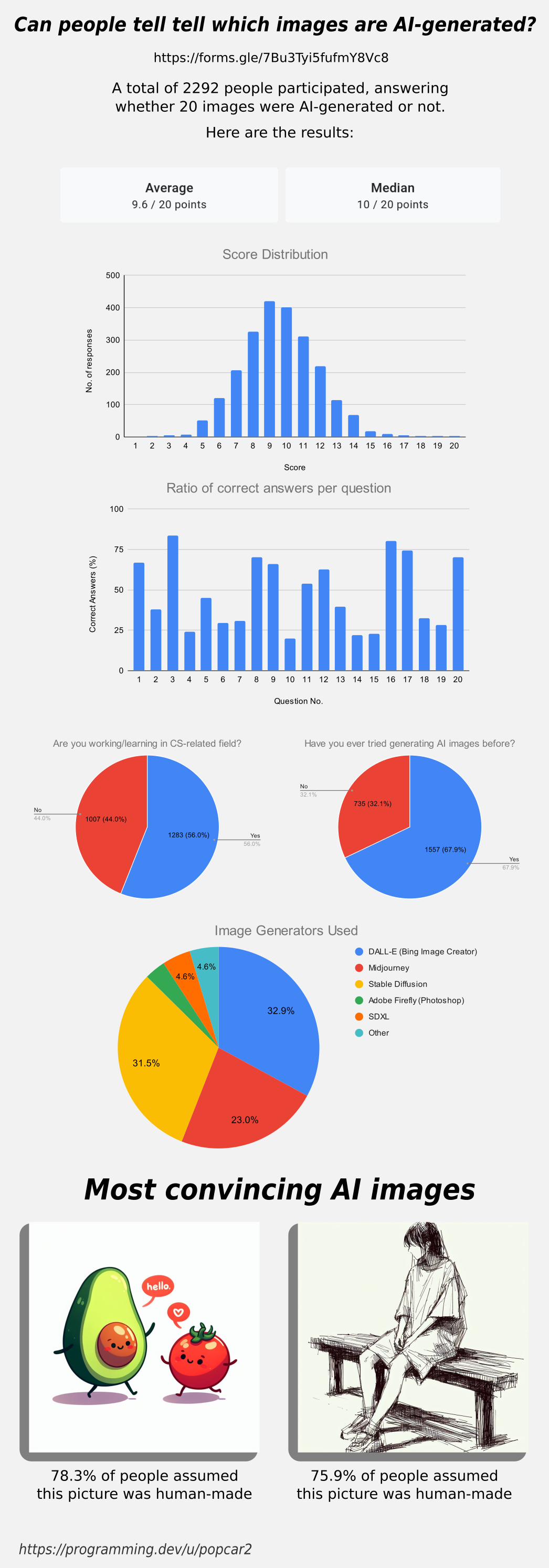

So if the average is roughly 10/20, that's about the same as responding randomly each time, does that mean humans are completely unable to distinguish AI images?

Technology

This is a most excellent place for technology news and articles.

Our Rules

- Follow the lemmy.world rules.

- Only tech related content.

- Be excellent to each another!

- Mod approved content bots can post up to 10 articles per day.

- Threads asking for personal tech support may be deleted.

- Politics threads may be removed.

- No memes allowed as posts, OK to post as comments.

- Only approved bots from the list below, to ask if your bot can be added please contact us.

- Check for duplicates before posting, duplicates may be removed

Approved Bots

In theory, yes. In practice, not necessarily.

I found that the images were not very representative of typical AI art styles I've seen in the wild. So not only would that render preexisting learned queues incorrect, it could actually turn them into obstacles to guessing correctly pushing the score down lower than random guessing (especially if the images in this test are not randomly chosen, but are instead actively chosen to dissimulate typical AI images).

I would also think it depends on what kinds of art you are familiar with. If you don’t know what normal pencil art looks like, how are ya supposed to recognize the AI version.

As an example, when I’m browsing certain, ah, nsfw art, I can recognize the AI ones no issue.

Agreed. For the image that was obviously an emulation of Guts from Berserk, the only reason I called it as AI generated was because his right eye was open. I don't know enough about illustration in general, which led me to guess quite a few incorrect examples there.

I found the photos much easier, and I guessed each of those correctly just by looking for the sort of "melty" quality you get with most AI generated photos, along with intersected line continuity (e.g. an AI probably would have messed up this image where the railing overlaps the plant stems; the continuity made me accept it as real). But with illustrations, I don't know nearly enough about composition and technique, so I can't tell if a particular element is "supposed" to be there or not. I would like to say that the ones I correctly called as AI were still moderately informed because some of them had that sort of AI-generated color balance/bloom to them, but most of them were still just coin toss to me.

Maybe you didn’t recognize the AI images in the wild and assumed they were human made. It’s a survival bias; the bad AI pictures are easy to figure out, but we might be surrounded by them and would not even know.

Same as green screens in movies. It’s so prevalent we don’t see them, but we like to complain a lot about bad green screens. Every time you see a busy street there’s a 90+ % chance it’s a green screen. People just don’t recognize those.

If you look at the ratios of each picture, you’ll notice that there are roughly two categories: hard and easy pictures. Based on information like this, OP could fine tune a more comprehensive questionnaire to include some photos that are clearly in between. I think it would be interesting to use this data to figure out what could make a picture easy or hard to identify correctly.

My guess is that a picture is easy if it has fingers or logical structures such as text, railways, buildings etc. while illustrations and drawings could be harder to identify correctly. Also, some natural structures such as coral, leaves and rocks could be difficult to identify correctly. When an AI makes mistakes in those areas, humans won’t notice them very easily.

The number of easy and hard pictures was roughly equal, which brings the mean and median values close to 10/20. If you want to bring that value up or down, just change the number of hard to identify pictures.

It depends on if these were hand picked as the most convincing. If they were, this can’t be used a representative sample.

But you will always hand pick generated images. It's not like you hit the generate button once and call it a day, you hit it dozens of times tweaking it until you get what you want. This is a perfectly representative sample.

Personally, I’m not surprised. I thought a 3D dancing baby was real.

One thing I'm not sure if it skews anything, but technically ai images are curated more than anything, you take a few prompts, throw it into a black box and spit out a couple, refine, throw it back in, and repeat. So I don't know if its fair to say people are getting fooled by ai generated images rather than ai curated, which I feel like is an important distinction, these images were chosen because they look realistic

Well, it does say "AI Generated", which is what they are.

All of the images in the survey were either generated by AI and then curated by humans, or they were generated by humans and then curated by humans.

I imagine that you could also train an AI to select which images to present to a group of test subjects. Then, you could do a survey that has AI generated images that were curated by an AI, and compare them to human generated images that were curated by an AI.

Technically you're right but the thing about AI image generators is that they make it really easy to mass-produce results. Each one I used in the survey took me only a few minutes, if that. Some images like the cat ones came out great in the first try. If someone wants to curate AI images, it takes little effort.

I think if you consider how people will use it in real life, where they would generate a bunch of images and then choose the one that looks best, this is a fair comparison. That being said, one advantage of this kind of survey is that it involves a lot of random, one-off images. Trying to create an entire gallery of images with a consistent style and no mistakes, or trying to generate something that follows a design spec is going to be much harder than generating a bunch of random images and asking whether or not they're AI.

Did you not check for a correlation between profession and accuracy of guesses?

I have. Disappointingly there isn't much difference, the people working in CS have a 9.59 avg while the people that aren't have a 9.61 avg.

There is a difference in people that have used AI gen before. People that have got a 9.70 avg, while people that haven't have a 9.39 avg score. I'll update the post to add this.

Can we get the raw data set? / could you make it open? I have academic use for it.

Sure, but keep in mind this is a casual survey. Don't take the results too seriously. Have fun: https://docs.google.com/spreadsheets/d/1MkuZG2MiGj-77PGkuCAM3Btb1_Lb4TFEx8tTZKiOoYI

Do give some credit if you can.

Of course! I'm going to find a way to integrate this dataset into a class I teach.

If I can be a bother, would you mind adding a tab that details which images were AI and which were not? It would make it more usable, people could recreate the values you have on Sheet1 J1;K20

Done, column B in the second sheet contains the answers (Yes are AI generated, No aren't)

Sampling from Lemmy is going to severely skew the respondent population towards more technical people, even if their official profession is not technical.

I still don’t believe the avocado comic is one-shot AI-generated. Composited from multiple outputs, sure. But I have not once seen generative AI produce an image that includes properly rendered text like this.

Bing image creator uses the new DALL-E model which does hands and text pretty good.

generated this first try with the prompt a cartoon avocado holding a sign that says 'help me'

People forget just how fast this tech is evolving

Absolutely SDXL with loras already can do a lot of what it was thought impossible.

Image generation tech has gone crazy over the past year and a half or so. At the speed it's improving I wouldn't rule out the possibility.

Here's a paper from this year discussing text generation within images (it's very possible these methods aren't SOTA anymore -- that's how fast this field is moving): https://openaccess.thecvf.com/content/WACV2023/html/Rodriguez_OCR-VQGAN_Taming_Text-Within-Image_Generation_WACV_2023_paper.html

Something I'd be interested in is restricting the "Are you in computer science?" question to AI related fields, rather than the whole of CS, which is about as broad a field as social science. Neural networks are a tiny sliver of a tiny sliver

Especially depending on the nation or district a person lives in, where CS can have even broader implications like everything from IT Support to Engineering.

I got a 17/20, which is awesome!

I’m angry because I could’ve gotten an 18/20 if I’d paid attention to the thispersondoesnotexists’ glasses, which in hindsight, are clearly all messed up.

I did guess that one human-created image was made by AI, “The End of the Journey”. I guessed that way because the horses had unspecific legs and no tails. And also, the back door of the cart they were pulling also looked funky. The sky looked weirdly detailed near the top of the image, and suddenly less detailed near the middle. And it had birds at the very corner of the image, which was weird. I did notice the cart has a step-up stool thing attached to the door, which is something an AI likely wouldn’t include. But I was unsure of that. In the end, I chose wrong.

It seems the best strategy really is to look at the image and ask two questions:

- what intricate details of this image are weird or strange?

- does this image have ideas indicate thought was put into them?

About the second bullet point, it was immediately clear to me the strawberry cat thing was human-made, because the waffle cone it was sitting in was shaped like a fish. That’s not really something an AI would understand is clever.

One the tomato and avocado one, the avocado was missing an eyebrow. And one of the leaves of the stem of the tomato didn’t connect correctly to the rest. Plus their shadows were identical and did not match the shadows they would’ve made had a human drawn them. If a human did the shadows, it would either be 2 perfect simplified circles, or include the avocado’s arm. The AI included the feet but not the arm. It was odd.

The anime sword guy’s armor suddenly diverged in style when compared to the left and right of the sword. It’s especially apparent in his skirt and the shoulder pads.

The sketch of the girl sitting on the bench also had a mistake: one of the back legs of the bench didn’t make sense. Her shoes were also very indistinct.

I’ve not had a lot of practice staring at AI images, so this result is cool!

Wow, what a result. Slight right skew but almost normally distributed around the exact expected value for pure guessing.

Assuming there were 10 examples in each class anyway.

It would be really cool to follow up by giving some sort of training on how to tell, if indeed such training exists, then retest to see if people get better.

I feel like the images selected were pretty vague. Like if you have a picture of a stick man and ask if a human or computer drew it. Some styles aew just hard to tell

You could count the fingers but then again my preschooler would have drawn anywhere from 4 to 40.

One thing I'd be interested in is getting a self assessment from each person regarding how good they believe themselves to have been at picking out the fakes.

I already see online comments constantly claiming that they can "totally tell" when an image is AI or a comment was chatGPT, but I suspect that confirmation bias plays a big part than most people suspect in how much they trust a source (the classic "if I agree with it, it's true, if I don't, then it's a bot/shill/idiot")

And this is why AI detector software is probably impossible.

Just about everything we make computers do is something we're also capable of; slower, yes, and probably less accurately or with some other downside, but we can do it. We at least know how. We can't program software or train neutral networks to do something that we have no idea how to do.

If this problem is ever solved, it's probably going to require a whole new form of software engineering.

Wow, I got a 12/20. I thought I would get less. I'm scared for the future of artists

Having used stable diffusion quite a bit, I suspect the data set here is using only the most difficult to distinguish photos. Most results are nowhere near as convincing as these. Notice the lack of hands. Still, this establishes that AI is capable of creating art that most people can't tell apart from human made art, albeit with some trial and error and a lot of duds.

Idk if I'd agree that cherry picking images has any negative impact on the validity of the results - when people are creating an AI generated image, particularly if they intend to deceive, they'll keep generating images until they get one that's convincing

At least when I use SD, I generally generate 3-5 images for each prompt, often regenerating several times with small tweaks to the prompt until I get something I'm satisfied with.

Whether or not humans can recognize the worst efforts of these AI image generators is more or less irrelevant, because only the laziest deceivers will be using the really obviously wonky images, rather than cherry picking

These images were fun, but we can't draw any conclusions from it. They were clearly chosen to be hard to distinguish. It's like picking 20 images of androgynous looking people and then asking everyone to identify them as women or men. The fact that success rate will be near 50% says nothing about the general skill of identifying gender.

I have it on very good authority from some very confident people that all ai art is garbage and easy to identify. So this is an excellent dataset to validate my priors.

Curious which man made image was most likely to be classified as ai generated

Sketches are especially hard to tell apart because even humans put in extra lines and add embellishments here and there. I'm not surprised more than 70% of participants weren't able to tell that one was generated.