Anyone can download, but practically no one can run it.

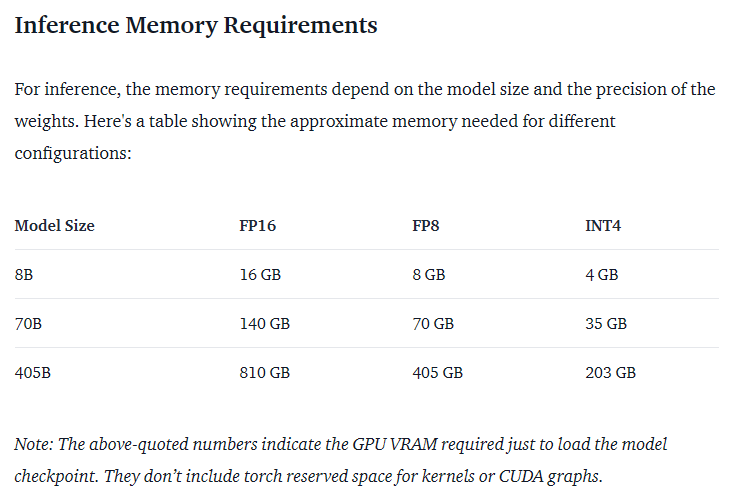

With the absolute highest memory compression settings, the largest model that you can fit inside of 24GB of VRAM is 109 billion parameters.

Which means even with crazy compression, you need at the very least, ~100GB of VRAM to run it. That's only in the realm of the larger workstation cards which cost around $24,000 - $40,000 each, so y'know.