Please remove it if unallowed

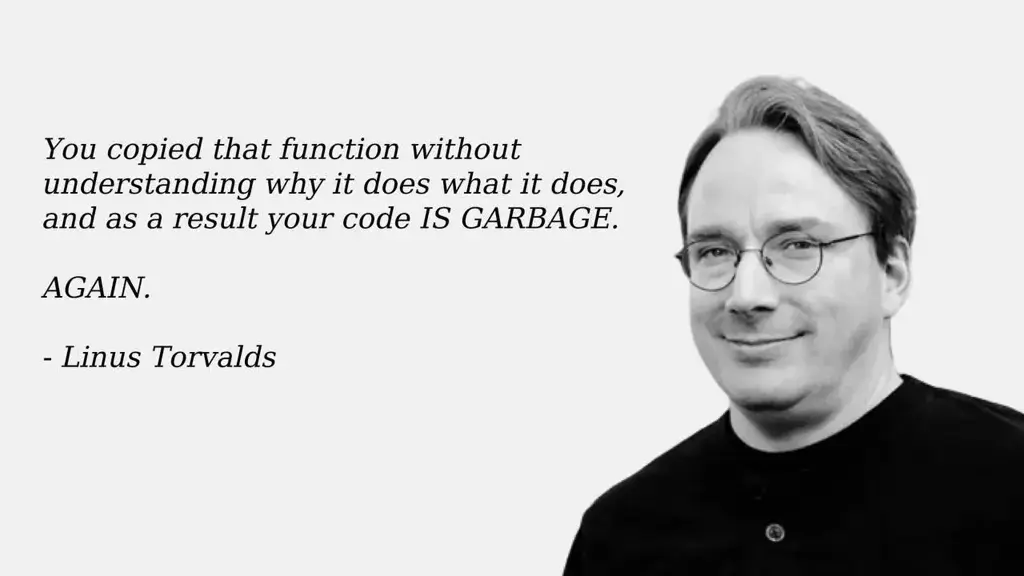

I see alot of people in here who get mad at AI generated code and I am wondering why. I wrote a couple of bash scripts with the help of chatGPT and if anything, I think its great.

Now, I obviously didnt tell it to write the entire code by itself. That would be a horrible idea, instead, I would ask it questions along the way and test its output before putting it in my scripts.

I am fairly competent in writing programs. I know how and when to use arrays, loops, functions, conditionals, etc. I just dont know anything about bash's syntax. Now, I could have used any other languages I knew but chose bash because it made the most sense, that bash is shipped with most linux distros out of the box and one does not have to install another interpreter/compiler for another language. I dont like Bash because of its, dare I say weird syntax but it made the most sense for my purpose so I chose it. Also I have not written anything of this complexity before in Bash, just a bunch of commands in multiple seperate lines so that I dont have to type those one after another. But this one required many rather advanced features. I was not motivated to learn Bash, I just wanted to put my idea into action.

I did start with internet search. But guides I found were lacking. I could not find how to pass values into the function and return from a function easily, or removing trailing slash from directory path or how to loop over array or how to catch errors that occured in previous command or how to seperate letter and number from a string, etc.

That is where chatGPT helped greatly. I would ask chatGPT to write these pieces of code whenever I encountered them, then test its code with various input to see if it works as expected. If not, I would ask it again with what case failed and it would revise the code before I put it in my scripts.

Thanks to chatGPT, someone who has 0 knowledge about bash can write bash easily and quickly that is fairly advanced. I dont think it would take this quick to write what I wrote if I had to do it the old fashioned way, I would eventually write it but it would take far too long. Thanks to chatGPT I can just write all this quickly and forget about it. If I want to learn Bash and am motivated, I would certainly take time to learn it in a nice way.

What do you think? What negative experience do you have with AI chatbots that made you hate them?

That is the general reason, i use llms to help myself with everything including coding too, even though i know why it's bad

That is the general reason, i use llms to help myself with everything including coding too, even though i know why it's bad