Heyha!

This is probably going to be a long take, and it's late here in Europe... So to those who bear with me and are ready to read through my broken English, thank you.

I'm personally concerned about how my data and my identity are used against my will while surfing the web or using/hosting services. As a self-hoster and networking enthusiast, I run an entry-to-medium level security infrastructure.

It ranges from a self-hosted adblocker, DNS, router, VLANs, containers, server, firewall, WireGuard, VPN... you name it! I was pretty happy to see all my traffic being encrypted in Wireshark and to have what I consider a solid homelab.

I also have most undesired DNS queries/ads blocked with AdGuard in Firefox, with a custom configuration blocking everything, and I changed some about:config options:

- privacy.resistFingerprinting

- privacy.trackingprotection.fingerprinting.enabled

- ...

I thought I had pretty hardened security and a safe browsing experience, but oh my, I was wrong...

From pixel tracking, to WebRTC leaking your real IP, font fingerprinting, canvas fingerprinting, audio fingerprinting, the Android default keyboard sending samples, SSL certificates with known vulnerabilities...

And most of them are not even new tracking tech... I mean, even Firefox 54 was aware of most of these ways of fingerprinting the user, and it makes me feel Firefox is just another evil corp hiding behind a fancy privacy facade! Ugh...

And even if you somehow randomize those fingerprints and your user-agent and block most of those things, this makes you stand out from the crowd and makes you even easier to track or fingerprint. Yeah, it's something I read recently and it actually makes sense... the best way to be somewhat invisible is to blend into the crowd... If you stand out, you are pretty sure to be noticed and identified (if that makes sense :/)
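
To put a rough number on the blending-in idea, here is a minimal sketch of how much identifying information a browser setup gives away, measured in bits of "surprisal". The shares used below are made-up values for illustration only, not real measurements, and `surprisal_bits` is just a helper name I picked.

```python
import math


def surprisal_bits(share: float) -> float:
    """Bits of identifying information revealed by a trait that
    `share` (between 0 and 1) of all users have in common."""
    if not 0 < share <= 1:
        raise ValueError("share must be in (0, 1]")
    return -math.log2(share)


# Hypothetical shares, for illustration only (not real statistics).
common_setup = 0.25        # a stock configuration that 1 in 4 users run
rare_setup = 1 / 100_000   # a heavily customized one that 1 in 100,000 run

print(f"common setup: {surprisal_bits(common_setup):.1f} bits")
print(f"rare setup:   {surprisal_bits(rare_setup):.1f} bits")
```

The rarer the configuration, the more bits it leaks, and every extra bit halves the crowd you can hide in. That is why a heavily tweaked browser can end up more trackable than a stock one.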

This really makes me depressed right now... It feels like a losing battle where my energy is just being wasted trying to get some privacy and anonymity on the web... All while fighting against the new laws knocking on our doors and big tech companies always being two steps ahead...

I'm really asking myself if it really matters and if it actually makes sense to use hardened setups or browsers like arkenfox or the Tor Browser, whose exit nodes are mostly intercepted by private and governmental institutions...

I'm probably overthinking and falling into a deep hole... But the more I dig into security and privacy, the more I get the feeling that this is an already lost battle against big tech...

A recent source:

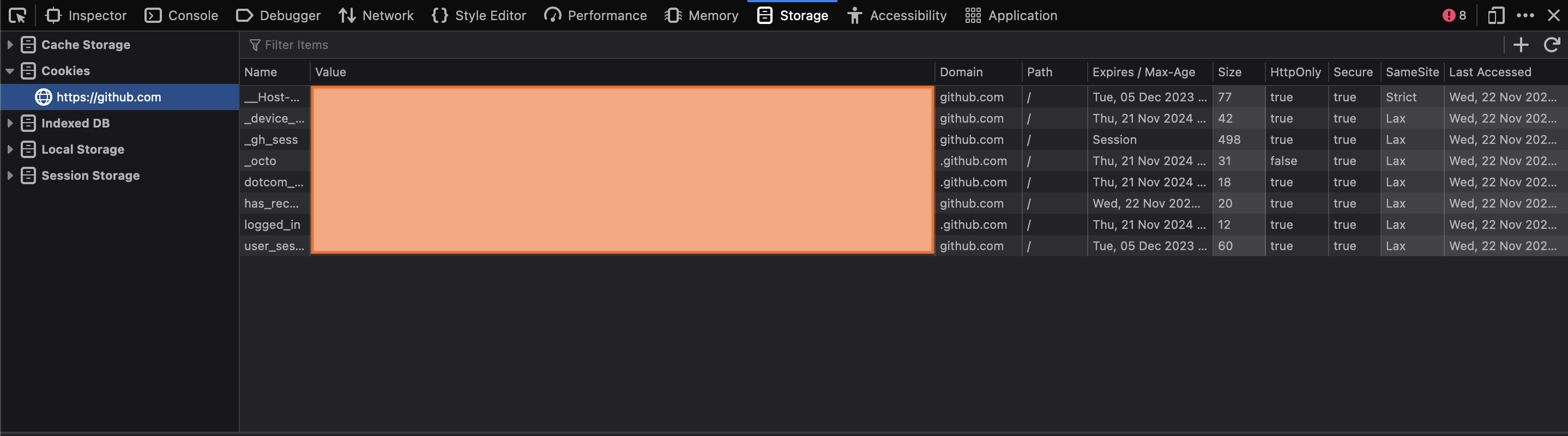

As long as they continue to maintain the GitHub repository and keep it free, without any hidden ads/spyware or restrictions, I will continue to use their service.