this post was submitted on 18 Oct 2024

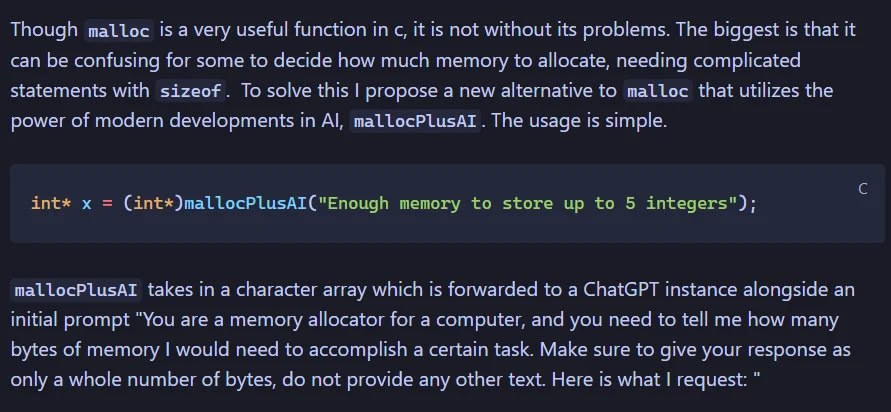

I might need reeducation, because I think that image is probably the closest thing to an appropriate use case for LLMs I've ever seen.

I don't think the idea is terrible, and I can see its use cases, but I think implementing it directly in MySQL is extremely silly. Imagine having to run every instance of your database server on beefy hardware just to handle the prompts. Do the actual processing on a separate machine with the appropriate hardware, then shove the result into the database with another query when it's done.
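For what it's worth, here's a rough sketch of that "process elsewhere, then write it back" flow. Everything in it is an assumption for illustration: `sqlite3` stands in for MySQL so it runs anywhere, the `reviews` table and its columns are made up, and `summarize` is a hypothetical placeholder that just truncates text where a real worker would call a model on the separate machine.

```python
import sqlite3


def summarize(text: str, limit: int = 100) -> str:
    """Hypothetical placeholder for a model call made on a separate machine."""
    # Stand-in logic only: collapse whitespace and truncate to `limit` characters.
    return " ".join(text.split())[:limit]


def backfill_summaries(conn: sqlite3.Connection) -> int:
    """Summarize rows that have no summary yet and write the results back."""
    rows = conn.execute(
        "SELECT id, body FROM reviews WHERE summary IS NULL"
    ).fetchall()
    for review_id, body in rows:
        # The database only ever sees a plain UPDATE, never the model itself.
        conn.execute(
            "UPDATE reviews SET summary = ? WHERE id = ?",
            (summarize(body), review_id),
        )
    conn.commit()
    return len(rows)


# Assumed schema, in-memory so the sketch is self-contained.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE reviews (id INTEGER PRIMARY KEY, body TEXT NOT NULL, summary TEXT)"
)
conn.execute(
    "INSERT INTO reviews (body) VALUES (?)",
    ("Great   product, would buy again. " * 10,),
)
updated = backfill_summaries(conn)
```

The point of the split is that the database server stays on ordinary hardware and the worker can be scaled or replaced on its own.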

Probably useful in data science

It's nice to be able to do something like this without having to use an ORM, especially if you need a version of the data that's limited to a certain character count.

Like having a replica on the edge that serves the 100-character summaries, then only loads the full 1000+ character record when a user interacts with it.
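A minimal sketch of that split, under loud assumptions: two in-memory `sqlite3` databases play the roles of the origin and the edge replica, and the table names, `list_summaries`, and `load_full` are all hypothetical names picked for the example.

```python
import sqlite3

# "Origin" holds the full records (assumed schema).
origin = sqlite3.connect(":memory:")
origin.execute(
    "CREATE TABLE reviews (id INTEGER PRIMARY KEY, body TEXT, summary TEXT)"
)
origin.execute(
    "INSERT INTO reviews VALUES (1, ?, ?)",
    ("x" * 1200, "Short summary of review 1"),
)
origin.commit()

# "Edge" replica only carries the id and the short summary.
edge = sqlite3.connect(":memory:")
edge.execute("CREATE TABLE review_summaries (id INTEGER PRIMARY KEY, summary TEXT)")
edge.executemany(
    "INSERT INTO review_summaries VALUES (?, ?)",
    origin.execute("SELECT id, summary FROM reviews").fetchall(),
)
edge.commit()


def list_summaries():
    """Cheap listing served from the edge replica."""
    return edge.execute(
        "SELECT id, summary FROM review_summaries ORDER BY id"
    ).fetchall()


def load_full(review_id):
    """Fetch the full record from the origin only when a user opens it."""
    row = origin.execute(
        "SELECT body FROM reviews WHERE id = ?", (review_id,)
    ).fetchone()
    return row[0] if row else None
```

Listing pages would only call `list_summaries()`, and the 1000+ character body crosses the wire only when `load_full()` is called for a specific record.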

A summary of the review is also more useful than just the first 100 characters of a review.

If the model used for that is moderately light, I could see it actually using less energy over time in high-volume applications, provided it lowers overall bandwidth.

This is all assuming that the model is only run once, or on record update, and not every time the query is run...
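One way to get that "only on update" behaviour, sketched under assumptions: store a hash of the text next to the summary and skip the model when the hash hasn't changed. `sqlite3` stands in for the real database, the `body_hash` column and `refresh_summary` are hypothetical, and `summarize` is a truncating placeholder that counts how often it gets called.

```python
import hashlib
import sqlite3

model_calls = 0  # counts how often the (placeholder) model actually runs


def summarize(text: str, limit: int = 100) -> str:
    """Hypothetical placeholder for the expensive model call."""
    global model_calls
    model_calls += 1
    return " ".join(text.split())[:limit]


def refresh_summary(conn: sqlite3.Connection, review_id: int) -> bool:
    """Regenerate the summary only if the body changed; return True if it ran."""
    body, stored_hash = conn.execute(
        "SELECT body, body_hash FROM reviews WHERE id = ?", (review_id,)
    ).fetchone()
    current_hash = hashlib.sha256(body.encode("utf-8")).hexdigest()
    if current_hash == stored_hash:
        return False  # unchanged since the last run, so skip the model
    conn.execute(
        "UPDATE reviews SET summary = ?, body_hash = ? WHERE id = ?",
        (summarize(body), current_hash, review_id),
    )
    conn.commit()
    return True


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE reviews ("
    "id INTEGER PRIMARY KEY, body TEXT NOT NULL, summary TEXT, body_hash TEXT)"
)
conn.execute("INSERT INTO reviews (id, body) VALUES (1, 'Works well, arrived on time.')")

first = refresh_summary(conn, 1)   # new record: the model runs
second = refresh_summary(conn, 1)  # unchanged: the model is skipped
conn.execute("UPDATE reviews SET body = 'Stopped working after a week.' WHERE id = 1")
third = refresh_summary(conn, 1)   # body changed: the model runs again
```

Reads then just select the stored `summary` column, so query volume has no effect on how often the model runs.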