this post was submitted on 05 Feb 2024

Memes

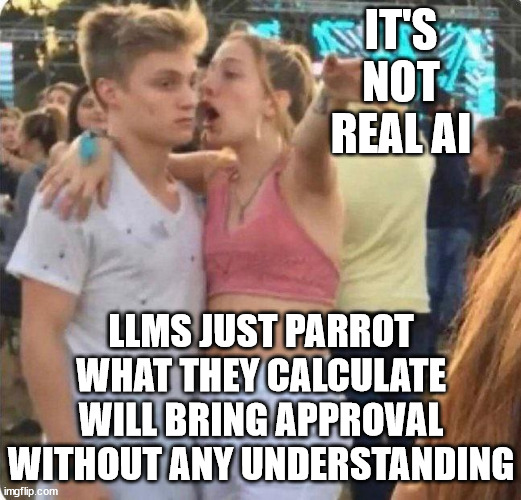

They're predicting the next word without any concept of right or wrong, there is no intelligence there. And it shows the second they start hallucinating.

They are a bit like taking just the creative writing center of a human brain. So they are like one part of a human mind without sentience or understanding or long-term memory. Just the creative part, even though they are mediocre at being creative atm. But it's shocking because we kind of expected that to be the last part of the human mind that could be replicated.

Put enough of these "parts" of a human mind together and you might get a proper sentient mind sooner rather than later.

Exactly. I'm not saying it's not impressive or even not useful, but one should understand the limitations. For example, you can't reason with an LLM in the sense that you could convince it of your reasoning. It will only respond how most people in the training dataset would have responded (obviously simplified).

You're repeating your point, but there was already agreement that this is how AI is now.

I fear you may have glossed over the second part, where he states that once we simulate other parts of the brain, things start to look different very quickly.

There do seem to be two kinds of opinions on AI:

- Those who look at AI in the present compared to a present-day human. This seems to be the majority of people overall.

- Those who look at AI like a statistic: where it was in the past, what improved it, and, within reason, how it will look soon enough. This is the majority of people who work in the AI industry.

For me, the present day is simply practice for what is yet to come. Because if we don't nuke ourselves back to the Stone Age, something currently undefinable is coming.

What I fear is AI being used with malicious intent. Corporations that use it for collecting data, for example. Or governments just jailing everyone an AI tells them to.

I'd expect governments to use it to craft public relations strategies. An extension of what they do now by hiring the smartest sociopaths on the planet. Not sure if this would work but I think so. Basically you train an AI on previous messaging and results from polls or voting. And then you train it to suggest strategies to maximize support for X. A kind of dumbification of the masses. Of course it's only going to get shittier from there on out.

I didn't, I just focused on how it is today. I think it can become very big and threatening but also helpful, but that's just pure speculation at this point :)

...or you might not.

It's fun to think about, but we don't understand the brain well enough to extrapolate from AIs in their current form to sentience. Even the "parts" of the mind you mention are not clearly defined.

There are so many potential hidden variables. Sometimes I think people need reminding that the brain is the most complex thing in the universe. We don't fully understand it yet, and neural networks are just loosely based on the structure of neurons, not an exact replica.

True, it's speculation. But before GPT-3 I never imagined AI achieving creativity. I had no idea how you would do it, and I would have said it's a hard problem or like magic, and poof, now it's a reality. A huge leap in quality driven just by quantity of data and computing. It was shocking that it's "so simple", at least in this case.

So that should tell us something. We don't understand the brain, but maybe there isn't much to understand. How the biocomputing hardware works is relatively clear, and it's all made out of the same stuff. So it stands to reason that the other parts or functions of a brain might also be replicated in similar ways.

Or maybe not. Or we might need a completely different way to organize and train other functions of a mind. Or it might take a much larger increase in speed and memory.

You say maybe there's not much to understand about the brain, but I entirely disagree. It's the most complex object in the known universe and we haven't discovered all of its secrets yet.

Generating pictures from a vast database of training material is nowhere near comparable.

Ok, again I'm just speculating so I'm not trying to argue. But it's possible that there are no "mysteries of the brain", that it's just irreducible complexity. That it's just due to the functionality of the synapses and the organization of the number of connections and weights in the brain? Then the brain is like a computer you put a program in. The magic happens with how it's organized.

And yeah we don't know how that exactly works for the human brain, but maybe it's fundamentally unknowable. Maybe there is never going to be a language to describe human consciousness because it's entirely born out of the complexity of a shit ton of simple things and there is no "rhyme or reason" if you try to understand it. Maybe the closest we get are the models psychology creates.

Then there is fundamentally no difference between painting based on a "vast database of training material" in a human mind and in a computer AI. Currently, AI image generation is a bit limited in creativity and it's mediocre, but it's there.

Then it would logically follow that all the other functions of a human brain are similarly "possible" if we train it right and add enough computing power and memory. Without ever knowing the secrets of the human brain. I'd expect the truth somewhere in the middle of those two perspectives.

Another argument in favor of this would be that the human brain came about through evolution, through random change that was filtered (at least if you do not believe in intelligent design). That means there is no clever organizational structure or something underlying the brain. Just change, test, filter, reproduce. The worst, most complex spaghetti code in the universe. Code written by a moron that can't be understood. But that means it should also be reproducible by similar means.

Possible, yes. It's also entirely possible there are interactions we have yet to discover.

I wouldn't claim it's unknowable. Just that there's little evidence so far to suggest any form of sentience could arise from current machine learning models.

That hypothesis is not verifiable at present as we don't know the ins and outs of how consciousness arises.

Lots of things are possible; we use the scientific method to test them, not speculative logical arguments.

These would need to be defined.

Can't be sure of this... For example, what if quantum interactions are involved in brain activity? How does the grey matter in the brain affect the functioning of neurons? How do the heart/gut affect things? Do cells which aren't neurons provide any input? Does some aspect of consciousness arise from the very material the brain is made of?

As far as I know all the above are open questions and I'm sure there are many more. But the point is we can't suggest there is actually rudimentary consciousness in neural networks until we have pinned it down in living things first.

I have a silly little model I made for creating Vogon poetry. One of the models is fed from Shakespeare. The system works by predicting the next letter rather than the next word (and whitespace is just another letter as far as it's concerned). Here's one from the Shakespeare generation:

KING RICHARD II:

Exetery in thine eyes spoke of aid.

Burkey, good my lord, good morrow now: my mother's said

This is silly nonsense, of course, and for its purpose, that's fine. That being said, as far as I can tell, "Exetery" is not an English word. Not even one of those made-up English words that Shakespeare created all the time. It's certainly not in the training dataset. However, it does sound like it might be something Shakespeare pulled out of his ass and expected his audience to understand through context, and that's interesting.

Wow, sounds amazing, big props to you! Are you planning on releasing the model? Would be interested tbh :D

Nothing special about it, really. I only followed this TensorFlow tutorial:

https://www.tensorflow.org/text/tutorials/text_generation

The Shakespeare dataset is on there. I also have another model that uses entries from the Joyce Kilmer Memorial Bad Poetry Contest, and also some of the works of William Topaz McGonagall (who is basically the Tommy Wiseau of 19th-century English poetry). The code is the same between them, however.
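
For anyone wondering what "predicting the next letter" looks like mechanically, here is a toy sketch in Python. To be clear, this is not the tutorial's code: the tutorial trains a recurrent neural network, while this swaps in a plain character-level Markov chain (counting which letter follows each short context) just to keep it tiny. The function names `build_model` and `generate` and the sample text are made up for illustration.

```python
import random
from collections import Counter, defaultdict


def build_model(text, order=3):
    """Count which character follows each `order`-character context."""
    model = defaultdict(Counter)
    for i in range(len(text) - order):
        context = text[i:i + order]
        model[context][text[i + order]] += 1
    return model


def generate(model, seed, length=80, rng=None):
    """Extend `seed` one character at a time by sampling from the counts."""
    rng = rng or random.Random()
    order = len(next(iter(model)))  # all contexts share the same length
    out = seed
    for _ in range(length):
        followers = model.get(out[-order:])
        if not followers:  # context never seen in training: stop early
            break
        chars, weights = zip(*followers.items())
        out += rng.choices(chars, weights=weights)[0]
    return out


# Hypothetical stand-in for a training corpus; whitespace and punctuation
# are treated as ordinary characters, as in the comment above.
sample = "good my lord, good morrow now: my mother's said good morrow, my lord. "
model = build_model(sample * 3, order=3)
print(generate(model, "goo", length=60, rng=random.Random(0)))
```

Because the model only ever sees short letter contexts, it can stitch together strings that never appear as whole words in the training text, which is one plausible way something like "Exetery" comes out. A neural model generalizes far more than this counting approach does, so take it as an intuition pump only.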

Nice, thx

...yeah dude. Hence artificial intelligence.

There aren't any cherries in artificial cherry flavoring either 🤷‍♀️ and nobody is claiming there are