this post was submitted on 28 May 2024

I don't know if this is a great example. Chess is an environment with an extremely defined end goal and very strict rules.

The ability of a chess engine to defeat human players does not mean it became creative or gained insight. Rather, we increased the complexity of chess engines so that they cover more possibilities, more strategies, etc. In addition, it was quite naive for people to suggest that a computer would be incapable of "real analysis" when its ability to do so depends entirely on humans' ability to build a model complex enough to compute "real analyses" in a known system.

I guess my argument is that in the scope of chess engines, humans underestimated the ability of a computer to determine solutions in a closed system, which is usually what computers do best.

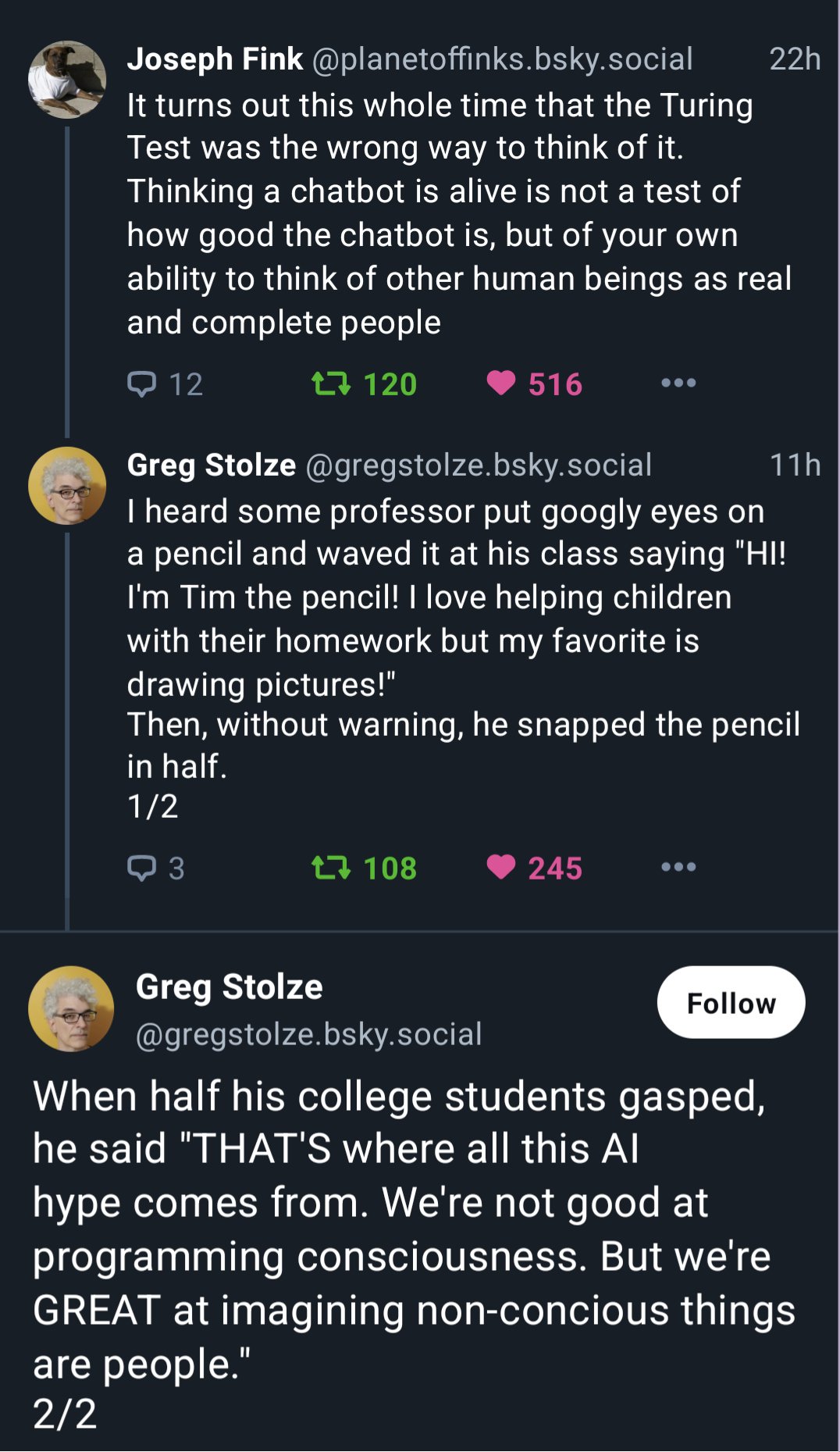

Consciousness, on the other hand, cannot be easily defined, nor does it adhere to strict rules. We cannot compare a computer's ability to replicate consciousness to any other system (e.g. chess strategy) as we do not have a proper and comprehensive understanding of consciousness.

I'm not saying that because chess engines became better than humans, LLMs will become conscious. I'm just using that example to say humans always have this bias to frame anything that is not human as inherently lesser, when it might not be. Chess engines don't think like a human does, yet they play better. So for an AI to become conscious, it doesn't need to think like a human either, just have some mechanism that ends up with a similar enough result.

Yeah, I can agree with that. So long as the processes in an AI result in behavior that meets the necessary criteria (albeit currently undefined), one can argue that the AI has consciousness.

I guess the main problem is that if we ever fully quantify consciousness, it will likely be entirely within the frame of human thinking... How do we map the capabilities of a machine onto that model? In the example of the chess engine, there is a strict win/lose/draw condition. I'm not sure we can ever define anything like that for consciousness.