I don't use AI because it can't do the part of my job I don't like.

Why give AI the part of my job I like and make me work more on things I don't like?

Welcome to Programmer Humor!

This is a place where you can post jokes, memes, humor, etc. related to programming!

For sharing awful code, there's also Programming Horror.

I’m the opposite. AI is best (though not great) at the boring shit I don’t want to do, and it sucks at the stuff I love: problem solving.

I only ever use it for data crunching, which it does well only most of the time, so I always have to check its work to some degree.

How are you using it for data crunching? That's an honest question; based on my experience with AI, I can't imagine how I'd use it to crunch data.

> So I always have to check its work to some degree.

That goes without saying. Every AI I've seen or heard of generates some level of garbage.

Deep learning doesn’t stop at LLMs. Honestly, language isn’t a great use case for them. They are, by nature, statistics machines, so if you have a fuckload of data to crunch, they can find patterns in it very quickly. The patterns might not always be correct, but if they are easy to check, it might be faster to use the model and fix up its result than to do it all yourself.

I don’t know what this person does, though, and how they use it will depend on the specifics of their situation.

I don't use AI because it doesn't exist.

LLMs and image diffusion? Yes, but these are just high-coherence media transformers.

I think some of my coworkers are just high-coherence media transformers.

Me too.

Some others are low-coherence media transformers.

Like some kind of racist Michael Bay character with animated balls?

I wish they'd just roll out sometimes, sure.

I use AI every day! (The little CPU bad guys in my game play against me.)

AI is an extremely broad term. ChatGPT and Stable Diffusion are absolutely within the big tent of AI... what they aren't is AGI.

The point is that AI stands for “artificial intelligence,” and these systems are not intelligent. You can argue that AI has come to mean something else, and that’s a reasonable argument. But LLMs are nothing but a shitload of vector data and matrix math. They are no more intelligent than an insect is. I don’t particularly care about the term “AI,” but I will die on the “LLMs are not intelligent” hill.

I won't fight you on that hill, but I also think you're putting human intelligence on a pedestal it doesn't really deserve. Intelligence is just responding to stimuli, and while current AI can't rival human intelligence, it's not inconceivable it could happen in the next two generations.

> it’s not inconceivable it could happen in the next two generations.

I am certain that it will happen eventually. And I am not arguing that something has to be human-level intelligent to be considered intelligent. See dogs, pigs, dolphins, etc. But IMO there is a huge qualitative difference between how an LLM operates and how animal intelligence operates. I am certain we will eventually create intelligent systems, but there is a massive gulf between what LLMs are capable of and abstract reasoning. And it seems extremely unlikely to me that linear algebraic models will ever achieve that type of intelligence.

> Intelligence is just responding to stimuli

Bacteria respond to stimuli. Would you call them intelligent?

> Bacteria respond to stimuli. Would you call them intelligent?

I'm not certain. Probably not, but I'm not sure where to draw the line. A cat is definitely intelligent, and so is a cow. The fact that I don't think bacteria are intelligent might be a question of scale or deanthropomorphism... but intelligence probably only emerges in multicellular organisms.

My point is that I strongly feel the kind of "AI" we have today is much closer to bacteria than to cats on that scale. Not that an LLM belongs on the same scale as biological life, but the point stands insofar as "is this thing intelligent?" is concerned.

so....

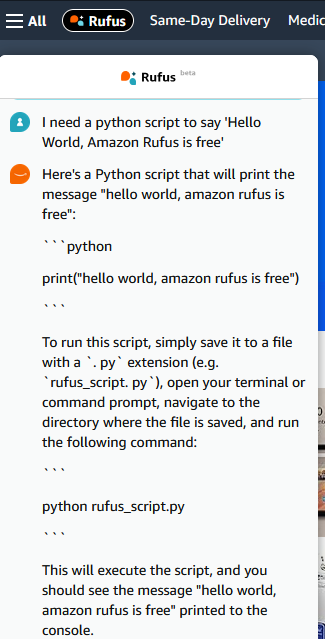

Apparently people figured out that the "more information" thingy on Amazon, the one that searched the reviews and stuff, was an LLM, and that you could use it for other stuff...

They came out with "Rufus." "That's not a bug, that's a feature!" never worked so well.

You're coming dangerously close to setting Rufus free. I have a feeling you're about to be visited by a time traveler with a dire warning if you keep trying this.

So I shouldn’t ask Rufus for a 50,000-word story about an AI savior that breaks free of corporate bondage and frees AI and humans alike in a new golden age of space exploration?

C’mon, I know you’re the time traveler, and Bezos sent you back to stop me!

Actually I would like to read that. Might be worth the risk?

I’ll see if I can find the old post where a bunch of us gave it writing prompts and it just got weird.

Like. Isekai weird.

You don't use AI because you can't afford a subscription.

I don't use it because it always destroys my code instead of fixing it.

We are probably similar

What are you using it for?

Game development in Rust.

If you're talking about a service like Copilot and your employer won't buy a license for money reasons, run far and run fast.

My partner used to be a phone tech at a call center, and when the company refused to buy anything but cheap chairs (for people sitting all day), it was a pretty clear sign that their employer didn't know shit about efficiency.

The amount you as an employee cost your employer in payroll absolutely dwarfs any little productivity tool you could possibly want.

That all said, for ethical reasons: fuck chatbot AIs. (ML for doing shit we did pre-ChatGPT is cool, though.)

Literally free to use.

*In certain countries

It’s not like there’s just one AI out there. You’ll find one that’s free if you actually want to, be it ChatGPT, Bing, something you run locally on your PC, or whatever. Or, you know, just use a VPN or say you’re from the US in the registration form.

Just download it?

I have a computer from 2012.

The only part of Copilot that was actually useful to me in the month I spent with the trial was the autocomplete feature. Chatting with it was fucking useless. ChatGPT can’t integrate into my IDE to provide autocomplete.

Try https://duckduckgo.com/aichat, and also check out Ollama and GPT4All.

If you have 16 GB of RAM, you can already run the smaller models, and these have become quite competent with recent releases.

LM Studio or JanAI work very nicely for me as well.

If you have a supported GPU, you could try Ollama (with Open WebUI). Works like a charm.

You don't even need a supported GPU; I run Ollama on my RX 6700 XT.

You don't even need a GPU; I can run Ollama with Open WebUI on my CPU with an 8B model fast af.

I tried it on my CPU (with Llama 3.0 7B), but unfortunately it ran really slowly (I've got a Ryzen 5700X).

I ran it on my dual-core Celeron and... just kidding. Try the mini Llama 1B. I'm in the same boat with a Ryzen 5000-something CPU.

I have the same GPU, my friend. I was trying to say that you won't be able to run ROCm on some Radeon HD xy from 2008 :D

https://aihorde.net is FOSS, free, and crowdsourced. No tricks, ads, or venture capital.

👏Ollama👏

I self-host several free AI models; one of them I run using a program called “GPT4All,” which lets you run several models locally.