I love it for what I use it for: research, speeding up scripting and code writing, resume building, paraphrasing stupidly long news articles, teaching me Spanish and Japanese, bypassing the bullshit that passes for search engines these days, and talking my anxiety down. These tools cut through the noise and boost my productivity.

Asklemmy

A loosely moderated place to ask open-ended questions

Using AI like DeepSeek is a lot easier than sifting through 50 search results, though if the question is about a relatively new technology it usually doesn't work.

As of now it's overblown. Still a useful tool tho. I've been using DeepSeek to help with resume formatting. Just don't let them do any actual writing for you or they'll make shit up. But for formatting they're great. Feed it what you wrote, and ask it to clean it up without changing the text. Reread it to make sure it didn't change stuff anyway. It's also great at things like troubleshooting issues. Way better than just googling it. You can still run into hallucinations tho, so be careful. I also recommend avoiding any western AI and only using Chinese AI. It's better and safer.

I think it's fine if used in moderation. I use mine for doing the mindless day-to-day stuff like writing cover letters or business-type emails. I don't use it for anything creative though, just to free myself up to do that stuff.

I also suck at coding so I use it to write little scripts and stuff. Or at least to do the framework and then I finish them off.

Generative AI is just an advanced chat bot, a toy that uses too much power to be efficient.

My personal experience is that any output has to be double checked and edited. It would be better to just do whatever I asked it to do myself from the beginning. When it can fact check itself and cite sources, then it might become useful.

An AI that can comb through vast amounts of data and output the specific data relevant to the question presented, rather than a generative AI, might be useful. But it can't analyze data very well at the current moment. It hallucinates too much.

It's bullshit. It's inauthentic. It can be useful for chewing through data, but even then the output can't be trusted. The only people I've met who are absolutely thrilled by it are my bosses, who are two of the most frustrating, stupid, pig-headed, petty people I've ever met. I wish it would go away. I'm quitting my job next week, taking a big pay cut and barely being able to pay the bills, specifically because those two people are unbearable. They also insist that I use AI as much as possible.

Most GenAI was trained on material they had no right to train on (including plenty of mine). So I'm doing my small part, and serving known AI agents an infinite maze of garbage. They can fuck right off.

Now, if we're talking about real AI that isn't just a server park of disguised Markov chains in a trenchcoat, or neural networks that weren't trained on stolen data, that's a whole different story.

I like to think somewhere researchers are working on actual AI and the AI has already decided that it doesn't want to read bullshit on the internet

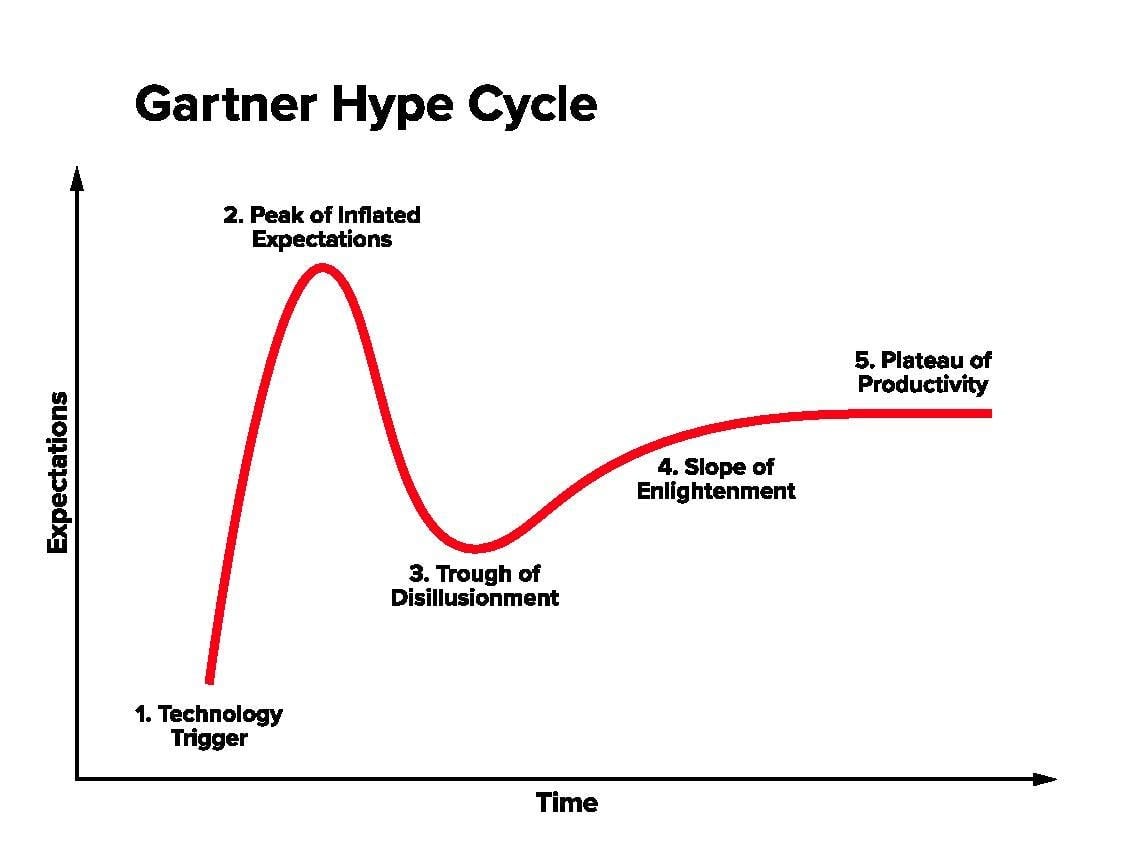

It's a tool with some interesting capabilities. It's very much in a hype phase right now, but legitimate uses are also emerging. Automatically generating subtitles is one good example of that. We also don't know what the plateau for this tech will be. Right now there are a lot of advancements happening at rapid pace, and it's hard to say how far people can push this tech before we start hitting diminishing returns.

For non-generative uses, using neural networks to look for cancerous tumors is a great use case: https://pmc.ncbi.nlm.nih.gov/articles/PMC9904903/

Another use case is using neural nets to monitor infrastructure the way China is doing with their high speed rail network https://interestingengineering.com/transportation/china-now-using-ai-to-manage-worlds-largest-high-speed-railway-system

DeepSeek R1 appears to be good at analyzing code and suggesting potential optimizations, so it's possible that these tools could work as profilers https://simonwillison.net/2025/Jan/27/llamacpp-pr/

I do think it's likely that LLMs will become a part of more complex systems using different techniques in complementary ways. For example, neurosymbolics seems like a very promising approach. It uses deep neural nets to parse and classify noisy input data, and then uses a symbolic logic engine to operate on the classified data internally. This addresses a key limitation of LLMs, which is their inability to reason in a reliable way and to explain how they arrive at a solution.
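To make the two-stage idea concrete, here is a rough Python sketch of the split described above. It is only an illustration under loud assumptions: `classify` is a hypothetical thresholding function standing in for a trained neural net, and the rule names and confidence scores are invented for the example. It is not how any particular neurosymbolic system is built.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical stand-in for a trained neural network: it turns noisy
# confidence scores into discrete symbols. A real system would use a
# learned model here instead of a fixed threshold.
def classify(readings: Dict[str, float], threshold: float = 0.5) -> Set[str]:
    return {name for name, score in readings.items() if score >= threshold}

# Symbolic rules as (premises, conclusion). All names are illustrative.
RULES: List[Tuple[frozenset, str]] = [
    (frozenset({"has_wings", "has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "can_swim"}), "is_waterfowl"),
]

def infer(facts: Set[str]) -> Tuple[Set[str], List[str]]:
    """Forward-chain over RULES until nothing new can be derived.

    Returns the full set of known facts plus a trace of the rules that
    fired, which is what makes the reasoning explainable.
    """
    known = set(facts)
    trace: List[str] = []
    changed = True
    while changed:
        changed = False
        for premises, conclusion in RULES:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                trace.append(f"{' and '.join(sorted(premises))} => {conclusion}")
                changed = True
    return known, trace

# Invented scores, standing in for the output of a perception model.
noisy_input = {"has_wings": 0.91, "has_feathers": 0.78, "can_swim": 0.64, "has_fur": 0.08}
facts = classify(noisy_input)
conclusions, explanation = infer(facts)
for step in explanation:
    print(step)
```

The point of the split is that the fuzzy part only has to produce symbols, while every conclusion after that comes with a list of the rules that produced it.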

Personally, I generally feel positively about this tech and I think it will have a lot of interesting uses down the road.

I personally hate the path that AI is going down. Generative AI steals art and scrapes text to create garbage on demand, using too much power and computing resources that could be spent on better purposes, such as simulating protein folding for disease research (see Folding@home). u/yogthos@lemmy.ml gave some good uses of AI.

To be honest, I think it's a severe mistake that AI is continuing to improve. As long as you aren't gullible and know what to look for, you can tell when something is AI-generated, but there are too many people who are easily fooled by AI-generated images and videos. When ChatGPT was released, I thought it was a nice toy, but now that I know the methods by which such large-scale models obtain their training data, I can only resent it. As long as generative models continue to improve in the accuracy of their text and images, my hatred towards them will grow in turn.

P.S.: don't use the term "AI art", for the love of God. Art captures human emotions and experiences; machines can't understand them, they are only silicon. Only humans can create art, nothing else.

I kinda wish we had one on Lemmy that summarized articles, since we don't have the userbase of Reddit (there's always some dude summarizing the facts without the fluff in the comments). AI is good at summaries.

I straight up don't like reading most of these articles; they're often written in a way that makes you stay on the page longer.

Let me know when we have some real AI to evaluate rather than products labeled as a marketing ploy. Anyone remember when everything had to be called "3D" because it was cool? I missed my chance to get 3D stereo cables.

It’s a glorified crawler that is incredibly inefficient. I don’t use it because I’ve been programmed to be picky about my sources and LLMs haven’t.

I'm a layman in terms of AI, but I think it can be a useful tool if used in the proper context. I use it when I struggle to find something by regular internet search. The fact that you can search in a conversational style and specify what you need as you go is great.

I feel it is pushed into contexts where it has no place and where its usefulness is limited or counterproductive.

Then there is the question of the improper use of copyrighted material, which is terrible.

Actual piracy doesn't bother me, but I'm supposed to care that a robot learned English by reading library books? Learning is what libraries are for. Yeah, the draw-anything robot can only draw The Simpsons because it's seen The Simpsons. How else was it supposed to happen?

Training is transformative use. You can't spend a zillion compute-hours guessing the next word of a story, in such a way that it can fake Tolkien retelling Shrek as a rap battle, and claim that's the same as LordOfTheRings.txt on an FTP server. What the network is and does will not substitute the original work. Not unless the Silmarillion had more swamp ogres than I've heard.

Image stuff will become a brush that does whatever you tell it. Type the word "inks" and drag it over your sketch, and it'll smooth out your lines. Type the word "photorealistic" and it'll turn your blocky shading into unreasonably good lighting. None of this prevents human art. The more you put in, the more you get out. Stable Diffusion is a denoiser, where the concept of noise can be defined as bad anatomy.

Video stuff might end Hollywood, as soon as editors figure out they've inherited the Earth. The loosest animatics can become finished shots without opening Blender or picking up a camera. A static image of what a character looks like should be enough to say, this stick figure is that guy. Or this actor is that cartoon character. Or this cardboard cutout is that approaching spaceship. The parts that don't look like that are noise, and get removed. We're rapidly going to learn how blobby and blurry an input can be, for the machine to export a shot from your head, just the way you imagine it. And where it's not exactly what you intended - neither is any shot ever filmed. A film only exists in the edit. So anyone who can string together some already-spooky output, based on the stories they'd like to tell, is going to be a studio unto themselves.

Pretty cool technology ruined by greed. If we don't get this under control (which we probably won't), we're in for a pretty interesting age of the Internet, maybe even the last one.

Mixed feelings. I decided not to study graphic design because I saw the writing on the wall, so I'm a little salty. I think they can be really useful for cutting back on menial tasks though. For example, I don't see why people bitch about someone using AI for their cover letter as long as they proofread it afterwards. That seems like the kind of thing you'd want to automate, unlike art and human interaction.

I think right now I just kind of hate AI because of capitalism. Tech companies are trying to make it sound like they can do so many things they really can't, and people are falling for it.

Writing a cover letter is a good exercise in self-reflection.

True, I just assumed that reflection was required in order to give the AI the prompt, and the AI was mainly used to format it correctly. I might be talking out of my ass here since I haven't used it extensively.

Hype bubble. Has potential, but nothing like what is promised.

The trough of disillusionment!

As a tool for reducing our societal need to do hard labor, I think it is incredibly useful. As it is generally used in America, I think it is an egregious form of creative theft that threatens to replace a large range of the working class in our nation.

agreed, I'm staying hopeful it'll improve lives for most when used efficiently, at the cost of others losing jobs, sadly.

on the other hand, wealth inequality will worsen until policies change

I think AI will end truth as a concept.

If it does then we also lose the ability to even say that that's what it's done. And if that's the case then has it really done it? /ponders uselessly

I would probably be a bit more excited if it didn't start coming out during a time of widespread disinformation and anti-intellectualism.

I just come here to share animal facts and similar things, and the amount of reasonably realistic AI images and poorly compiled "fact sheets", and recently also passable videos of non-real animals is very disappointing. It waters down basic facts as it blends in to more and more things.

Stuff like that is the lowest level of bad in the grand scheme of things. I don't even like to think of the intentionally malicious ways we'll see it be used. It's going to be the robocaller of the future, not just spamming our landlines, but everything. I think I could live without it.

AI is a tool, and a lot of fields used it successfully prior to the ChatGPT craze. It's excellent for structural extraction and comprehension, and it will slowly change the way most of us work, but there's a hell of a craze right now.

- I don’t think it’s useful for a lot of what it’s being promoted for. Its pushers are exploiting the common conception of software as a process whose behavior is rigidly constrained and can be trusted to operate within those constraints, but this isn’t generally true for machine learning.

- I think it sheds some new light on human brain functioning, but only reproduces a specific aspect of the brain, namely the salience network (i.e., the part of our brain that builds a predictive model of our environment and alerts us when the unexpected happens). This can be useful for picking up on subtle correlations our conscious brains would miss, but those who think it can be incrementally enhanced into reproducing the entire brain (or even the part of the brain we would properly call consciousness) are mistaken.

- Building on the above, I think generative models imitate the part of our subconscious that tries to “fill in the blanks” when we see or hear something ambiguous, not the part that deliberately creates meaningful things from scratch. So I don’t think it’s a real threat to the creative professions. I think they should be prevented from generating works that would be considered infringing if they were produced by humans, but not from training on copyrighted works that a human would be permitted to see or hear and be affected by.

- I think the parties claiming that AI needs to be prevented from falling into “the wrong hands” are themselves the most likely parties to abuse it. I think it’s safest when it’s open, accessible, and unconcentrated.

What are your thoughts on AI?

No. It is an unneeded waste of resources spent by anti-human perverts.

The actual purpose is to parse surveillance data for the capitalist class.

No joke, it will probably kill us all. The Doomsday Clock is citing fascism, Nazis, pandemics, global warming, nuclear war, and AI as the harbingers of our collective extinction. The only thing I would add is that AI itself will likely speed-run and coordinate these other world-ending disasters. It's both humanity's greatest invention and our assured doom.

“AI” is humanity’s greatest invention…? wtf lol

Death. Kill 'em all. Butlerian jihad now. Anybody trying to give machines even the illusion of thought is a traitor to humanity. I know this might sound hyperbolic; it's not. I am not joking rn. I mean it.

You sound like the spiritualist empires in Stellaris.

Whatever that means, it sounds based (I've been meaning to play Stellaris for ages but haven't really gotten around to it since the one game I played back in like 2018 when I bought it)

The pushback against genAI's mostly reactionary moral panic with (stupid|misinformation|truth stretching) talking points , such's :

- AI art being inherently "plagiarising"

- AI using as much energy's crypto , the AI = crypto mindset in general

- AI art "having no soul" , .*

- "Peops use AI to do «BAD THING» , therefour AI ISZ THE DEVILLLL ‼‼‼"

- .*

Any legitimate criticisms sadly drowned out by this bollocks , can't trust anti AI peops to actually criticise the tech . Am bitter

> AI art being inherently “plagiarising”

Yes it is, simply due to the nature of the "training"/"learning" process, which is learning in name alone. If you know how this mathematical process works, you know the machine's definition of success is how well its output matches the data it was trained with. The machine is effectively trying to encrypt its database in its nodes. I would recommend you inform yourself on how the "training" process actually works, down to the mathematical level.

> AI using as much energy’s crypto , the AI = crypto mindset in general

AI is often pushed by the same people who pushed NFTs and whatnot, so this is somewhat understandable. And yes, AI consumes a lot of energy and water. Maybe not as much as crypto, but still, not something we can afford to use for mindless entertainment in our current climate catastrophe.

> AI art “having no soul”

Yup. AI "art" works by finding pixel patterns that repeat with a given token. Due to its nature, it can only repeat patterns which it identified in its training data. Now, we have all heard the saying "An image is worth a thousand words". This saying is quite the understatement. For one to describe an image down to the last detail, in such detail that someone who never saw the image could perfectly replicate it, one would need more than a thousand words, as evidenced by computer image files, since these are basically what was just described. The training data never has enough detail to describe the whole image in such detail, and therefore it is incapable of doing anything too specific.
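For a rough sense of scale on the "thousand words" point, the arithmetic can be sketched in Python. Every figure here is an assumption picked for illustration (a 512x512 image, 3 bytes per pixel, about 6 bytes per English word), and it compares against an uncompressed image; compressed formats such as JPEG or PNG would narrow the gap.

```python
# All figures below are assumptions chosen only for illustration.
WIDTH, HEIGHT = 512, 512   # image size in pixels
BYTES_PER_PIXEL = 3        # 8 bits each for red, green, blue
WORDS = 1000               # the proverbial thousand words
BYTES_PER_WORD = 6         # roughly five letters plus a space

image_bytes = WIDTH * HEIGHT * BYTES_PER_PIXEL
text_bytes = WORDS * BYTES_PER_WORD
ratio = image_bytes / text_bytes

print(f"uncompressed image: {image_bytes} bytes")
print(f"thousand words:     {text_bytes} bytes")
print(f"the image needs about {ratio:.0f}x more data")
```

Under these assumptions the raw pixel data is a bit over a hundred times larger than a thousand words of plain text.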

Art is very personal, the more of yourself you put into a piece, the more unique and "soulful" it will be. The more of the work you delegate to the machine, the less of yourself you can put into the piece, and if 100% of the image generation was made by the machine, which is in turn simply calculating an average image that matches the prompt, then nothing of you is in the piece. It is nothing more than the maths that created it.

Simple text descriptions do not give the human meaningful control over the final piece, and that is why pretty much any artist worth their title is not using it.

Also, the irony that we are automating the arts, something which people enjoy doing, instead of the soul-degrading jobs nobody wants to do, should not be lost on us.

> “Peops use AI to do «BAD THING» , therefour AI ISZ THE DEVILLLL ‼‼‼”

It is true that AI is being used in horrible ways that will take some time to adapt to, but the negative usages of AI simply have more visibility than the positive usages. As a matter of fact, this node network technology was already in use in many fields before the ChatGPT-induced AI hype train.

> can’t trust anti AI peops to actually criticise the tech

Correct. It is well known that those who stand to financially benefit from the success of AI are more than willing to lie about its true capabilities.