this post was submitted on 02 Aug 2024

1522 points (98.4% liked)

Science Memes

Welcome to c/science_memes @ Mander.xyz!

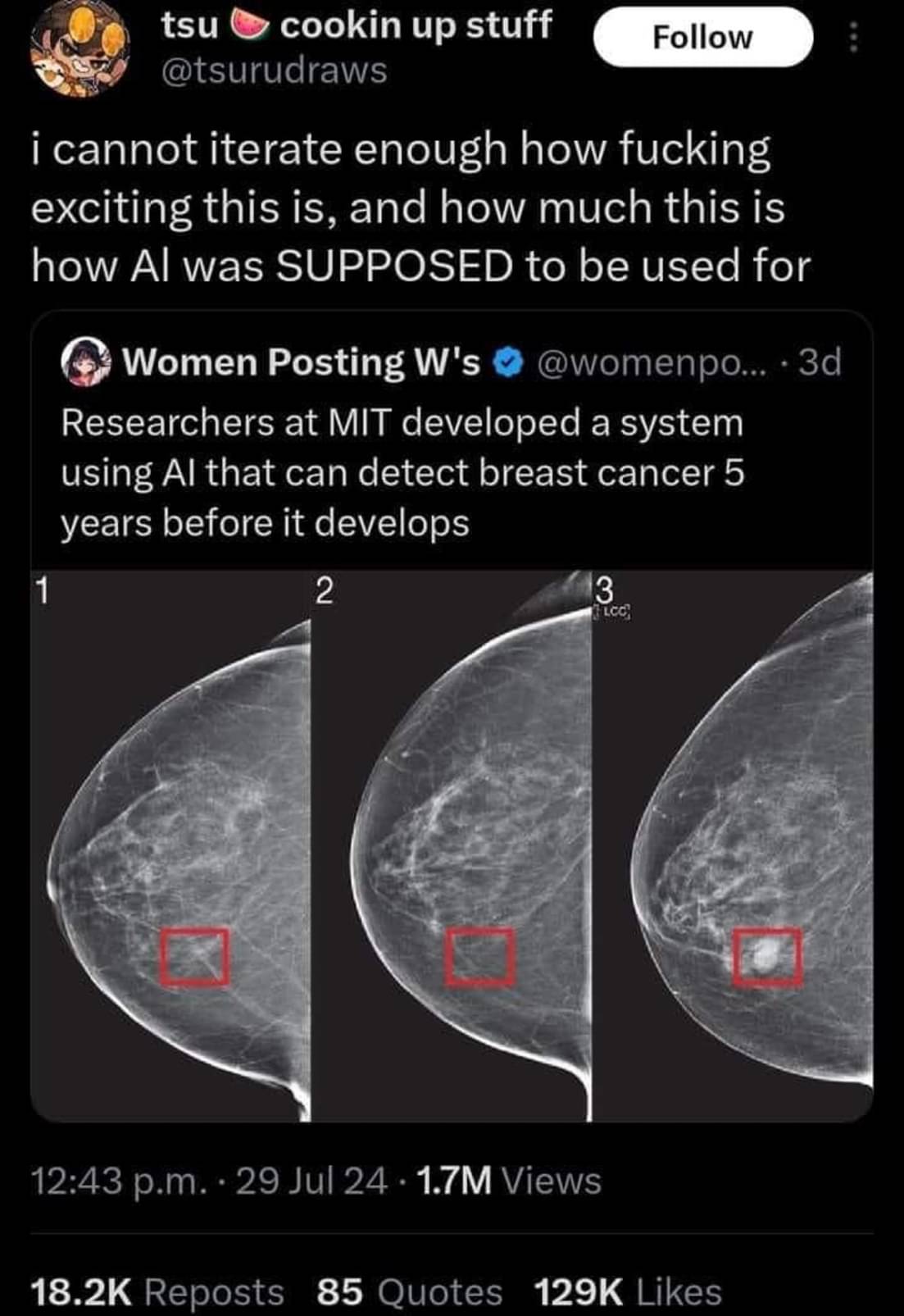

Hey look, this took me like 5 minutes to find.

Censius guide to AI interpretability tools

Here's a good thing to wonder: if you don't know how your black-box model works, how do you know it isn't racist?

Here's what looks like a university paper on interpretability tools:

Oh yeah. I forgot about that. I hope your model is understandable enough that it doesn't get you in trouble with the EU.

Oh look, here you can actually see one particular interpretability tool being used to interpret one particular model. Funny that, people actually caring what their models are using to make decisions.

Look, maybe you were having a bad day, or maybe slapping people is literally your favorite thing to do, who am I to take away mankind's finer pleasures, but this attitude of yours is profoundly stupid. It's weak. You don't want to know? It doesn't make you curious? Why are you comfortable not knowing things? That's not how science is propelled forward.

"Enough" is doing a fucking ton of heavy lifting there. You cannot explain a terabyte of floating point numbers. Same way you cannot guarantee a specific doctor or MRI technician isn't racist.

A single drop of water contains billions of molecules, and yet, we can explain a river. Maybe you should try applying yourself. The field of hydrology awaits you.

No, we cannot explain a river, or the atmosphere. Hence weather forecasts are only good for a few days, and even after massive computer simulations, aircraft, cars, and ships still need tunnel testing and real-life testing, because we can only approximate the real thing in our models.

You can't explain a river? It goes downhill.

I understand that complicated things frighten you, Tja, but I don't understand what any of this has to do with being unsatisfied when an insurance company denies your claim and all they have to say is "the big robot said no.. uh... leave now?"

The wheels on the bus go round and round

interpretability costs money though :v