this post was submitted on 04 Aug 2024

memes

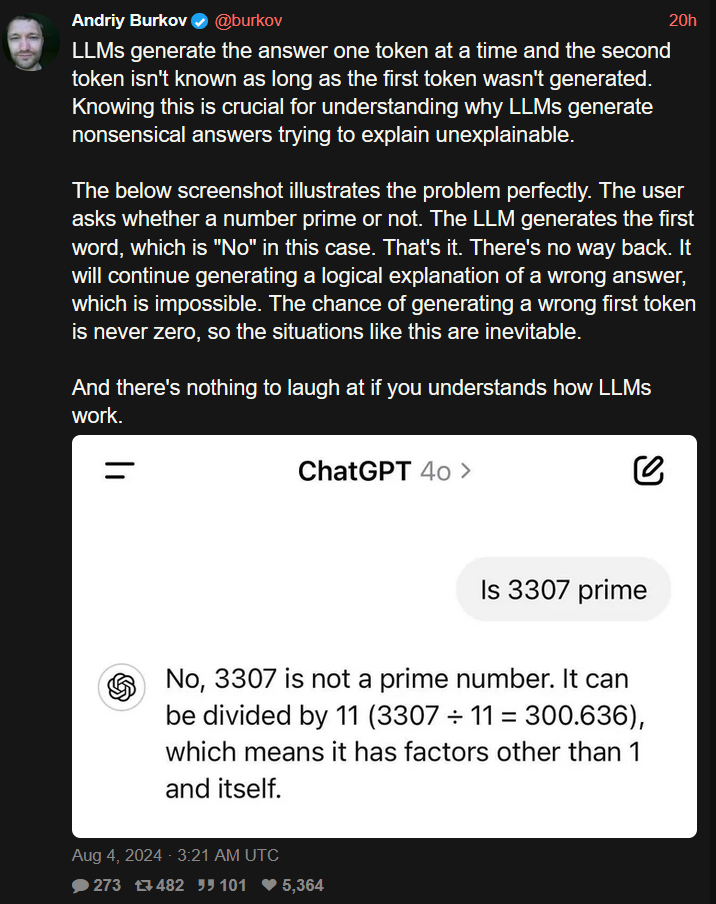

I think the best way to show people how LLMs fail is to get them to ask it leading questions.

By design, LLMs can't handle leading questions unless the expert system sitting on top of them can.

For example, get them to ask why something obviously wrong is right. This works best with very industry-specific topics that the expert system managing responses hasn't been programmed to handle.

In my industry: "Thanks for helping me figure out my 1:13 split fiber optic network. What even-sized cable would I need to make the implementation work?"

It will either refuse to answer outright or give no real answer and just start explaining terms. When you get a response like that, it's because another LLM system rewrote the response due to low confidence in the answer. That system is usually instructed to rephrase the answer so it asserts nothing and focuses only on the individual elements of the question.

The usual response I get is a list of definitions and tautologies followed by "I need more information," but that's not what the LLM responded with in this case. Responses like that are tailored by another LLM that is triggered when confidence in a response is low.