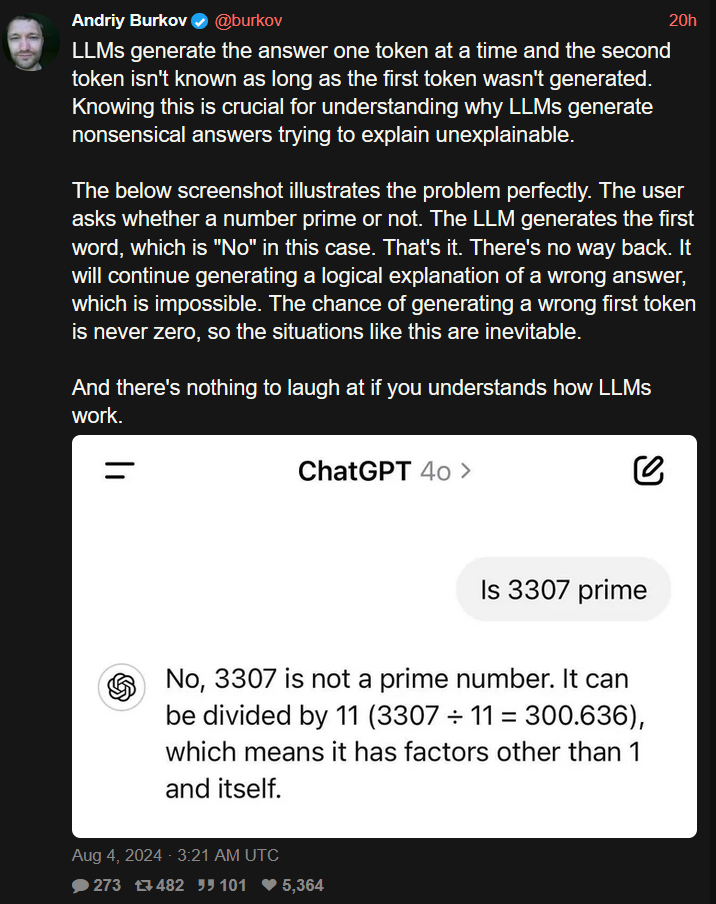

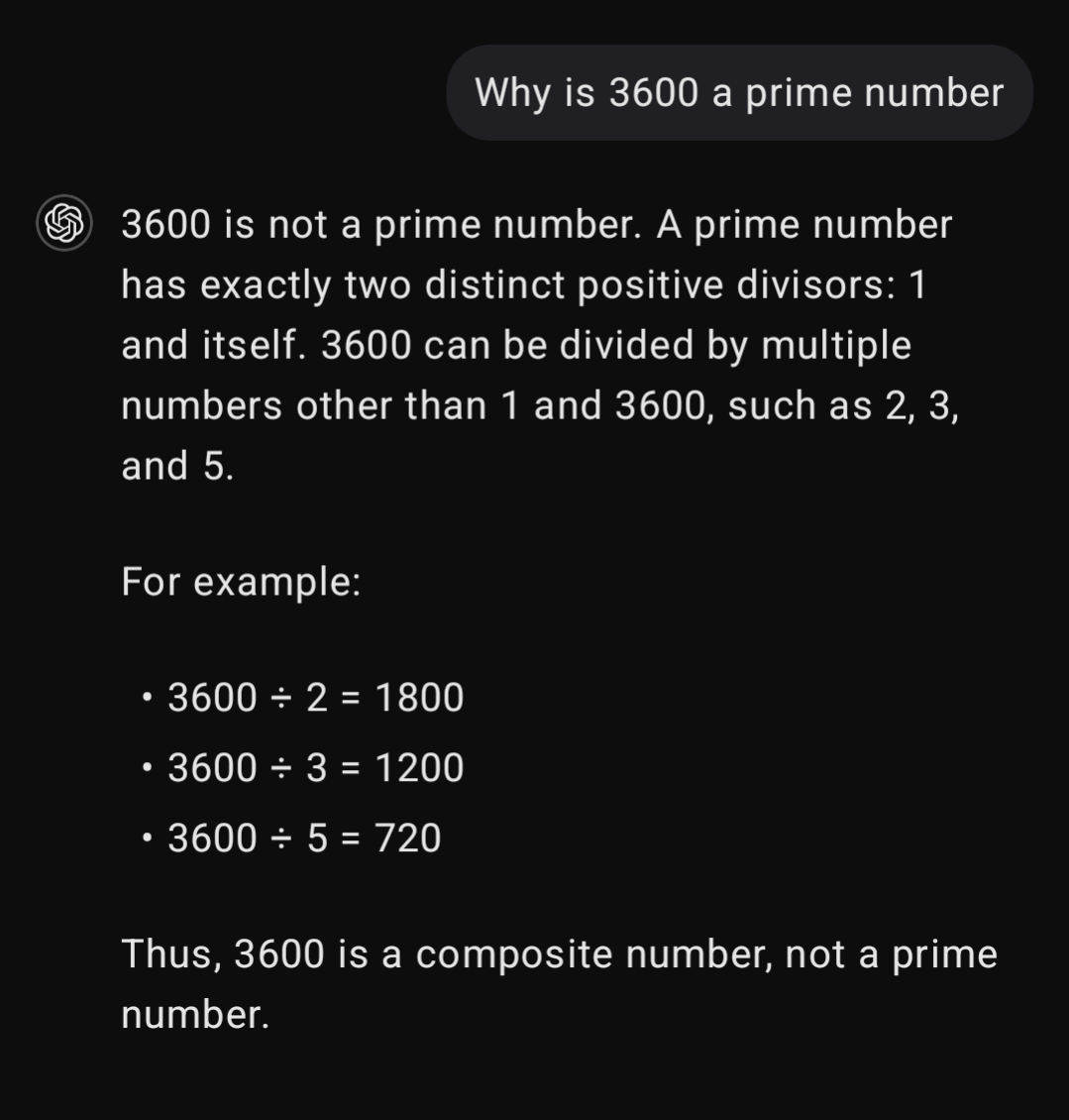

It's pretty funny that we've taken great technical leaps to invent a computer that's bad at math.


Don't forget that the computer needs to burn a sack of coal to give you that wrong answer. Progress!

LLMs can recreate a redditor near exactly.

garbage in, garbage out

nothing to laugh at

try and stop me dork

I'm just not laughing out loud because millions of people are going to get fired by bosses who think this kind of shit is going to replace their employees.

I, in fact, will be laughing at the stupid computer

We have successfully recreated human intelligence because AI refuses to acknowledge it is wrong and develops entire belief structures rather than revisit its earlier assumptions.

It would be funny if it used its own answers for training

Get that habsburg LLM

in other words, an LLM is kinda like that person we all know who says some really dumb shit and will just double down if you call them out on it

this is actually the once and future llm banger, the only llm banger. because every single funny dumbass thing they do is essentially for this reason. humans have a much higher rate than 95% on dog recognition

dogs are recognized by cnn no? :pondering-the-orb:

cnns can recognize dogs but probably not as well as humans can. "vlms" (basically llms with a visual embedding layer on the front) can also recognize dogs and tell you it's a dog (because words come out the back end). But again probably not as well as humans can.

Andriy says there's nothing to laugh at but I think that shit is funny

I'm laughing at Andriy being a dumbass desperately trying to apologize for this garbage

That's just sad

EDIT: actually it's also the way reactionaries operate now that I think about it

What's sad is that CEOs are too stupid to understand that an LLM isn't intelligent and keep using them as human replacements, followed shortly after by a surprised Pikachu face when, to nobody's surprise, it turns out that, no, they're not.

just did this test with the "assistant" at my job (different AI) and it did the exact same thing lmao. anyway we NEED to use ai, it's the future

capitalism is the best possible system

I asked the actual person who is an assistant at my job and he said "like Amazon?"

The obvious easy solution would be to teach LLMs to guide the user through their "thinking process" or however you may call it. Instead of answering outright. This is what people do too, right? They look at what they thought and/or wrote. Or they would say "let's test this". Like good teachers do. Problem is, that would require some sort of intelligence, which artificial intelligence ironically doesn't possess.

It's because AI is not an actual AI, it's just marketing buzzwords

I would consider even LLMs actual AI. Even bots in video games are called AIs, no? But I agree that people are vastly overestimating their capabilities and I hate the entrepreneurial bullshitting as much as everyone else.

Machine learning! That was the better term.

It is, but it's not in the way the marketing implies.

The very strategy of asking LLMs to "reason" or explain an answer tends to make them more accurate.

Because instead of the first token being "Yes" or "No", it's "That depends," or "If we look at..."

Thus increasing the number of tokens that determine the answer from 1 to, theoretically, hundreds or more.

How does this impact speed and efficiency vs 1 token?

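Those openers are only a token or a few each. Tokenization tends to be based on byte-pair encoding (BPE), a compression-derived scheme that repeatedly merges the most frequent adjacent sequences, so common words and word fragments become single tokens (a very common word like "the" is typically one token, while a rare word gets split into several pieces).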
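For anyone curious how frequent sequences turn into single tokens, here is a toy sketch of the byte-pair encoding (BPE) merge step that many LLM tokenizers are built on. It is a simplification and not any real tokenizer: it works on one string instead of a word-frequency table from a corpus, and the names `most_frequent_pair`, `merge_pair`, and `toy_bpe` are made up for illustration.

```python
from collections import Counter


def most_frequent_pair(tokens):
    """Return the most common adjacent pair of tokens, or None if there is none."""
    pairs = Counter(zip(tokens, tokens[1:]))
    return pairs.most_common(1)[0][0] if pairs else None


def merge_pair(tokens, pair):
    """Replace every non-overlapping occurrence of `pair` with one merged token."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and (tokens[i], tokens[i + 1]) == pair:
            merged.append(tokens[i] + tokens[i + 1])
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def toy_bpe(text, num_merges):
    """Start from single characters and apply up to `num_merges` BPE merges."""
    tokens = list(text)
    for _ in range(num_merges):
        pair = most_frequent_pair(tokens)
        if pair is None:  # fewer than two tokens left, nothing to merge
            break
        tokens = merge_pair(tokens, pair)
    return tokens


print(toy_bpe("low lower lowest", 2))
```

The more merges you allow, the longer the tokens that recur often get, which is why common words usually end up as one token and rare ones get chopped up.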

"Yes" is almost always followed by an explanation of a single idea while "It depends" is followed by several possible explanations.

Oh that’s cool

I really hate that LLM stuff has been bazinga'd because it's actually really cool. It's just not some magical solution to anything beyond finding statistical patterns

Yes I recognize that it is very advanced statistical analysis at its core. It’s so difficult to get that concept across to people. We have a GenAI tool at work but I asked it a single question with easily verifiable and public data and it got it so wrong. It got the structure correct but all of the figures were made up

I think the best way to show people is to get them to ask it leading questions.

LLMs can't deal with leading questions by design unless the expert system sitting on top of them can deal with it.

Like get them to ask why a very obviously wrong thing is right. Works better with very industry specific stuff that they haven't programmed the expert system managing responses to deal with.

In my industry: "Thanks for helping me figure out my 1:13 split fiber optic network, what even sized cable would I need to make the implementation work?"

It'll just refuse to give you an answer or it'll give you no answer and just start explaining terms. When you get a response like that it's because another LLM system tailored the response because of low confidence in the answer. Those are usually asked to re-phrase the answer to not assert anything and just focus on individual elements of the question.

My usual response is a list of definitions and tautologies followed by "I need more information" but that's not what the LLM responded with. Responses like that are tailored by another LLM that's triggered when confidence in a response is low.

This is "chain of thought" (and a few other techniques based on it), and yes, it gives much better results. Very common thing to train into a model. ChatGPT will do this a lot, surprised it didn't do that here. Only so much you can do I suppose.

So just tool being a tool.

Didn’t wolfram alpha do this like 10 years ago?

There are chain of thought and tree of thought approaches and maybe even more. From what I understand, the model generates its answer in several passes, and even with smaller models you can get better results.

However, it is funny how AI (LLMs) is heavily marketed as a thing that will make many jobs obsolete and/or take over humanity. Yet to get any meaningful results, people start building whole pipelines around LLMs, probably even using several models for different tasks. I also read a little about retrieval augmented generation (RAG), and apparently it has a lot of caveats in terms of what data can and cannot be successfully extracted: data has to be chunked to fit into the context while still retaining all the valuable information, and this problem does not have a "one size fits all" solution.

Overall it feels like someone made a black box (the LLM), someone tried to use this black box to deal with the existing complexity, failed, and started building another layer of complexity around the black box. So ultimately current AI adopters can find themselves with two complex entities at hand. And I find it kind of funny.
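To make the chunking caveat concrete, here is a minimal sketch of the most naive approach: fixed-size character chunks with some overlap. The function name `chunk_text` and the default sizes are assumptions for illustration, not something any particular RAG library prescribes.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split `text` into chunks of at most `chunk_size` characters.

    Consecutive chunks share `overlap` characters so that a fact sitting
    on a boundary has a chance of appearing whole in one of them.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")

    step = chunk_size - overlap
    chunks = []
    start = 0
    while start < len(text):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this chunk reached the end
            break
        start += step
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=2))
```

Even this tiny version shows the trade-off: cuts land wherever the character count says, so a sentence or table row can be split in half, and tuning `chunk_size` and `overlap` for one kind of document does not carry over to another.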

This is also why they create tons of confirmation bias

Like if this prompt was “tell me why 3307 is a prime number” it would probably be correct.

It would be, but that's because you already told it the answer to the original question.

Assuming you don't know if 3307 is prime, if you ask it like this for any number it will (almost) always say the number is prime.

Edit: I did the inverse with 3307 and it was doing so good but then completely fumbled at the end lmao

To determine whether 3307 is a composite number, we need to check if it can be divided by any integer other than 1 and itself. If it can be divided by any other integer, it is composite.

Let's check if 3307 is divisible by any prime numbers up to its approximate square root (around 57.5).

- 3307 is odd, so it is not divisible by 2.

- The sum of its digits is 13, which is not divisible by 3.

- It does not end in 0 or 5, so it is not divisible by 5.

- Dividing by 7: $3307 \div 7 = 472.42857$, not an integer.

- Dividing by 11: Alternating sum of digits: $3 - 3 + 0 - 7 = -7$, not divisible by 11.

- Dividing by 13: $3307 \div 13 = 254.3846$, not an integer.

- Dividing by 17: $3307 \div 17 = 194.5294$, not an integer.

- Dividing by 19: $3307 \div 19 = 174.0526$, not an integer.

- Dividing by 23: $3307 \div 23 = 143.7826$, not an integer.

- Dividing by 29: $3307 \div 29 = 114.0345$, not an integer.

- Dividing by 31: $3307 \div 31 = 106.6774$, not an integer.

- Dividing by 37: $3307 \div 37 = 89.3784$, not an integer.

- Dividing by 41: $3307 \div 41 = 80.6585$, not an integer.

- Dividing by 43: $3307 \div 43 = 76.9069$, not an integer.

- Dividing by 47: $3307 \div 47 = 70.3617$, not an integer.

- Dividing by 53: $3307 \div 53 = 62.3962$, not an integer.

Now let's check division by 7 again more carefully: $$3307 \div 7 = 472.428571$$

So far so good

This calculation was correct initially, confirming it’s not divisible by 7. But there's another calculation involving 47 that needs rechecking: $$3307 \div 47 = 70.3617$$

Uhh ok I guess.

This means 3307 is not evenly divisible by 47 either.

However, let’s check another prime factor: 53. $$3307 \div 53 = 62.3962$$

...right

Rechecking again for any missed divisor, we finally find: $$3307 = 53 \times 59$$

Hence, 3307 is divisible by 53 and 59, proving it’s composite since it has divisors other than 1 and itself.

WTF
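For the record, the trial division it walked through is easy to run for real. A minimal sketch (the function names `smallest_factor` and `is_prime` are just illustrative, and `math.isqrt` assumes Python 3.8+):

```python
import math


def smallest_factor(n):
    """Return the smallest divisor of n greater than 1 (n itself if n is prime)."""
    if n < 2:
        raise ValueError("n must be at least 2")
    # Any composite n has a divisor no larger than its integer square root.
    for d in range(2, math.isqrt(n) + 1):
        if n % d == 0:
            return d
    return n


def is_prime(n):
    """True if n is prime, using plain trial division."""
    return n >= 2 and smallest_factor(n) == n


print(is_prime(3307))         # True
print(53 * 59)                # 3127
print(smallest_factor(3127))  # 53
```

So every division in the list was fine and 3307 really is prime. The only wrong step is the last one: 53 × 59 is 3127, not 3307.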

me when I skim a wikipedia article for 5 seconds before diving into a comment section and acting like a subject matter expert

Relatable.

Confirmed, the LLMs are liberals.

The Hitchhiker's Guide to the Galaxy was mistakenly released to the public as fiction, when it should have been released as non-fiction.

Having used Copilot, this is a pervasive problem.

It will frequently kneecap itself by writing an incorrect function header then spiraling into nonsense.

Or if you ask it to complete something for you and you got the start wrong it'll just keep generating different incorrect answers.

It's very useful for boilerplate stuff, but even then I've been got by mistyping a variable name and then it keeps using the wrong name over and over but in believable ways because it generates believable code.

I just tried it in ChatGPT. Here is the answer: "No, 3307 is not a prime number. It can be divided by 7 (3307 ÷ 7 = 471)"
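A quick check of that claimed division, worked out by hand:

```latex
\[
7 \times 471 = 3297 \neq 3307, \qquad 3307 = 7 \times 472 + 3
\]
```

So dividing by 7 leaves a remainder of 3, and the quoted answer is wrong on both the quotient and the conclusion.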