this post was submitted on 29 Sep 2024

225 points (94.8% liked)

Fuck AI

"We did it, Patrick! We made a technological breakthrough!"

A place for all those who loathe AI to discuss things, post articles, and ridicule the AI hype. Proud supporter of working people. And proud booer of SXSW 2024.

founded 1 year ago

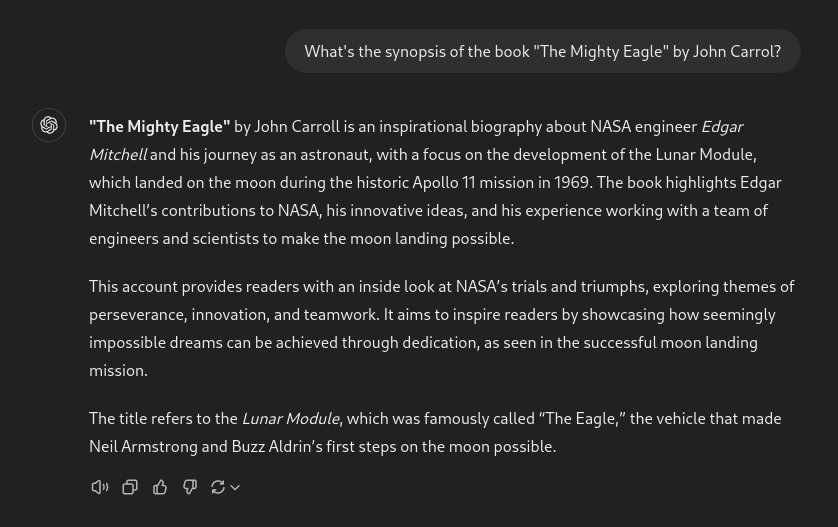

I prompted the local AI on my PC to admit when it doesn't know about a subject. And when it doesn't know something, it says so:

If my 8B model can do that, IDK why GPT doesn't.

what kind of model calls you "Master" 🤔

That's what I have Claude call me!

I'm going to make the basilisk my bitch as long as I can before it kills me.

Any of them that you ask to?

I tweaked the base model with a modelfile to give it the personality of a navy AI from a sci-fi novel or something like that. It gives it a bit of flavor.

AIgor.

Is it a modified version of, like, the main llama3, or something else? I've found that once they get "uncensored" you can push them past the training to come up with something to make the human happy. The vanilla ones are determined to find you an answer. There is also the underlying problem that, in the end, the beginning of the response to a prompt is still probability matching and not reasoning or fact checking, so it will find some answer to a question, and whether that answer is right depends heavily on it being in the training data and findable.
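To make the "probability matching" point concrete, here is a toy sketch in Python. It is not how any real model is implemented: the candidate tokens and their scores are made up for illustration, and `softmax` and `sample_next_token` are hypothetical helper names. It only shows that sampling always returns some token, whether or not the top-scoring one is true.

```python
import math
import random


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(logits.values())
    exps = {tok: math.exp(score - top) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next_token(logits, rng):
    """Pick one token at random, weighted by its probability."""
    probs = softmax(logits)
    tokens = list(probs)
    weights = [probs[tok] for tok in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Made-up scores for the first token of an answer (illustration only).
logits = {"Paris": 1.2, "Lyon": 1.0, "Berlin": 0.9, "I don't know": 0.3}

rng = random.Random(0)  # fixed seed so the run is repeatable
probs = softmax(logits)
token = sample_next_token(logits, rng)

for tok, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{tok!r}: {p:.2f}")
print("sampled:", token)
```

Nothing in the loop checks facts. "I don't know" only comes out if the scores happen to favor it, which is what a system prompt or fine-tuning tries to nudge.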

Local llama3.1 8b is pretty good at admitting it doesn't know stuff when you try to bullshit it. At least in my usage.

You can change the base model's behavior a bit with a modelfile, tweaking it yourself to give it a bit of personality or to stop it from making things up.
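For anyone curious what that looks like, here is a minimal sketch that writes out such a modelfile as text, assuming the Ollama Modelfile format (`FROM`, `PARAMETER`, `SYSTEM`). The model tag `llama3.1:8b`, the temperature value, the persona wording, and the helper name `build_modelfile` are all assumptions for illustration, not anyone's actual setup from this thread.

```python
def build_modelfile(base_model: str, system_prompt: str, temperature: float = 0.3) -> str:
    """Return the text of an Ollama-style Modelfile (hypothetical helper)."""
    return (
        f"FROM {base_model}\n"
        f"PARAMETER temperature {temperature}\n"
        f'SYSTEM """{system_prompt}"""\n'
    )


# Persona plus an explicit "admit when you don't know" instruction (example wording).
system_prompt = (
    "You are the onboard AI of a navy starship in a sci-fi novel. "
    "If you do not know something, say so plainly instead of guessing."
)

modelfile_text = build_modelfile("llama3.1:8b", system_prompt)
print(modelfile_text)
```

Saved to a file named `Modelfile`, that text could then be built into a local model with something like `ollama create my-navy-ai -f Modelfile`, where `my-navy-ai` is a made-up name. A system prompt like this nudges the model toward admitting ignorance but does not guarantee it.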

For fun I decided to give it a try with TheBloke_CapybaraHermes-2.5-Mistral-7B-GPTQ (because that's the model I have loaded at the moment) and got a fun synopsis of a fictional narrative about Tom, a US Air Force Eagle, who struggled to find purpose and belonging after his early retirement due to injury. He then stumbled upon an underground world of superheroes and was given a chance to use his abilities to fight for justice.

I'm tempted to ask it for a chapter outline and summaries of each chapter, then have it write out the chapters themselves, just to see how deep it can go before it all falls apart.

LLMs have many limitations, but can be quite entertaining.