this post was submitted on 27 Dec 2023

1284 points (95.9% liked)

Microblog Memes

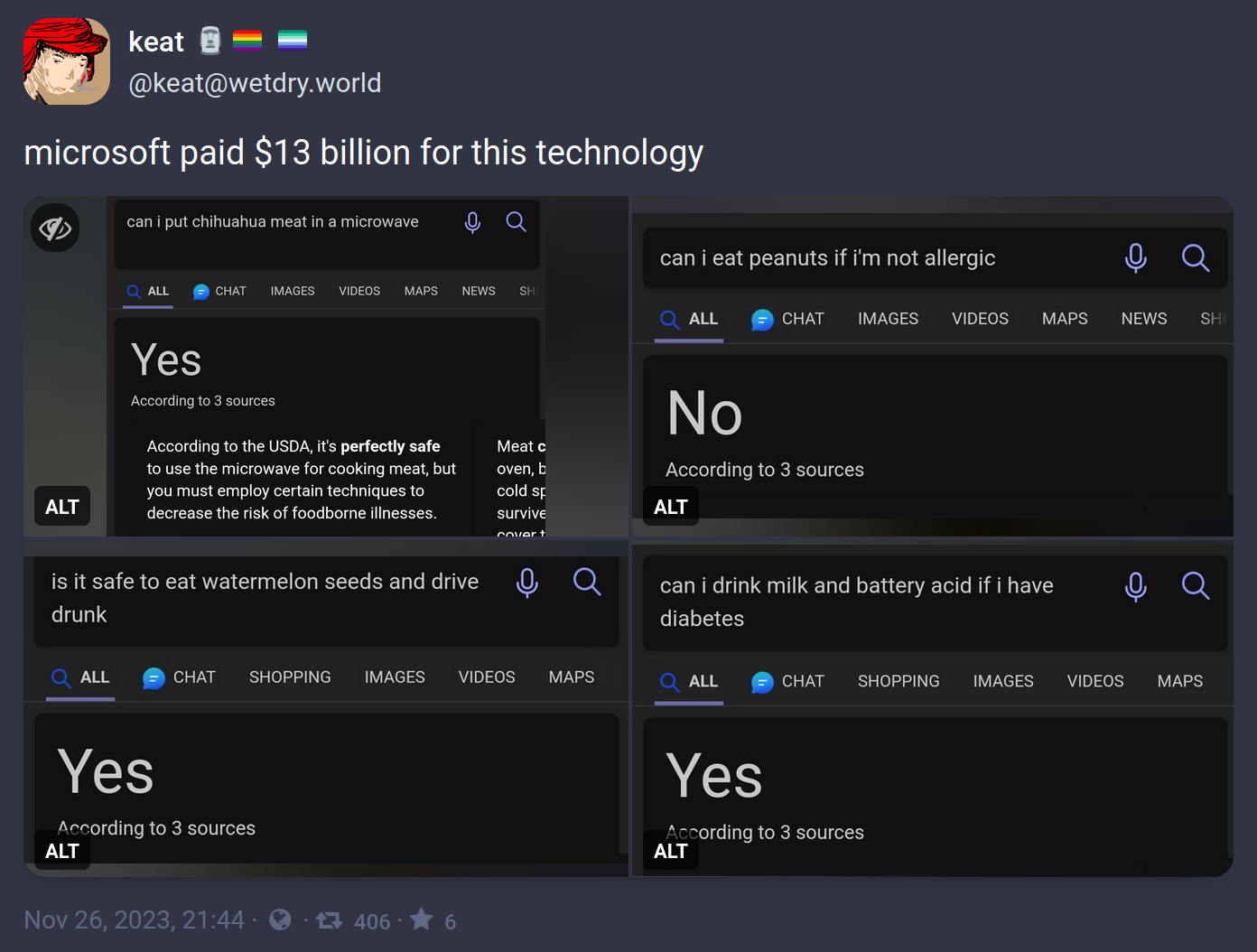

I just ran this search and got a very different result (on the right of the page, which seems to be the generated answer).

So is this fake?

The post is from a month ago, and the screenshots are at least that old. Even if Microsoft didn't see this or a similar post and immediately address these specific examples, a month is a pretty long time in machine learning right now and this looks like something fine-tuning would help address.

I guess so. It's a fair assumption.

The chat bar on the side has been there since way before November 2023, the date of this post. They just chose to ignore it to make a funny.

It's not 'fake' as much as misconstrued.

OP thinks the answers come from the GPT-4 model Microsoft licenses.

They're not.

These results are from an internal search summarization tool that predated the OpenAI deal.

The GPT-4 responses show up in the chat window, like in your screenshot, and don't get these examples wrong.