So the problem isn't the technology. The problem is unethical big corporations.

Disagree. The technology will never yield AGI as all it does is remix a huge field of data without even knowing what that data functionally says.

All it can do now and ever will do is destroy the environment by using oodles of energy, just so some fucker can generate a boring big titty goth pinup with weird hands and weirder feet. Feeding it exponentially more energy will do what? Reduce the number of fingers and the foot weirdness? Great. That is so worth squandering our dwindling resources on.

> Disagree. The technology will never yield AGI as all it does is remix a huge field of data without even knowing what that data functionally says.

We definitely don't need AGI for AI technologies to be useful. AI, particularly reinforcement learning, is great for teaching robots to do complex tasks, for example. LLMs have a shocking ability relative to other approaches (if limited compared to humans) to generalize to "nearby but different enough" tasks. And once they're trained (and possibly quantized), they (LLMs and reinforcement learning policies) don't require that much more power to run compared to traditional algorithms. So IMO, the question should be "is it worthwhile to spend the energy to train X thing?" Unfortunately, the capitalists have been the ones answering that question because they can do so at our expense.

For a person without access to big computing resources (me lol), there's also the fact that transfer learning is possible for both LLMs and reinforcement learning. Easiest way to explain transfer learning is this: imagine that I want to learn Engineering, Physics, Chemistry, and Computer Science. What should I learn first so that each subject is easy for me to pick up? My answer would be Math. So in AI speak, if we spend a ton of energy to train an AI to do math and then fine-tune agents to do Physics, Engineering, etc., we can avoid training all the agents from scratch. Fine-tuning can typically be done on "normal" computers with FOSS tools.
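To make the "learn Math first" idea concrete, here's a toy sketch. It is plain linear regression, not an LLM or an RL policy, and the task weights, step counts, and function names are all made up for illustration. It only shows the general shape of the argument: starting from weights trained on a related task gets you much closer in a few cheap steps than starting from zero.

```python
from itertools import product

def predict(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def mse(w, data):
    return sum((predict(w, x) - y) ** 2 for x, y in data) / len(data)

def train(w, data, steps, lr=0.1):
    """Plain full-batch gradient descent on mean squared error."""
    w = list(w)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in data:
            err = predict(w, x) - y
            for j, xj in enumerate(x):
                grad[j] += 2 * err * xj / len(data)
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w

def make_task(true_w):
    """Noise-free data on a small fixed grid of inputs (27 points in 3D)."""
    inputs = list(product((-1.0, 0.0, 1.0), repeat=len(true_w)))
    return [(x, predict(true_w, x)) for x in inputs]

# Hypothetical tasks: the "new" task is a slightly shifted version of the base one.
base_w = [2.0, -1.0, 0.5]   # stands in for "Math"
new_w = [2.1, -0.9, 0.6]    # stands in for "Physics"

base_data = make_task(base_w)
new_data = make_task(new_w)

# Expensive step, done once: many updates on the base task.
pretrained = train([0.0, 0.0, 0.0], base_data, steps=200)

# Cheap step: the same tiny budget of 3 updates, from two different starting points.
fine_tuned = train(pretrained, new_data, steps=3)
from_scratch = train([0.0, 0.0, 0.0], new_data, steps=3)

loss_fine_tuned = mse(fine_tuned, new_data)
loss_from_scratch = mse(from_scratch, new_data)
print("fine-tuned loss:  ", loss_fine_tuned)
print("from-scratch loss:", loss_from_scratch)
```

With the same three updates, the fine-tuned weights end up with a far smaller error on the new task than the from-scratch ones, because most of the work was already paid for during pretraining. Real fine-tuning is much messier than this, so treat it as an analogy and nothing more.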

> all it does is remix a huge field of data without even knowing what that data functionally says.

IMO that can be an incredibly useful approach for solving problems whose dynamics are too complex to reasonably model, with the understanding that the obtained solution is a crude approximation to the underlying dynamics.

Personally, I'm waiting for the bubble to burst so that AI can be just another tool in my engineering toolkit instead of the capitalists' newest plaything.

Sorry about the essay, but I really think that AI tools have a huge potential to make life better for us all, but obviously a much greater potential for capitalists to destroy us all so long as we don't understand these tools and use them against the powerful.

depends. for "AI" "art" the problem is both terms are lies. there is no intelligence and there is no art.

Technology is a cultural creation, not a magic box outside of its circumstances. "The problem isn't the technology, it's the creators, users, and perpetuators" is tautological.

And, importantly, the purpose of a system is what it does.

Always has been

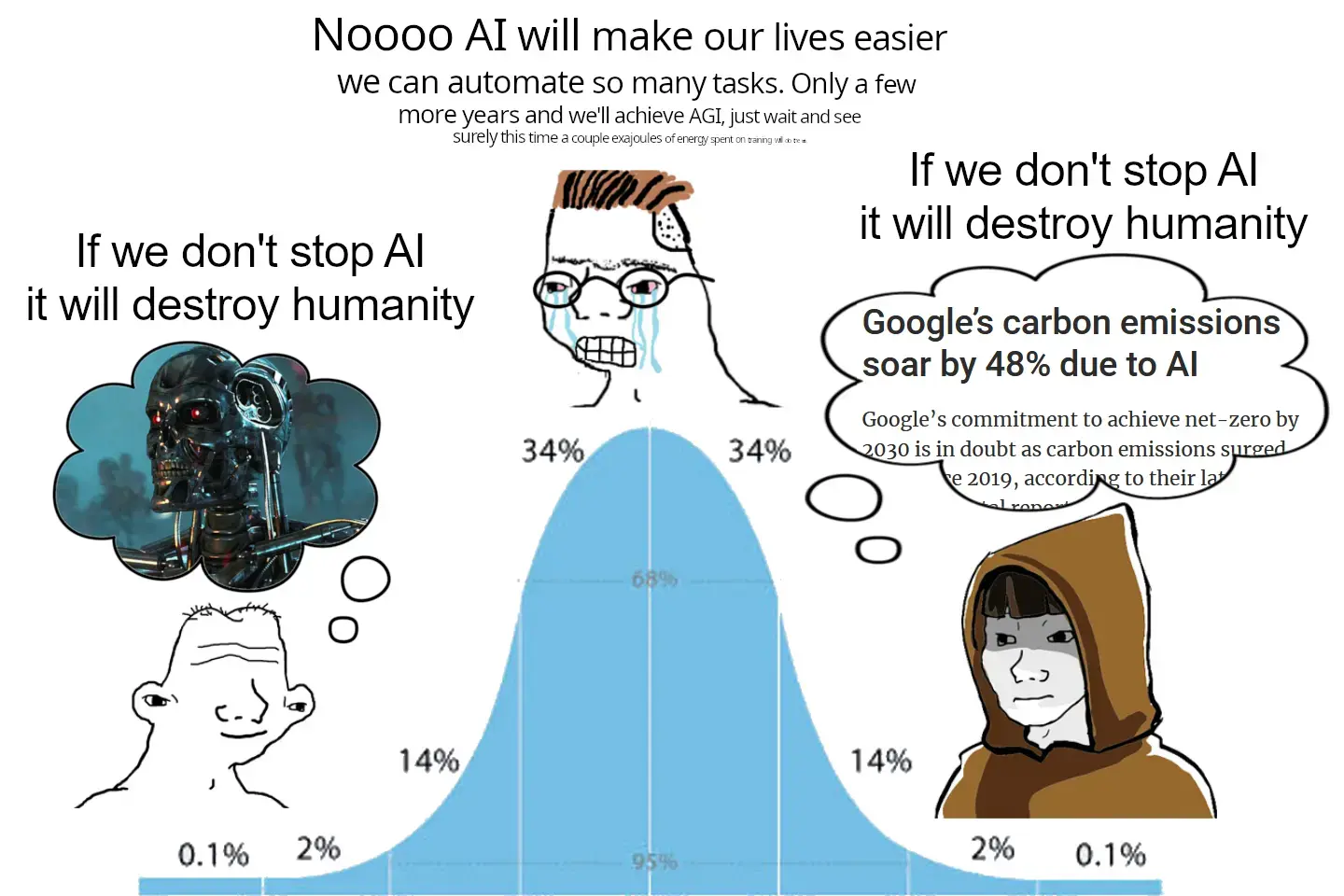

It's been a while since I've seen this meme template being used correctly

Turns out, most people think their stupid views are actually genius

The root problem is capitalism though. If it weren't AI, it would be some other idiotic scheme like cryptocurrency wasting energy instead. The problem is with the system as opposed to the technology.

But what if we use AI in robots and have them go out with giant vacuums to suck up all the bad gasses?

My climate change solution consultation services are available for hire anytime.

Careful! Last time I sarcastically posted a stupid AI idea, within minutes a bunch of venture capitalists tracked me down, broke down my door and threw money at me non stop for hours.

Robots figuring out that without humans releasing gas their job is a lot more efficient could cause a few problems.

Don't worry, they will figure out that without humans releasing gasses they have no purpose, so they will cull most of the human population but keep just enough to justify their existence to manage it.

Although you don't need AI to figure that one out. Just look at the relationships between the US intelligence and military and "terrorist groups".

It’s wild how we went from…

Critics: “Crypto is an energy hog and its main use case is a convoluted pyramid scheme”

Boosters: “Bro trust me bro, there are legit use cases and energy consumption has already been reduced in several prototype implementations”

…to…

Critics: “AI is an energy hog and its main use case is a convoluted labor exploitation scheme”

Boosters: “Bro trust me bro, there are legit use cases and energy consumption has already been reduced in several prototype implementations”

They're not really comparable. Crypto and blockchain were good solutions looking for problems to solve. They're innovative and cool? Sure, but they never had wide-scale use. AI has been around for a while, it just recently got rebranded as artificial intelligence; the same technologies were called algorithms a few years ago... And they basically run the internet and the global economy. Hospitals, schools, corporations, governments, militaries, etc. all use them. Maybe certain uses of AI are dumb, but pretending that the thing as a whole doesn't have genuine uses, when it already does, is just dumb.

Stupid AI will destroy humanity. But the important thing to remember is that for a brief, shining moment, profit will be made.

This conveniently ignores the progress being made with smaller and smaller models in the open source community.

As with literally every technical advance, the tech itself is not the problem, capitalism's use of it is.

Nowadays you can actually get a semi-decent chatbot working on an N100 that consumes next to nothing even at full load.

In what sense does a small community working with open-weight (note: rarely if ever open-source) LLMs have any mitigating impact on the rampant carbon emissions for the sake of bullshit generators?

And all for some drunken answers and a few new memes

In my country this kind of AI is being used to find tax fraud more efficiently and to create chatbots that help users understand taxes. Thanks to the much more reliable and limited training set, these chatbots do not hallucinate and can provide clear sources for the information given.

Personally I think AI systems will kill us dead simply by having no idea what to do, with dodgy old coots thinking machines are magic and know everything, when in reality machines can barely approximate what we tell them to do and base their information on this terrible approximation.

Machines will do exactly what you tell them to do, which is the cause of many software bugs. That’s kind of the problem: no matter how elegant the algorithm, fuzzy goes in, fuzzy comes out. It was clear this very basic principle was not even considered when Google started telling people to eat rocks and glue. You can’t patch special cases out when they are so poorly understood.

Not only the pollution.

It has triggered an economic race to the bottom for any industry that can incorporate it. Employers will be forced to replace more workers with AI to keep prices competitive. And that is a lot of industries, especially if AI continues its growth.

The result is a lot of unemployment, which means an economic slowdown due to a lack of discretionary spending, which is a feedback loop.

There are only 3 outcomes I can imagine:

- AI fizzles out. It can't maintain its advancement enough to impress execs.

- An unimaginable wealth disparity and probably a return to something like feudalism.

- Social revolution where AI is taken out of the hands of owners and placed into the hands of workers. Would require changes that we'd consider radically socialist now, like UBI and strong af social safety nets.

The second seems more likely than the third, and I consider that more or less a destruction of humanity

I don't like to use relative numbers to illustrate the increase. 48% can be minuscule or enormous depending on last year's emissions.

While I don't think the increase is minuscule, it's still an unnecessary ambiguity.
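A quick bit of arithmetic shows the ambiguity. The two baselines below are invented purely for illustration and aren't anyone's real emissions; the function name is made up too.

```python
def absolute_increase(baseline_tco2e, percent):
    """Absolute change implied by a relative increase over a baseline."""
    return baseline_tco2e * percent / 100

# Hypothetical baselines in tCO2e, not real emitters.
for baseline in (1_000, 10_000_000):
    extra = absolute_increase(baseline, 48)
    print(f"{baseline:>12,} tCO2e baseline, +48% = +{extra:,.0f} tCO2e")
```

Same percentage, wildly different absolute amounts, which is why the percentage alone doesn't tell you much.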

The relative number here might be more useful as long as it's understood that Google already has significant emissions. It's also sufficient to convey that they're headed in the wrong direction relative to their goal of net zero. A number like 14.3 million tCO₂e isn't as clear IMO.

There are some pretty smart/knowledgeable people in the left camp

https://www.youtube.com/watch?v=2ziuPUeewK0

wait until the curveless anon comes in