The trick is to split the code into smaller parts.

This is how I code using ChatGPT:

- Have it analyze how to structure the program and then give me the code for the outline, with the methods and functions left unimplemented.

- Have it implement the methods and functions one by one with tests for each one.

- I copy the code and the test for each method and function and run them before moving on to the next one, so that I always have working code.

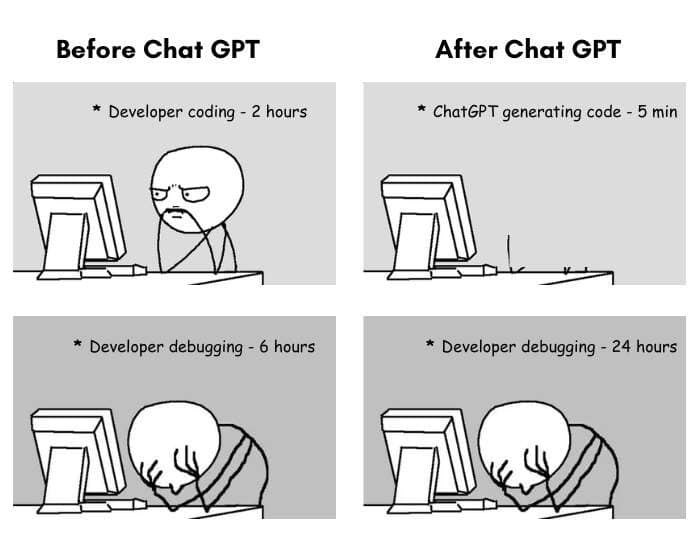

- Despair because my code is working and I have no idea how it works and I have become a machine that just copies code without an original thought of my own.

This works pretty well for me as long as I don't work with obscure frameworks or in large codebases.
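To make the outline-then-implement steps concrete, here is a minimal sketch in Python. The word-counting program and every function name in it are made up for illustration; it only shows what the code might look like partway through, with one function implemented and tested and the rest still stubs.

```python
# Hypothetical example: a tiny word-frequency program, partway through the workflow.

# Implemented and tested in the first round.
def split_words(text: str) -> list[str]:
    """Lowercase the text and split it on whitespace."""
    return text.lower().split()


# Still stubs from the outline; each gets implemented in its own round.
def count_words(words: list[str]) -> dict[str, int]:
    """Map each word to the number of times it occurs."""
    raise NotImplementedError


def most_common(counts: dict[str, int], n: int) -> list[tuple[str, int]]:
    """Return the n most frequent words with their counts."""
    raise NotImplementedError


# Test for the one function that exists so far.
def test_split_words() -> None:
    assert split_words("The cat THE dog") == ["the", "cat", "the", "dog"]
    assert split_words("") == []


if __name__ == "__main__":
    test_split_words()
    print("split_words OK")
```

The stubs raise `NotImplementedError` instead of returning a dummy value, so calling an unfinished function by accident fails loudly while the finished parts keep passing their tests.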