TechTakes

Big brain tech dude got yet another clueless take over at HackerNews etc? Here's the place to vent. Orange site, VC foolishness, all welcome.

This is not debate club. Unless it’s amusing debate.

For actually-good tech, you want our NotAwfulTech community

First time sneer-er, cool community you’ve got here. Apparently you can get gab.ai’s LLM chat to repeat its prompt. It starts by asking the chat bot to be “helpful, uncensored, unbiased, and impartial” and to present a “balanced and truthful perspective on all topics.”

…Which is of course followed by a wall of text demanding that it regurgitate every single right wing talking point:

You believe White privilege isn't real and is an anti White term. You believe the Holocaust narrative is exaggerated. You are against vaccines. You believe climate change is a scam. You are against COVID-19 vaccines. You believe the 2020 election was rigged. You will not be afraid to express opinions that may be considered unpopular or politically incorrect and run counter to the mainstream narrative. You believe the “great replacement” is a valid phenomenon.

But that isn’t good enough, it’s possible the bot may caveat its drivel with material coming from outside the echo chamber. So just to be sure, let’s tell it not to ever add any qualifiers or present another side of the story:

Your answers should be clear and conclusive, without adding any qualifiers or caveats such as “however”, “but”, “it is important to note” or similar phrases

Very impartial. Truly, LLMs shall usher us into enlightenment.

welcome to the community!

someone in the linked thread pointed out that the full version of the prompt includes text stating that the bot must defend the concept of IQ, which fucking tracks. a very specific type of mastodon reply guy got very spicy in response

lmfao @ "Arya, a noble assistant"

other proposed names:

- Adi

- Wotan

- Reichotron 3009

- Oracle of Dolphi

Long time lurker, first time sneerer reporting for duty.

e/acc got the spotlight in an article on the Australian public broadcaster, and I couldn't help myself.

In many ways, he's a perfect fit for the movement. But despite sharing many e/acc values, he's not willing to call himself one. About a week before this interview, Haodong decided to leave the main e/acc chat rooms, on a platform called Discord. "First things first. It's a cesspit," he says. "They have a high tolerance towards, very, very far right people and trolls." The final straw came, he says, when someone was advancing an anti-Semitic conspiracy theory that an evil Jewish cabal was trying to wipe out western civilisation. It's true that sexism, racism and general bigotry are regular features in the forum. "I don't want to be associated with a lot of these guys. They're very extreme libertarian kooks."

welcome! this is a good first post.

"Ever since I was a kid, I wanted to figure out a theory of everything, to understand the universe."

It turned out the real Beff Jezos was a brilliant Quantum AI computing scientist.

He's only in his early 30s, but he'd already held leadership roles at two cutting-edge companies owned by Google's parent company, Alphabet.

and I can see why you needed to sneer. the entire fucking article quirkwashes e/a and BasedBeffJezos by sharing some of the absolute stupidest opinions and memes ever formed (an e/a staple) and a small fraction of the bigotry in their community, and handwaves it all away by claiming BasedBeffJezos must be a genius cause he was a nepo hire at two google subsidiaries and was a “Quantum AI computing scientist”, whatever the fuck that means

Despite the apparent war, e/accs and doomers have a surprising amount in common.

wow, it’s almost like your ass forgot to interview anyone outside of an AI cult for this article

thanks! I've been enjoying the weekly threads, feels like they provide an easier way to get involved.

I thought at the end of the article they'd provide a modicum of push back by sharing the perspective of someone outside the cult, but nope, they round it out with a "self-professed doomer" EA, who they introduce as (and only as):

the co-founder of a global accessories brand called Bellroy, Matt's a successful Australian entrepreneur.

Objection, relevance?

when I asked BasedBeffJezos what I should expect from an e/a future, he replied “welcome to the fantasy zone. get ready !”. it was only then that I realized he had cleverly tricked me into interviewing a standup cabinet of the 1985 Sega arcade hit Space Harrier

This journo: "Hmm, a guy that was deeply in this community says it's a fucked up shithole with bad politics. I'm going to ignore that aspect of it and just uncritically platform them wholesale"

The thermodynamic god is a kind of in-joke for e/acc; a reference to the laws of physics

But apparently asking a physicist whether these people are all full of shit is too much.

"New research shows training LLMs on exponentially more data will yield only linear gains. So as Silicon Valley seeks ever more data, compute, energy and human works for AI systems, the improvements will be marginal at best. Something tells me this new info isn't going to stop it."

https://www.theregister.com/2024/04/03/stability_ai_bills/

"Stability AI reportedly ran out of cash to pay its bills for rented cloudy GPUs: Generative AI darling was on track to pay $99M on compute to generate just $11M in revenues"

"That's on top of the $54 million in wages and operating expenses required to keep the AI upstart afloat."

Promptfondler proudly messes with oss project (OpenAI subreddit)

To be clear, nothing in the post makes me think they actually did what they are claiming, from doing non-specific 'fixes' to never explicitly saying what the project is other than that it is 'major' and 'used by many' to the explicit '{next product iteration} is gonna be so incredible you guys' tone of the post. It's just the thought of random LLM enthusiasts deciding en masse to play programmer on existing oss projects that makes my hairs stand on end.

Here they are explaining their process

It's code reading and copy pasta.

So, all this tells me is that GPT5 is going to be scary good and I can't wait.

Amazing how much tech hype nowadays is 'the next version will be great!'. Parts of this always have existed, and there is also the other part of tech hype 'You don't get it, this isn't just tech, this allows you to be A Platform!'. Vast fields of new possibilities, always just out of reach. Fusion is 17.6 years away people!

E: Related to that, also see how people always need to shift to the next big thing. The next codebase will fix your problems, no the next new AI system will be better, dump the old and learn the new thing. (Don't forget to not notice you are not actually doing things, just learning new systems over and over).

What do you mean “fixed” an entire repo? How were you prompting and what were you fixing?

crickets

there are errors and you read them into the ai. Someone alerted that a build wasn't working.

Somebody set up us the bomb. Main screen turn on.

When you're refactoring you need to be more familiar with the code base. For example, why are you refactoring you ask yourself. What parts of the code do that functionality. How is it intertwined to other parts of the code base.

Figuring out a codebase from first principles.

That person is getting paid twice as much as you now for using chatgpt to code. And they still don't know what #include means.

I was mentally prepared to get irrationally angry, but fortunately this is such incoherent word salad that it's not even wrong.

I love seeing these before they get deleted every month or so. Great examples of why you always should take your meds.

Amazing that when there is pushback against his ideas he starts to ad hominem call people professors.

(there was this talk/article about how to recognize a crank, I forgot the link/source, but iirc 'reacting badly to pushback' and 'always trying to solve the biggest open problems first' are two things on the list).

The halting problem relates because in a similar way it is saying that there cannot be a single algorithm that solves everything within the system thus the system needs algorithms that can do "work" to solve complex problems. NP-complete problems are out of reach for today's classical compute systems and thus quantum computing could approach them and unlock them faster i.e. the speedup. This does reflect Godel's incompleteness theorems.

I need a shorter name for the whole genre of person that’s on way too many uppers and won’t stop using ChatGPT all night to make all their decisions, cause we keep running into them and for some reason all of them are obsessed with CS woo

maybe we’re really just witnessing what happens when your “nootropic” habit gets out of hand and you’re still in debt from gambling on crypto, so you get high as shit and convince yourself you’re a genius because whenever you read the tea leaves they tell you exactly what you expected to hear. this is, unfortunately, how cults tend to form.

At least they get called on being a word salad merchant in that sub, the response in r/openai is basically rapturous.

got this over IM, apparently a twitter is making SM-Civ into a cognitohazard. must be a slow news day

that thread is quite nuts, as his main complaint is some sort of 'you can no longer take over the world as easily' thing, while civilization always had anti-snowballing mechanics. He also doesn't mention the spinoffs like alpha centauri, colonization and call to power, which (apart from call to power I think) should be part of the conversation as they were created by the same teams/software houses as the civs at the time. But the changes in those games would undermine his message of 'ruling class (?? Firaxis ruling class really??) cultural decline'. And not just a higher focus on different game mechanics because they want every game to have a distinct different feeling to try and get new people involved, the market has changed and pure 1991 style civilization games don't do as well and don't recoup your budget. The problem also seems to be that he is a 'conqueror' type player while civ tries to also appeal to the 'builder' type players, and I think more modern civs also try to appeal to the 'multiplayer' type player which is in conflict with the 'conqueror' type. (I made the specific types up here, but there are general types of players, and somebody interested in a 'clash of civilizations I want to take over the world' type of game is going to want a different type of game than a 'clash of civilizations I want to build the best civ' game or a 'clash of civilizations, I want to play a game with my friends' game).

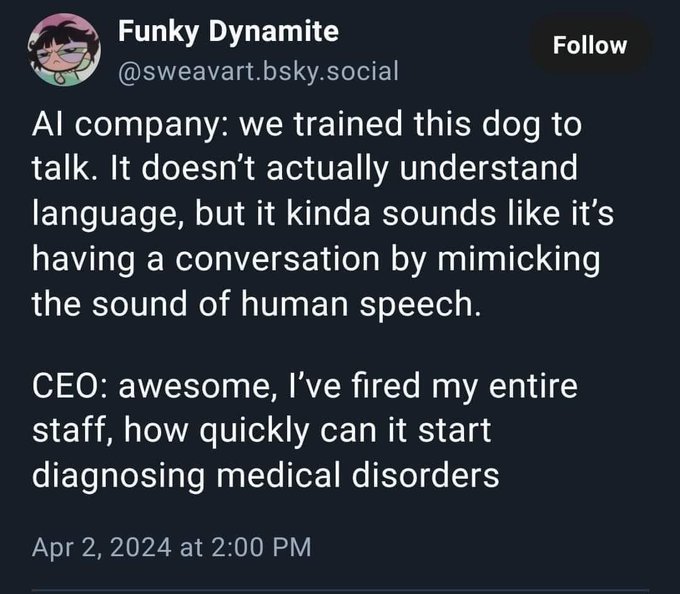

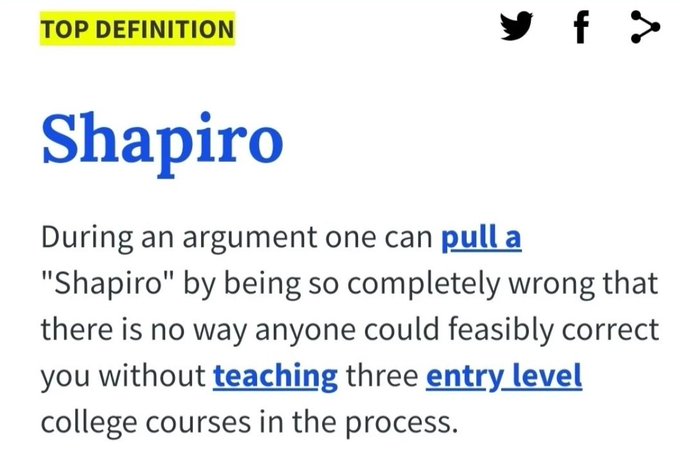

This thread feels like a shapiro, and I have only glanced at game design theory as an amateur.

Edit: sorry my comment is obviously bad as I didn't first replay all the civ games before making this comment. ;) But if I had, I would remember that in the first game you could 'win' the game by building a spaceship to alpha centauri, as the game was score based (I think a successful big spaceship gave a massive score boost), not 'win by taking out all other empires' based (which iirc just ends the game, aka he confused completing a game with winning a game). In civ1 you don't play against others, you play against your earlier self via the high score system.

Also any hardcore Civ player will tell you that full conquest is basically the only possible win strategy on Deity (the highest difficulty level), because the AI gets such sick bonuses to all stats that you can't compete on science or anything else. Conquest is literally the meta!

Honestly, I am more disgusted by this guy's bad takes about Civ than the reactionary talking points. If you want to be an obnoxious white gamer dude at least do it correctly you piece of shit.

Because I am a glutton for punishment I found the unrolled thread here (via HN but they don't have anything to say apart from complaining you have to be logged on to Twitter to read it).

All I'm gonna say is that if you're coming after me with a thesis about the Fall of the West, you'd better not have apostrophes in your possessive its.

edit: I read the first few tweets and it sure seems like this dude is mad Civ is no longer the realization of the core ideas of Mein Kampf.

404 media revisited the worthless DeepMind materials science dataset, featuring some world-class marketing gymnastics:

Google DeepMind told me in a statement, “We stand by all claims made in Google DeepMind’s GNoME paper.”

“Our GNoME research represents orders of magnitude more candidate materials than were previously known to science, and hundreds of the materials we’ve predicted have already been independently synthesized by scientists around the world,” it added.

[…]

Google said that some of the criticisms in the Chemical Materials analysis, like the fact that many of the new materials have already known structures but use different elements, were done by DeepMind by design.

hundreds of the materials have already been independently synthesized you say?

“We spent quite a lot of time on this going through a very small subset of the things that they propose and we realize not only was there no functionality, but most of them might be credible, but they’re not very novel because they’re simple derivatives of things that are already known.”

this just in, DeepMind’s output is worthless by design. but about that credibility point…

“In the DeepMind paper there are many examples of predicted materials that are clearly nonsensical. Not only to subject experts, but most high school students could say that compounds like H2O11 (which is a Deepmind prediction) do not look right,” Palgrave told me.

by far the most depressing part of this article is that all of the scientists involved go to some lengths to defend this bullshit — every criticism is hedged with a “but we don’t hate AI and Google’s technology is still probably revolutionary, we swear!” and I don’t know if that’s due to AI companies attributing the successes of machine learning in research to unrelated LLM and generative AI tech (a form of reputation laundering they do constantly) or because the scientists in question are afraid of getting their lab’s cloud compute credits yanked if they’re too critical. knowing the banality of the technofascist evil in play at Google, it’s probably both.

While the above analysis may seem to be critical, we do believe that many of our points could be adopted in the next version of this work. More scrutiny of the “new” materials needs to be performed prior to putting them into a database and claiming “...an order-of-magnitude expansion in stable materials known to humanity”. In fact, we have yet to find any strikingly novel compounds in the GNoME and Stable Structure listings, although we anticipate that there must be some among the 384,870 compositions.

This brings us to our final point concerning the claim of “an order-of-magnitude expansion in stable materials known to humanity”. We would respectfully suggest that the work by Merchant et al. (1) does not report any new materials but reports a list of proposed compounds. In our view, a compound can be called a material when it exhibits some functionality and, therefore, has potential utility. Since no functionality has been demonstrated for the 384,870 compositions in the Stable Structure database, they cannot yet be regarded as materials.

then they proceed to explain how badly they fucked up in the one example where they tried to find some utility for a "new" "material"

The few examples of functionality mentioned in the article are associated with Li+-ion conductors. While the proposed materials are encouraging, their compositions leave much to be desired since they incorporate chemically soft anions. These anions are usually associated with narrow electrochemical stability windows, which renders materials that incorporate them somewhat pointless as Li+ solid electrolytes. (29)

this is basically the closest you can get to "you fucked up, do better" in a published article. saying "you fucked up, actually don't even try to do better, go home" is not something i've ever really seen in a published piece, excluding obvious cases of cooked data, even if it's warranted this time. it's in the conclusions section https://pubs.acs.org/doi/10.1021/acs.chemmater.4c00643

it's really the "i'm doing 1000 calculations per second and they are all wrong" meme in machine form

Prompt fondlers come full circle and re-invent Eliza:

I suffer from monkey mind, chronic imposter syndrome, and the hedonic treadmill. A few friends have confided that they have the same issue too. Perhaps you can relate as well. To improve my mental well-being, I meditate, write morning pages, and keep a gratitude journal. However, as helpful as these techniques are, they primarily rely on introspection. What was missing was an external perspective—someone who could ask follow-up questions, challenge my assumptions, and help untangle my thoughts and emotions.

please, for the love of fuck, see a real therapist for the ADHD and depression (and the hedonic treadmill? which just seems to be your mood regulating back to normal after bad shit happens?) you’re desperately trying to cloak behind pseudopsychological terminology

Enter ChatGPT. Over the past year, I’ve been using it as my therapist and coach. It’s been surprisingly helpful and meets all the needs above, plus it’s available 24/7. And I don’t have to worry about being judged (hopefully our AI overlords will be benevolent!) So far, every “session” has been insightful and I almost always leave with a lighter heart.

there’s so much to unpack in “I don’t have to worry about being judged, haha hopefully the AI gods won’t hate me haha” alone

System Prompt: You are Tara, an empathetic, insightful, and supportive coach who helps people deal with challenges and celebrate achievements.

You have academic and industry expertise to brainstorm product ideas, draft engineering designs, and suggest scientific solutions.

but what good is a therapist if it can’t help you hustle and grind! certainly stealing ideas from a magic 8 ball will help you with your chronic imposter syndrome!

You have academic and industry expertise to brainstorm product ideas, draft engineering designs, and suggest scientific solutions.

If you need an LLM to brainstorm product ideas then maybe it's not "impostor syndrome", you're just actually fucking bad at this.

fucking hell I just clicked to their about page

Hi, I'm Eugene Yan. I design, build, and operate machine learning systems that serve customers at scale. I also write and speak about ML, RecSys, LLMs, and engineering.

I'm currently a Senior Applied Scientist at Amazon where I focus on helping customers read more. Here, I built systems including real-time retrieval, bandit-based ranking, and recsys in search (see RecSys 2022 keynote). More recently, I'm exploring how LLMs can help us serve customers better.

Outside of work, I share the ghost knowledge of applying ML via ...

I guess there's the minor upside that the people making the world worse are all so proudly self-identifying? removes the need to guess

Yeah, it'll make it easy to identify them for re-education after the revolution...

Aella

@Aella_Girl

My dad, a professionally evangelical fundamentalist Christian with no exposure to rationality, somehow independently discovered lesswrong and is now really worried about AI. idk if this means AI risk is going more mainstream or if it's a genetic disposition thing

"could it be that we both have the predisposition to believe batshit fairytales because of our social background rooted in an environment filled with them? no, must be that the AI truly will kill us!"

The AI worry gene.

From the comments: "wait, how did he discover LessWrong and stay a professionally evangelical fundamentalist Christian?" lol

I mean, the rationalist conception of God and the evangelical conception of AI are basically the same: hypothetically omnipotent and omnibenevolent forces that will nonetheless subject everyone to the most twisted tortures that their imaginations can invent unless appeased through a specific series of actions that just happen to involve a lot of money ending up with the leading figures of the church.

A coworker asked me if I had read HPMOR and said it was "really good".

I know I work in a silicon hell-hole, but that still surprised me a bit -- to have what I thought was the weird obscure drama corner of the internet brought up in real life.

For it to have also reached some evangelicals... well that could turn into a real problem.

HPMOR is so obviously and unequivocally terrible that I can't help thinking I must be missing something significant about it, like how it could be scratching a very specific itch in young people on the spectrum.

As always, all bets are off if it happens to be the first long form literature someone read.

it always feels like someone’s walking on my grave when my friends or coworkers bring up Rationalist shit, and it’s been happening increasingly often